Performance Evaluation of Deep Learning Techniques in Gesture Recognition Systems

DOI:

https://doi.org/10.12928/biste.v6i1.10120

Keywords:

Gesture Recognition, Deep Learning Variants, Machine Learning, Human-Computer Interaction, Deep Learning Comparison

Abstract

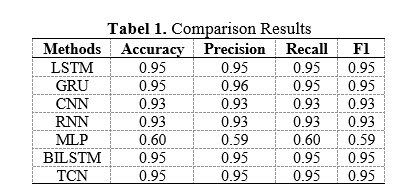

As human-computer interaction grows more sophisticated, gesture recognition systems have become increasingly important in sectors such as healthcare, smart device interfacing, and immersive gaming. This study compares seven deep learning models on their ability to recognize gestures accurately: Long Short-Term Memory Networks (LSTMs), Gated Recurrent Units (GRUs), Convolutional Neural Networks (CNNs), Simple Recurrent Neural Networks (RNNs), Multi-Layer Perceptrons (MLPs), Bidirectional LSTMs (BiLSTMs), and Temporal Convolutional Networks (TCNs). The models were evaluated on a dataset representing varied human gestures and scored on accuracy, precision, recall, and F1 score. LSTMs, GRUs, BiLSTMs, and TCNs outperformed the others, with scores between 0.93 and 0.95. MLPs trailed with scores of about 0.59 to 0.60, which underscores how poorly non-temporal models handle sequential data. The results indicate that model selection is pivotal for system performance and that capturing the temporal patterns in gesture sequences is crucial. The study notes limitations in dataset diversity and computational demands, and it calls for further research into the operational efficiency of these models. Future work could explore hybrid models and real-time processing to make gesture recognition systems more interactive and accessible. This research provides a benchmark for selecting and implementing suitable computational methods in gesture recognition.
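The four evaluation metrics named in the abstract can be illustrated with a minimal sketch. The abstract does not say how per-class scores were averaged, so macro averaging is an assumption here, and the function name `classification_scores` and the gesture labels are hypothetical, not taken from the paper.

```python
from collections import Counter


def classification_scores(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall, and F1.

    Macro averaging is an assumption; the source does not state
    which averaging scheme was used.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    # Per-class true positives, false positives, and false negatives.
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1
            fn[truth] += 1

    labels = set(y_true) | set(y_pred)
    precisions, recalls, f1s = [], [], []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        pr_sum = precision + recall
        f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    # Hypothetical gesture labels, for illustration only.
    truth = ["wave", "wave", "swipe", "swipe"]
    predicted = ["wave", "swipe", "swipe", "swipe"]
    print(classification_scores(truth, predicted))
```

Applied to each model's predictions on a held-out test set, a function like this would yield one row of the kind of comparison the abstract summarizes.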

License

Copyright (c) 2024 Gregorius Airlangga

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
