Improved DeepFake Image Generation Using StyleGAN2-ADA with Real-Time Personal Image Projection

DOI:

https://doi.org/10.12928/biste.v7i4.14659

Keywords:

DeepFake Image Generation, StyleGAN2-ADA, Generative Adversarial Networks, Real-Time Projection, Personal Image Dataset, Adaptive Discriminator Augmentation

Abstract

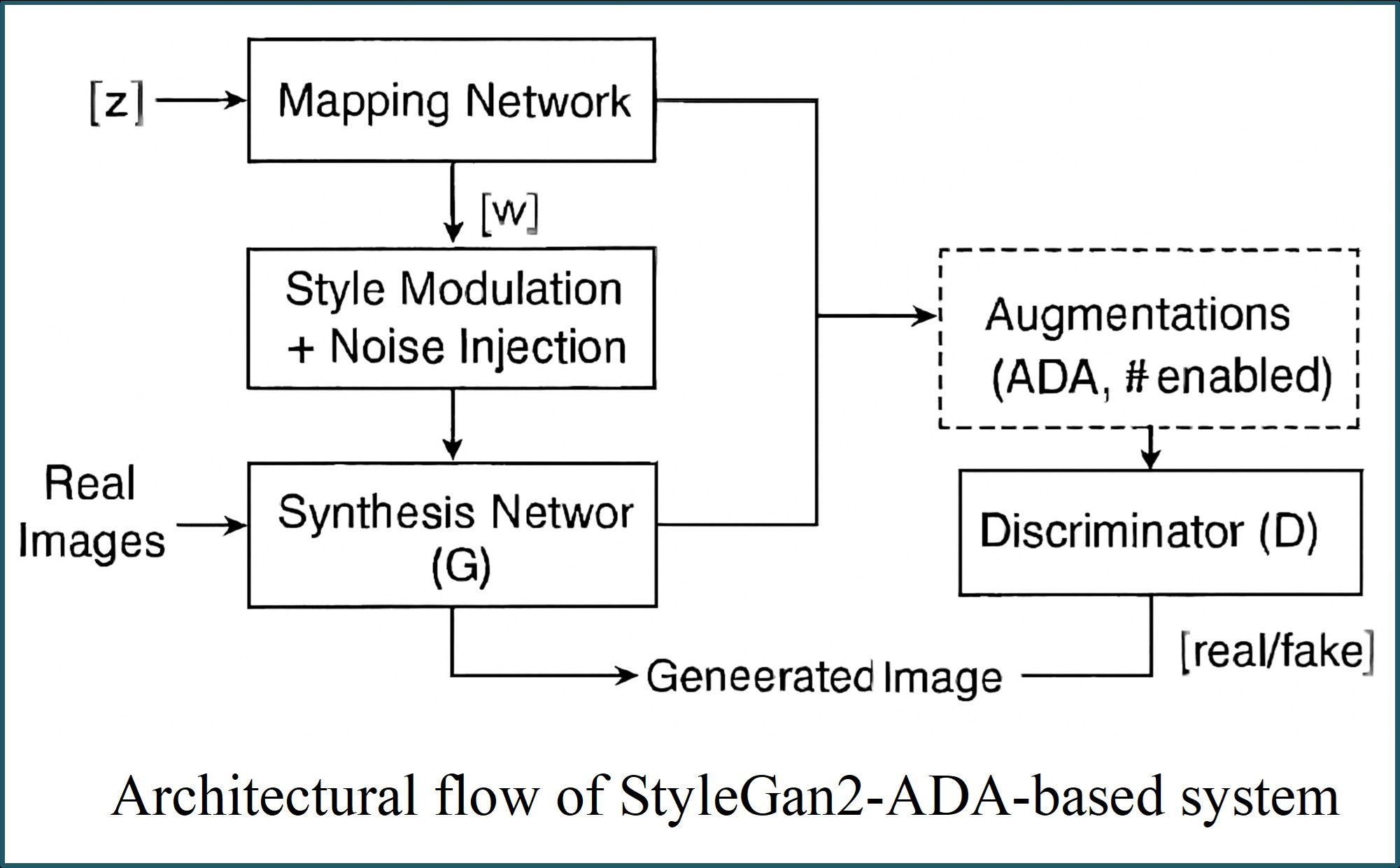

This paper presents an improved approach to DeepFake image generation using the StyleGAN2-ADA framework. The system is designed to generate high-quality synthetic facial images in real time from a limited dataset of personal photos. The Adaptive Discriminator Augmentation (ADA) mechanism stabilizes training without modifying the network architecture, enabling robust image generation even with small-scale datasets. Real-time image capture and projection techniques are integrated to enhance personalization and identity consistency. The experimental results demonstrate that the proposed method significantly outperforms the baseline StyleGAN2 model. The proposed StyleGAN2-ADA system achieves 99.1% identity similarity, a low Fréchet Inception Distance (FID) of 8.4, and a latency of less than 40 ms per generated frame. This approach provides a practical solution for dataset augmentation and supports ethical applications in animation, digital avatars, and AI-driven simulations.
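To make the ADA mechanism mentioned above more concrete, the following is a minimal sketch of its feedback loop as described in the original ADA paper (Karras et al., 2020), not the code used in this work. The augmentation probability `p` is nudged up when the discriminator appears to overfit and nudged down otherwise, leaving the generator and discriminator architectures unchanged. The function names, the clamp to [0, 1], and the default values (`target=0.6`, `interval=4`, `ramp_images=500_000`) are assumptions chosen for illustration.

```python
def sign(x: float) -> int:
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def overfitting_heuristic(real_logits):
    """Estimate r_t as the mean sign of the discriminator outputs on real images.

    Values near 1 suggest the discriminator is very confident on the
    training set (overfitting); values near 0 suggest it is not.
    """
    return sum(sign(v) for v in real_logits) / len(real_logits)


def update_augment_p(p, real_logits, target=0.6, batch_size=32,
                     interval=4, ramp_images=500_000):
    """Move the augmentation probability p one step toward the target.

    All default values are illustrative assumptions. The step size is
    chosen so that p could travel from 0 to 1 after `ramp_images`
    training images when updated every `interval` minibatches.
    """
    r_t = overfitting_heuristic(real_logits)
    step = batch_size * interval / ramp_images
    p = p + sign(r_t - target) * step
    # Clamping to [0, 1] is an assumption of this sketch.
    return min(max(p, 0.0), 1.0)


if __name__ == "__main__":
    p = 0.0
    # Hypothetical discriminator logits for a batch of real images.
    confident_batch = [2.1, 1.7, 0.9, 3.0]
    for _ in range(10):
        p = update_augment_p(p, confident_batch)
    print(f"augmentation probability after 10 updates: {p:.6f}")
```

In a training loop, `update_augment_p` would be called every few minibatches with the discriminator's recent outputs on real images, and the resulting `p` would control how often augmentations are applied to the images shown to the discriminator.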

License

Copyright (c) 2025 Ali A. Abed, Doaa Alaa Talib, Abdel-Nasser Sharkawy

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
