Transformer Models in Deep Learning: Foundations, Advances, Challenges and Future Directions

DOI:

https://doi.org/10.12928/biste.v7i2.13053

Keywords:

Transformer, Self-Attention, Natural Language Processing, Deep Learning, Models

Abstract

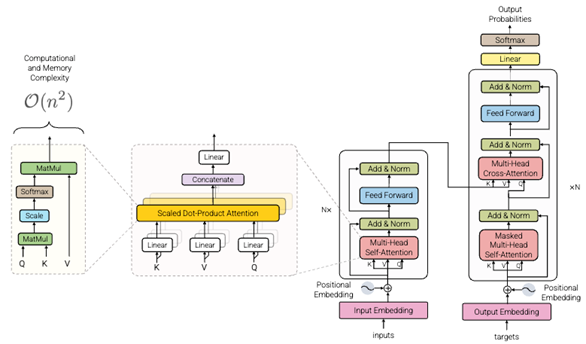

Transformer models have significantly advanced deep learning by introducing parallel processing and enabling the modeling of long-range dependencies. Despite their performance gains, their high computational and memory demands hinder deployment in resource-constrained environments such as edge devices and real-time systems. This review analyzes and compares Transformer architectures by categorizing them into encoder-only, decoder-only, and encoder-decoder variants and examining their applications in natural language processing (NLP), computer vision (CV), and multimodal tasks. Representative models (BERT, GPT, T5, ViT, and MobileViT) are selected based on architectural diversity and relevance across domains. Core components, including self-attention mechanisms, positional encoding schemes, and feed-forward networks, are dissected using a systematic review methodology, supported by a visual framework to improve clarity and reproducibility. Performance comparisons are discussed using standard evaluation metrics such as accuracy, F1-score, and Intersection over Union (IoU), with particular attention to trade-offs between computational cost and model effectiveness. Lightweight models such as DistilBERT and MobileViT are analyzed for their deployment feasibility. Major challenges, including quadratic attention complexity, hardware constraints, and limited generalization, are explored alongside solutions such as sparse attention mechanisms, model distillation, and hardware accelerators. Additionally, ethical aspects including fairness, interpretability, and sustainability are critically reviewed in relation to Transformer adoption across sensitive domains. This study offers a domain-spanning overview and proposes practical directions for future research aimed at building scalable, efficient, and ethically aligned Transformer-based systems suited for mobile, embedded, and healthcare applications.
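To make the quadratic attention complexity named in the abstract concrete, the following is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is an illustration under stated assumptions, not the formulation used by any of the reviewed models: the function names `softmax` and `self_attention` are hypothetical, and queries, keys, and values are taken to be the raw input vectors instead of learned projections.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Single-head scaled dot-product self-attention.

    Assumption for brevity: queries, keys, and values are all the raw
    input vectors (identity projections); real models learn separate
    projection matrices for each.
    """
    n, d = len(x), len(x[0])
    scale = math.sqrt(d)
    # n x n score matrix: this is the term that grows quadratically
    # with sequence length n.
    scores = [
        [sum(a * b for a, b in zip(x[i], x[j])) / scale for j in range(n)]
        for i in range(n)
    ]
    weights = [softmax(row) for row in scores]
    # Each output vector is a weighted average of all value vectors.
    out = [
        [sum(weights[i][j] * x[j][k] for j in range(n)) for k in range(d)]
        for i in range(n)
    ]
    return out, weights


# Three toy "tokens" with two features each (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, attn = self_attention(tokens)
```

Because `attn` holds one weight for every pair of tokens, doubling the sequence length quadruples both the memory and the arithmetic for this step, which is the cost that sparse attention mechanisms aim to reduce.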

License

Copyright (c) 2025 Iis Setiawan Mangkunegara, Purwono Purwono, Alfian Ma’arif, Noorulden Basil, Hamzah M. Marhoon, Abdel-Nasser Sharkawy

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
