Evaluation of the Effectiveness of Hand Gesture Recognition Using Transfer Learning on a Convolutional Neural Network Model for Integrated Service of Smart Robot

DOI:

https://doi.org/10.12928/biste.v7i4.14507

Keywords:

Transfer Learning, Hand Gesture Recognition, Convolutional Neural Network, Service Robot Integrated, Innovation, Information and Communication Technology, Infrastructure

Abstract

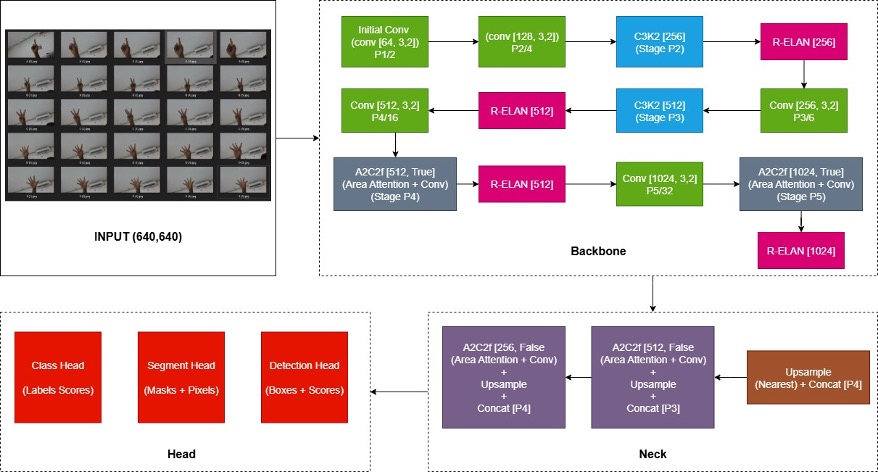

This study develops and evaluates a transfer learning approach on convolutional neural networks (CNNs), built on the proposed YOLOv12 architecture, for recognizing hand gestures in real time on an integrated service robot. It also compares the performance of MobileNetV3, ResNet50, and EfficientNetB0, the YOLOv8 model used in previous research, and the proposed YOLOv12 model. The research contributes to SDG 4 (Quality Education), SDG 9 (Industry, Innovation and Infrastructure), and SDG 11 (Sustainable Cities and Communities) by enhancing intelligent human–robot interaction for educational and service environments. The study applies an experimental method that compares the performance of several transfer learning models on hand gesture recognition. The custom dataset consists of annotated hand gesture images, on which the models are fine-tuned to improve robustness under different lighting conditions, camera angles, and gesture variations. The evaluation metrics are mean Average Precision (mAP), inference latency, and computational efficiency, which together determine the most suitable model for deployment on integrated service robots. The test results show that the YOLOv12 model achieved an mAP@0.5 of 99.5% with an average inference time of 1–2 ms per image, while maintaining stable detection performance under varying conditions. The other CNN-based architectures (MobileNetV3, ResNet50, and EfficientNetB0) achieved accuracies between 97% and 99%, so YOLOv12 demonstrated superior performance. It also outperformed previous research using YOLOv8 (91.6% accuracy).
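To make the mAP@0.5 metric concrete, the following is a minimal Python sketch of how average precision at an IoU threshold of 0.5 can be computed for a single gesture class. It is not the authors' evaluation code. The function names (`iou`, `average_precision`, `mean_average_precision`), the box format `(x1, y1, x2, y2)`, and the simplifying assumption that all boxes come from one image are hypothetical choices made for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    preds: List[Tuple[float, Box]],  # (confidence score, predicted box)
    truths: List[Box],               # ground-truth boxes of the same class
    iou_thr: float = 0.5,
) -> float:
    """Area under the precision-recall curve for one class (simplified)."""
    if not truths:
        return 0.0
    matched = [False] * len(truths)
    tp_flags: List[bool] = []
    # Visit predictions from most to least confident.
    for _score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, truth in enumerate(truths):
            if matched[j]:
                continue  # each ground-truth box may be matched only once
            overlap = iou(box, truth)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        is_tp = best_j >= 0 and best_iou >= iou_thr
        if is_tp:
            matched[best_j] = True
        tp_flags.append(is_tp)

    # Cumulative precision and recall after each prediction.
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += int(flag)
        precisions.append(tp / k)
        recalls.append(tp / len(truths))

    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum rectangle areas under the enveloped curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP is the mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

With two ground-truth boxes and three predictions, of which the second-most confident one misses, `average_precision` accumulates 0.5 of recall at precision 1 and the remaining 0.5 at precision 2/3. Evaluation toolkits differ in details such as interpolation and cross-image matching, so values from this sketch are not expected to reproduce the reported figures exactly.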

License

Copyright (c) 2025 Faikul Umam, Ach. Dafid, Hanifudin Sukri, Yuli Panca Asmara, Md Monzur Morshed, Firman Maolana, Ahcmad Yusuf

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
