Guava Fruit Detection and Classification Using Mask Region-Based Convolutional Neural Network

DOI:

https://doi.org/10.12928/biste.v4i3.7412

Keywords:

Mask R-CNN, Classification, Detection, Guava fruit, Computer vision

Abstract

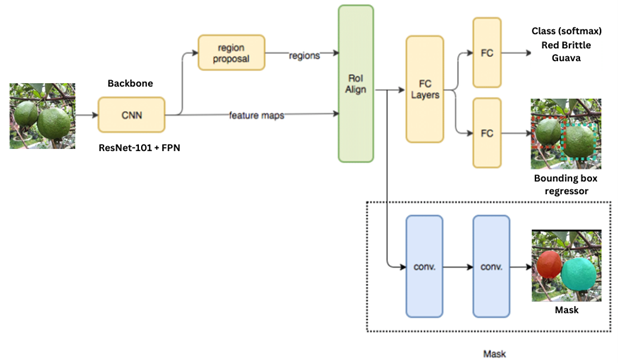

Guava comes in many varieties, each with different nutritional content, shape, and color, and with so many varieties on the market, people often find it difficult to identify them correctly. In industry, classifying and segmenting guava fruit is the first important step in measuring fruit quality. Quality inspection is still usually done manually by observing size, shape, and color, which is prone to human error. This research therefore proposes a method to detect and classify guava fruit automatically using computer vision. It implements a Mask Region-Based Convolutional Neural Network (Mask R-CNN), which extends Faster R-CNN with a branch that predicts a segmentation mask for each region of interest in parallel with classification and bounding box regression. The system classifies each guava fruit into its category, determines its position, and marks its region. These outputs can be used for further analysis such as quality inspection. In the performance evaluation, the Mask R-CNN method achieves an mAR of 88%, an mAP of 90%, and an F1-score of 89% for guava detection and classification. These results indicate that the proposed method performs well in detecting and classifying guava fruit.
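As a quick consistency check on the reported scores, the sketch below computes an F1-score as the harmonic mean of precision and recall. It assumes that the reported mAP (90%) plays the role of precision and the reported mAR (88%) plays the role of recall; the abstract does not state how the F1-score was derived, so this pairing and the helper name `f1_score` are illustrative assumptions, not the authors' procedure.

```python
def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both inputs are zero
    return 2 * precision * recall / (precision + recall)


# Scores reported in the abstract. Treating mAP as precision and
# mAR as recall is an assumption made only for this check.
reported_map = 0.90
reported_mar = 0.88

f1 = f1_score(reported_map, reported_mar)
print(f"F1 = {f1:.4f}")  # prints "F1 = 0.8899"
```

Under that assumption the result rounds to 89%, which agrees with the F1-score stated in the abstract.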


License

Copyright (c) 2023 Bayu Alif Farisqi, Adhi Prahara

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
