ISSN: 2685-9572 Buletin Ilmiah Sarjana Teknik Elektro

Vol. 8, No. 1, February 2026, pp. 241-257

Profiling Digital Competency of Prospective Vocational IT Educators Using Generalized DINA Model: A Cognitive Diagnostic Approach

I Nyoman Indhi Wiradika 1, Samsul Hadi 2, Mohammad Khairudin 3, Syarief Fajaruddin 4

1,2,3 Educational Research and Evaluation, Graduate School, Universitas Negeri Yogyakarta, Yogyakarta, Indonesia

4 Indonesian Language and Literature Education, Faculty of Education, Universitas Sarjanawiyata Tamansiswa, Yogyakarta, Indonesia

ARTICLE INFORMATION

Article History: Received 15 November 2025; Revised 28 January 2026; Accepted 13 February 2026

ABSTRACT

Indonesia faces a significant shortage of digitally competent vocational teachers, yet existing assessment instruments often rely on aggregate scores that fail to diagnose specific cognitive deficits. This study addresses this gap by developing a diagnostic assessment instrument for vocational IT pre-service teachers. The research contribution is the validation of a domain-specific Cognitive Diagnostic Model (CDM) framework that integrates general digital pedagogy with vocational IT technical expertise, enabling precise attribute mastery profiling. The study employed a cross-sectional survey design with a purposive sample of 270 informatics education students. Data were analyzed using a multi-stage psychometric approach, combining Classical Test Theory (CTT), Confirmatory Factor Analysis (CFA), and the Generalized Deterministic Inputs, Noisy "And" Gate (G-DINA) model to determine attribute mastery. The results demonstrated that the 32-item instrument achieved excellent model fit (RMSEA₂=0.011) and outstanding classification accuracy (93.71%). Systematic profiling revealed that female students consistently outperformed males across all dimensions, while a non-linear developmental trajectory was observed with a significant competency decline in the third semester followed by recovery. In conclusion, the G-DINA-based instrument provides a robust diagnostic tool for identifying specific learning needs, suggesting that teacher preparation programs require targeted interventions during critical transition periods to support continuous competency development.

Keywords: Digital Competency; Cognitive Diagnostic Models; G-DINA; Vocational IT Education; Pre-Service Teachers

Corresponding Author: I Nyoman Indhi Wiradika, Educational Research and Evaluation, Graduate School, Universitas Negeri Yogyakarta, Yogyakarta, Indonesia. Email: inyoman.2020@student.uny.ac.id

This work is open access under a Creative Commons Attribution-Share Alike 4.0

Document Citation: I. N. I. Wiradika, S. Hadi, M. Khairudin, and S. Fajaruddin, “Profiling Digital Competency of Prospective Vocational IT Educators Using Generalized DINA Model: A Cognitive Diagnostic Approach,” Buletin Ilmiah Sarjana Teknik Elektro, vol. 8, no. 1, pp. 241-257, 2026, DOI: 10.12928/biste.v8i1.15340.

- INTRODUCTION

- Digital Competence for Vocational IT Pre-Service Teachers

Digital transformation has fundamentally reshaped workforce requirements, creating unprecedented demand for digitally proficient professionals across sectors. Indonesia faces significant challenges in preparing such a workforce: projected demand amounts to approximately 9 million skilled digital workers during 2015-2030 [1], yet the country's human capital capacity in technological proficiency remains constrained. Empirical evidence indicates that workers possessing advanced digital competencies constitute a negligible proportion of the workforce, significantly lagging regional counterparts in Southeast Asia [2][3]. This gap becomes increasingly critical within vocational education contexts, where educators must not only master technical competencies but also effectively integrate technology into pedagogical practice [4]-[6].

Digital competence for prospective vocational IT educators constitutes a multidimensional construct encompassing technical content mastery, digital pedagogical capabilities, professionalism, and social engagement within digital ecosystems [6]-[8]. Empirical evidence demonstrates that vocational educators' digital competence remains at moderate-to-low levels with uneven distribution across competency dimensions [9]-[11]. Indonesian vocational teachers exhibit similar patterns, characterized by substantial deficiencies in advanced digital integration skills and pronounced regional disparities [12]-[14].

Digital competency within vocational IT education contexts represents a multidimensional construct integrating technical expertise, pedagogical knowledge, and professional dispositions [6],[8],[15][16]. The construct extends beyond basic digital literacy to encompass sophisticated capabilities enabling educators to design technology-enhanced learning environments, facilitate learner development, and model professional digital practices [9][10],[17][18].

The DigCompEdu framework, developed through extensive consultation with European education stakeholders, delineates six core competency areas: Professional Engagement, Digital Resources, Teaching and Learning, Assessment, Empowering Learners, and Facilitating Learners' Digital Competence [7][8]. This framework has been validated across multiple educational contexts and adapted for various national implementations [31]-[35]. Each competency area encompasses progressive proficiency levels from awareness (A1-A2), through integration (B1-B2), to innovation (C1-C2), enabling differentiated conceptualization of educator capabilities [24]-[26].

However, vocational IT pre-service teachers require competency frameworks extending beyond generic educator digital competence to integrate domain-specific technical proficiencies across information technology specializations [27]. These extensions encompass software engineering methodologies, network architecture and administration, database design and management systems, cybersecurity principles, cloud computing infrastructures, and emerging technologies including artificial intelligence, Internet of Things, and blockchain applications [28]-[31]. The integration of technical and pedagogical competencies creates distinctive challenges for competency modeling in vocational IT education, requiring frameworks that simultaneously address technological content knowledge and pedagogical content knowledge specific to technical domains [32]-[35].

Contemporary conceptualizations recognize digital competency as comprising three interconnected dimensions: Digital Pedagogy, encompassing technology-enhanced instructional design, learning facilitation, and pedagogical innovation; Digital Professionalism, incorporating professional communication, ethical technology use, collaborative practices, and continuous professional development; and Digital Social Engagement, addressing digital citizenship education, safe technology practices, and socially responsible technology integration [36][37]. These dimensions interact dynamically, with proficiency in one area supporting and reinforcing capabilities across others.

The foundational layer supporting all three competency dimensions consists of technological competencies operationalized through established taxonomies [38]-[40]. These taxonomies delineate hierarchical cognitive processes: technological awareness representing foundational recognition of technology affordances and constraints; technological literacy encompassing functional operational capabilities; technological capability indicating integrated application across contexts; technological creativity involving innovative technology-mediated problem-solving; and technological criticism reflecting evaluative judgment regarding appropriate technology deployment [41][42]. Empirical investigations demonstrate that competency development typically progresses sequentially through these levels, though individual trajectories vary substantially based on contextual and personal factors [11],[43].

Systematic reviews synthesizing international research indicate vocational educator digital competency levels predominantly cluster within intermediate proficiency ranges (B1-B2 within European frameworks), revealing substantial competency gaps particularly in advanced integration and innovation capabilities [17],[44][45]. Considerable variation exists across competency dimensions, with educators demonstrating relatively stronger proficiencies in basic digital resource utilization compared to sophisticated capabilities in differentiated technology-enhanced instruction and data-driven educational decision-making [46]. These patterns persist across diverse geographical contexts, suggesting systemic challenges in digital competency development rather than localized phenomena [10].

Empirical investigations have identified multifaceted factors influencing digital competency acquisition and development trajectories. Personal characteristics including age, gender, prior ICT experience, educational background, and technology self-efficacy beliefs significantly predict competency levels [43]. Institutional variables encompassing organizational support structures, technology infrastructure access, professional development opportunities, collaborative learning cultures, and leadership technology emphasis demonstrate substantial explanatory power for competency variation [34],[47]. Motivational elements including perceived technology usefulness, subjective norms regarding technology integration, behavioral intention toward technology adoption, and anxiety related to technology use mediate relationships between external factors and competency development [48]-[51].

Recent theoretical developments propose integrated frameworks specifically addressing TVET teachers' digital competence requirements. The Chinese framework for vocational teachers incorporates five interrelated dimensions: digital mindset and attitude, reflecting dispositions toward technology integration and continuous learning; digital knowledge and skills, encompassing both generic digital competencies and domain-specific technical expertise; digital education and teaching, addressing pedagogical applications of technology; digital care and support, involving learner guidance and differentiated technology-mediated instruction; and digital collaboration and development, emphasizing professional learning communities and collective capacity building [52]. This framework explicitly recognizes the dual nature of vocational teacher competency requirements, simultaneously addressing general educator digital competence and specialized vocational domain expertise.

The integration of vocational IT domain knowledge with pedagogical competencies necessitates frameworks that account for disciplinary epistemologies and professional practices characteristic of information technology fields. Vocational IT educators must demonstrate competencies enabling them to translate rapidly evolving technological innovations into pedagogically sound learning experiences while maintaining alignment with industry standards and professional certification requirements [53][54]. This dual expertise requirement distinguishes vocational IT teacher preparation from both general teacher education and domain-specific IT professional development.

Contemporary frameworks increasingly emphasize the contextual nature of digital competency development within communities of practice [55][56]. Pre-service teachers develop digital competencies through authentic participation in professional communities, engaging with experienced practitioners, collaborating on technology-mediated instructional design, and reflecting on pedagogical technology integration experiences [32],[57]. This situated perspective emphasizes the importance of apprenticeship models, mentoring relationships, and collaborative inquiry processes in fostering robust digital competency development.

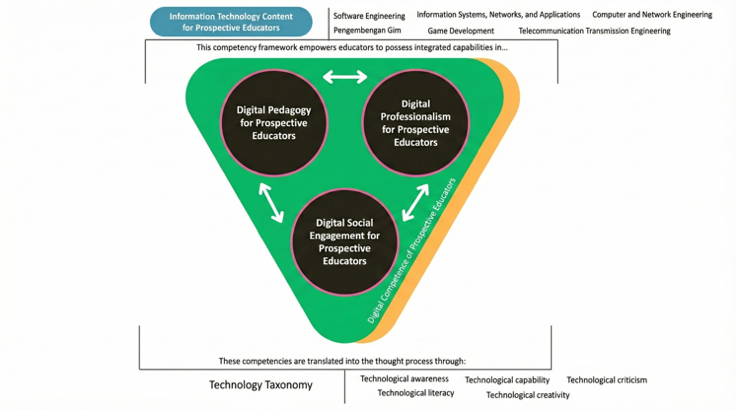

Figure 1 synthesizes the theoretical framework guiding digital competency conceptualization for vocational IT pre-service teachers. The framework positions three interconnected competency dimensions, namely Digital Pedagogy (PD), Digital Professionalism (PRO), and Digital Social Engagement (KSD), as integrated capabilities essential for effective technology-mediated vocational IT education. These three dimensions operate synergistically on the foundation of Information Technology Content Knowledge (KTI), which encompasses domain-specific technical expertise across vocational IT specializations.

The competency dimensions are grounded in taxonomic levels of technological competency progressing from awareness through literacy, capability, creativity, and criticism. The framework explicitly recognizes the domain-specific context of KTI, encompassing diverse specializations including Game Development Software, Information Systems and Applications, Computer Engineering and Networking, and Telecommunication Transmission Engineering. This integration of general educator digital competence with vocational IT domain expertise addresses the distinctive competency requirements for pre-service teachers preparing to educate future IT professionals.

- Cognitive Diagnostic Models (CDMs)

Cognitive Diagnostic Models (CDMs), alternatively termed Diagnostic Classification Models (DCMs), constitute a family of psychometric models designed to classify individuals into latent classes characterized by unique attribute profiles [58]-[60]. Unlike traditional psychometric approaches that provide unidimensional proficiency estimates, CDMs furnish fine-grained diagnostic information regarding examinees' strengths and weaknesses on specific cognitive attributes or skills required for successful task completion [61][62]. This diagnostic capability renders CDMs particularly valuable for formative assessment purposes, competency-based curriculum design, and targeted intervention development in educational contexts.

A fundamental component of CDMs involves the Q-matrix, a binary incidence matrix specifying hypothesized relationships between assessment items and latent cognitive attributes. Each element qjk indicates whether attribute k is required for correctly responding to item j, thereby operationalizing the cognitive theory underlying the assessment instrument [63][64]. Q-matrix specification represents a critical step in CDM application, as misspecification can substantially compromise classification accuracy and diagnostic validity.
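To make the Q-matrix notation concrete, the following minimal sketch encodes a small hypothetical Q-matrix over the four attributes used later in this study (KTI, PD, PRO, KSD). The item labels, the matrix entries, and the helper names `required_attributes` and `has_all_required` are illustrative assumptions and do not reproduce the study's actual Q-matrix.

```python
# Hypothetical Q-matrix: rows are items, columns are attributes.
# Entries are illustrative only; they are not the Q-matrix used in the study.
ATTRIBUTES = ["KTI", "PD", "PRO", "KSD"]

Q_MATRIX = {
    "item_1": [1, 0, 0, 0],  # simple item: requires KTI only
    "item_2": [0, 1, 0, 0],  # simple item: requires PD only
    "item_3": [1, 1, 0, 0],  # complex item: requires KTI and PD
    "item_4": [0, 0, 1, 1],  # complex item: requires PRO and KSD
}


def required_attributes(item):
    """Return the names of attributes k with q_jk = 1 for item j."""
    return [name for name, q in zip(ATTRIBUTES, Q_MATRIX[item]) if q == 1]


def has_all_required(item, profile):
    """Conjunctive check: True if the profile masters every required attribute."""
    return all(a >= q for a, q in zip(profile, Q_MATRIX[item]))


if __name__ == "__main__":
    for item in Q_MATRIX:
        print(item, required_attributes(item))
    # An examinee who has mastered KTI and PD but not PRO or KSD
    examinee = [1, 1, 0, 0]
    print(has_all_required("item_3", examinee))
    print(has_all_required("item_4", examinee))
```

The conjunctive check corresponds to the strictest reading of a Q-matrix row; general models relax this rule by letting partial mastery change the success probability.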

The Generalized Deterministic Input, Noisy "And" gate (G-DINA) model represents a saturated general CDM framework subsuming various restricted models as special cases [65]. Restricted models include the DINA (Deterministic Input, Noisy "And" gate) model requiring mastery of all specified attributes for high success probability, the DINO (Deterministic Input, Noisy "Or" gate) model requiring any single attribute mastery, the Additive Cognitive Diagnostic Model (ACDM) assuming additive attribute effects, and the Linear Logistic Model (LLM) incorporating linear attribute relationships [65][66]. The G-DINA framework employs identity, logit, or log link functions to model the probability of correct responses as a function of attribute mastery patterns, permitting flexible representation of compensatory, conjunctive, and hybrid attribute interactions.
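For reference, the identity-link form of the G-DINA item response function, as it is commonly written in the CDM literature, can be sketched as follows. The notation is introduced here for illustration and does not appear in the original text: $K_j^{*}$ is the number of attributes required by item $j$, $\boldsymbol{\alpha}_{lj}^{*}$ is the reduced attribute pattern for latent group $l$, and the $\delta$ terms are item parameters.

$$
P(X_j = 1 \mid \boldsymbol{\alpha}_{lj}^{*}) = \delta_{j0} + \sum_{k=1}^{K_j^{*}} \delta_{jk}\,\alpha_{lk} + \sum_{k=1}^{K_j^{*}-1}\sum_{k'=k+1}^{K_j^{*}} \delta_{jkk'}\,\alpha_{lk}\,\alpha_{lk'} + \cdots + \delta_{j12\cdots K_j^{*}} \prod_{k=1}^{K_j^{*}} \alpha_{lk}
$$

Under this parameterization, keeping only the intercept $\delta_{j0}$ and the highest-order interaction term gives the DINA model, while keeping only the intercept and the main effects $\delta_{jk}$ gives the additive model.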

Parameter estimation in CDM applications typically utilizes marginal maximum likelihood estimation (MMLE) via Expectation-Maximization (EM) algorithms, though Bayesian estimation approaches employing Markov Chain Monte Carlo (MCMC) methods provide viable alternatives particularly for complex model specifications or small sample contexts. Computational implementations are available through various R packages including GDINA, CDM, and measr, each offering distinct advantages for particular analytical requirements [67]-[69]. Recent developments in software capabilities facilitate complex model specifications, parallel processing for computational efficiency, and comprehensive diagnostic output generation [70].

Psychometric property evaluation of CDM analyses encompasses multiple validation components essential for establishing diagnostic inference credibility. Q-matrix validation procedures ensure accurate item-attribute specifications through empirical validation methods including the general discrimination index, mesa plot examination, and stepwise Wald test approaches [59],[67],[71]. These validation techniques systematically evaluate whether hypothesized Q-matrix entries correspond to empirical data patterns, enabling data-driven refinement of cognitive models.

Model fit evaluation employs absolute fit indices including the root mean square error of approximation for dichotomous data (RMSEA₂) and standardized root mean square residual (SRMSR), alongside relative fit indices for comparing nested or non-nested model alternatives [72]. Model comparison criteria such as Akaike Information Criterion (AIC), Bayesian Information Criterion (BIC), and sample-size adjusted BIC facilitate principled model selection balancing fit quality against parsimony [73][74]. Item-level fit assessment identifies misfitting items requiring revision or removal through standardized residual examination, log-odds ratio analysis, and transformed correlation indices.

Classification accuracy evaluation operates at multiple hierarchical levels, including overall test-level accuracy, individual attribute-level accuracy, and complete attribute pattern-level accuracy [75]. Pattern classification accuracy quantifies the proportion of examinees correctly classified into their true latent classes, while attribute-level accuracy assesses correct classification for each individual attribute. Reliability indices specific to CDM contexts include attribute-level classification consistency and accuracy indices, providing diagnostic-specific reliability evidence distinct from classical test theory or item response theory frameworks.
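The difference between attribute-level and pattern-level accuracy can be illustrated with a minimal sketch. The true and estimated profiles below are made-up values for five hypothetical examinees. In real applications the true profiles are unobserved and accuracy is estimated from model-based posterior probabilities, so this direct comparison only shows how the two levels are defined.

```python
# Hypothetical true and estimated attribute profiles (KTI, PD, PRO, KSD)
# for five examinees; all values are invented for illustration.
TRUE = [
    (1, 1, 0, 0),
    (1, 0, 0, 0),
    (1, 1, 1, 1),
    (0, 0, 0, 0),
    (1, 1, 1, 0),
]
ESTIMATED = [
    (1, 1, 0, 0),
    (1, 0, 0, 1),
    (1, 1, 1, 1),
    (0, 0, 0, 0),
    (1, 1, 0, 0),
]


def attribute_accuracy(true, est):
    """Proportion of examinees classified correctly on each single attribute."""
    n_attr = len(true[0])
    return [
        sum(t[k] == e[k] for t, e in zip(true, est)) / len(true)
        for k in range(n_attr)
    ]


def pattern_accuracy(true, est):
    """Proportion of examinees whose entire attribute profile is correct."""
    return sum(t == e for t, e in zip(true, est)) / len(true)


if __name__ == "__main__":
    print(attribute_accuracy(TRUE, ESTIMATED))  # one value per attribute
    print(pattern_accuracy(TRUE, ESTIMATED))    # single value for whole profiles
```

Because a profile counts as correct only when every attribute is correct, pattern-level accuracy cannot exceed the lowest attribute-level accuracy.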

Recent methodological developments have expanded CDM applicability and robustness across diverse assessment contexts. Approaches for empirically determining optimal attribute numbers address model specification uncertainty through sequential likelihood ratio tests, information criteria comparison, and cross-validation procedures [76]. Small sample performance investigations demonstrate viable CDM applications with samples substantially smaller than traditional IRT requirements, though classification accuracy may be compromised depending on test length, attribute structure, and item quality [77]. Differential item functioning (DIF) detection methods adapted for CDM frameworks enable evaluation of measurement invariance across demographic groups, identifying items functioning differentially for examinees with equivalent attribute profiles. These methodological innovations enhance CDM utility for high-stakes assessment applications requiring rigorous validity evidence and fairness documentation.

A fundamental limitation in digital competency assessment concerns the absence of instruments providing granular diagnostic information about specific cognitive attribute mastery [78]. Most existing instruments rely on self-assessment approaches prone to bias and failing to deliver actionable diagnostic feedback [79][80]. General frameworks like DigCompEdu provide valuable structure but lack specificity for vocational IT education, which requires domain-specific technical competencies, digital pedagogy, and IT-sector professional skills [8],[43],[81],[82].

Reliance on traditional aggregate scoring or unidimensional Item Response Theory (IRT) often obscures the complexity of multidimensional digital competence. These approaches typically assume a compensatory mechanism, where high proficiency in one domain masks critical deficits in another, thereby distorting the true structure of competence [58],[65]. Such lack of diagnostic granularity leads to generalized remediation strategies that fail to target specific latent attribute mastery [83]. Consequently, a shift toward diagnostic classification models is essential to isolate cognitive attributes and ensure targeted interventions for vocational educators [84].

Cognitive Diagnostic Models (CDMs), specifically the Generalized Deterministic Inputs, Noisy "And" Gate (G-DINA) approach, provide methodological solutions for generating fine-grained diagnostic information regarding attribute mastery patterns [65],[67],[85]. Unlike classical test theory or item response theory producing unidimensional scores, CDMs classify examinees into discrete mastery profiles across multiple attributes, enabling identification of specific strengths and weaknesses [58],[68]. G-DINA as a general CDM framework subsumes various restricted models while permitting flexible modeling of attribute interactions [65][66],[86].

This study addresses identified gaps through four objectives:

- Developing and validating a digital competency assessment instrument for vocational IT pre-service teachers using multiple psychometric approaches;

- Analyzing competency profiles using G-DINA to identify attribute mastery patterns;

- Investigating profile variations based on demographic characteristics (gender and semester level);

- Identifying attributes requiring strengthening in teacher preparation programs.

Contributions include a comprehensive assessment framework specific to vocational IT teacher contexts and empirical evidence informing curriculum development and instructional improvement.

Figure 1. Digital Competency Conceptualization for Vocational IT Pre-Service Teachers

- METHODS

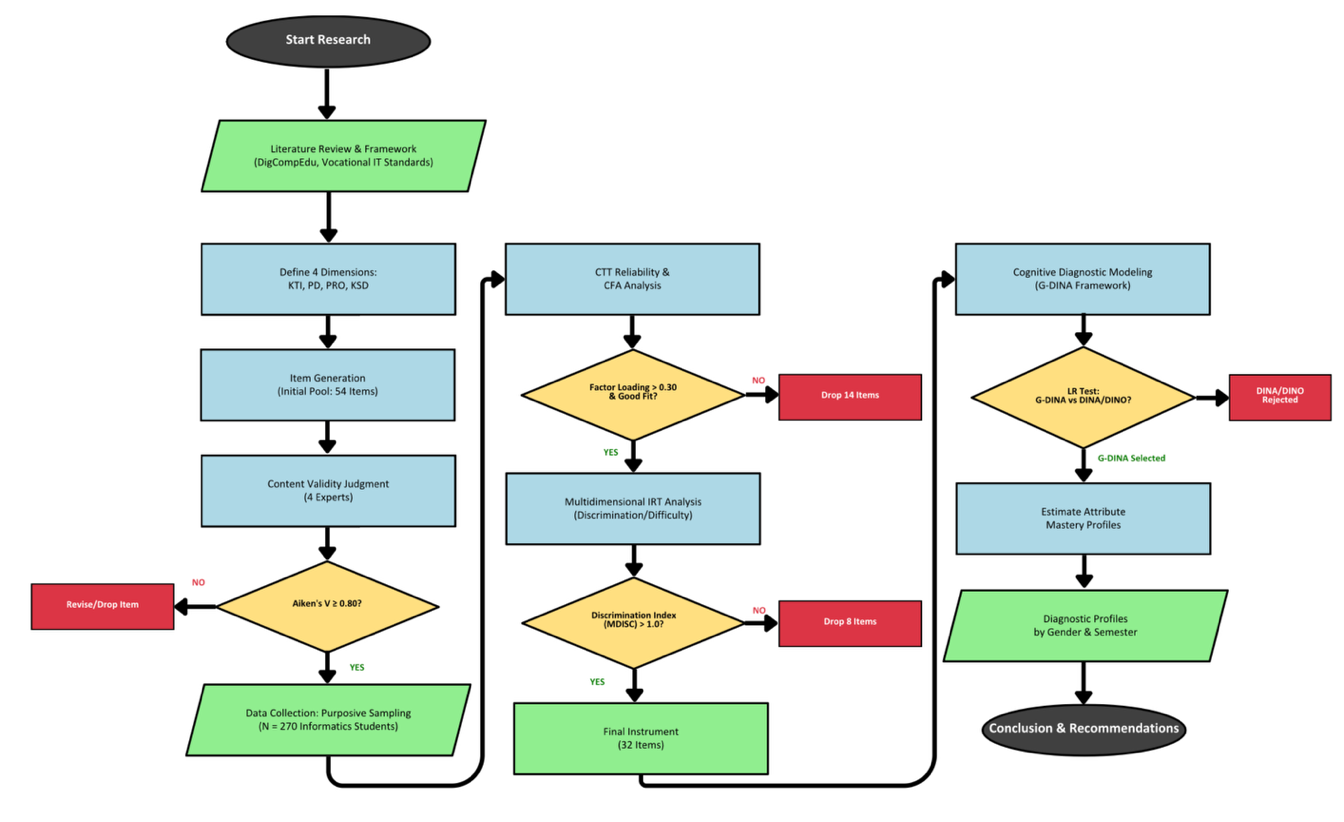

This quantitative study employed a cross-sectional survey design utilizing advanced psychometric approaches to develop and validate a comprehensive digital competency assessment instrument for vocational IT pre-service teachers. The methodological framework integrated Classical Test Theory (CTT), Confirmatory Factor Analysis (CFA), Multidimensional Item Response Theory (MIRT), and Cognitive Diagnostic Modeling (CDM) using the Generalized Deterministic Inputs, Noisy "And" Gate (G-DINA) model to provide fine-grained diagnostic information about attribute mastery patterns. The research procedure is comprehensively visualized in Figure 2. The study followed a systematic five-stage workflow: (1) conceptualization of the four-dimensional framework; (2) instrument development and content validation involving expert judgment; (3) purposive data collection (N=270); (4) rigorous psychometric filtering using CTT, CFA, and MIRT, which reduced the initial 54 items to 32 high-quality items; and (5) cognitive diagnostic analysis using the G-DINA model to generate attribute mastery profiles.

Figure 2. Research Methodology Flowchart

- Research Design and Participants

This study employed a quantitative survey design with cross-sectional data collection conducted between September and November 2025. The research population comprised informatics education students enrolled in a teacher preparation program at a public university in Indonesia. Ethical guidelines were strictly followed, and all participants provided informed consent. The participants were selected using a purposive sampling technique rather than convenience sampling to ensure representativeness of the vocational teacher preparation trajectory. The specific inclusion criteria were: (1) active enrollment in the Informatics Education study program (representing vocational IT teacher candidates); (2) distribution across specific semester levels (1, 3, 5, and 7) to capture developmental stages from novice to advanced pre-service teachers; and (3) completion of foundational pedagogical coursework for upper-semester students. This stratification ensures that the sample reflects the diverse competency levels required for diagnostic modeling calibration. The final sample consisted of 270 participants. The sample size exceeded the minimum requirement for CDM analysis (N ≥ 200) recommended by recent methodological studies [87] and provided adequate statistical power (1-β > 0.80) for detecting medium effect sizes (d = 0.50) at α = 0.05 significance level. Table 1 presents the detailed demographic characteristics of participants.

Table 1. Demographic Characteristics of Participants (N=270)

Characteristic | Category | Frequency (n) | Percentage (%) |

Gender | Male | 191 | 70.7% |

Gender | Female | 79 | 29.3% |

Academic Level | Semester 1 | 72 | 26.7% |

Academic Level | Semester 3 | 123 | 45.6% |

Academic Level | Semester 5 | 52 | 19.3% |

Academic Level | Semester 7 | 23 | 8.5% |

Total | | 270 | 100% |

- Instrument Development

The digital competency assessment framework integrated multiple established theoretical models: the European Framework for the Digital Competence of Educators [8], UNESCO ICT Competency Framework for Teachers [88], and International Society for Technology in Education Standards for Educators [89]. These frameworks were synthesized with vocational IT education-specific requirements identified through systematic literature review and expert consultation with IT education faculty, educational technology specialists, and industry practitioners.

The resulting framework conceptualized digital competency for vocational IT pre-service teachers as a multidimensional construct comprising four primary dimensions, each containing four specific attributes (16 attributes total). It is important to note that within the Cognitive Diagnostic Model (CDM) framework employed in this study, these four competency dimensions (KTI, PD, PRO, and KSD) are operationalized as latent 'attributes' in the Q-matrix structure. Consequently, the terms 'dimension' and 'attribute' are used interchangeably throughout the results section, with 'attribute' referring specifically to the parameters estimated by the G-DINA model. Table 2 presents the comprehensive dimensional structure with theoretical foundations and operational definitions.

Table 2. Digital Competency Dimensional Framework

Attribute | Operational Definition |

IT Content Knowledge (KTI) | Proficiency in the knowledge and skills necessary for the utilization, development, and management of various Information and Communication Technologies (ICT) for educational purposes. |

Digital Pedagogy (PD) | The competence to design, execute, and evaluate learning processes that effectively integrate digital technology, including the design of digital learning activities, the facilitation of online collaboration, the development of digital-based self-regulated learning, and the implementation of meaningful digital assessment and feedback |

Digital Professionalism (PRO) | The ability to demonstrate professional attitudes and conduct in leveraging digital technology, which encompasses the application of digital ethics, the engagement in reflective practice concerning technology usage, the establishment of industry partnerships, and leadership in digital transformation within educational settings |

Digital Social Engagement (KSD) | The aptitude for establishing and maintaining socio-professional relationships through digital technology, including the application of accessibility and inclusion principles in digital learning, the cultivation of online professional networks, the execution of effective digital collaboration, and ethical and persuasive digital communication |
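When the four dimensions in Table 2 are treated as binary mastery attributes, each examinee falls into one of 2^4 = 16 possible latent profiles. The sketch below enumerates that profile space. The helper names `all_profiles` and `describe` are hypothetical and serve only to illustrate the structure of the classification.

```python
from itertools import product

ATTRIBUTES = ("KTI", "PD", "PRO", "KSD")


def all_profiles(n_attributes):
    """Enumerate every binary mastery pattern for n_attributes attributes."""
    return list(product((0, 1), repeat=n_attributes))


def describe(profile):
    """List the mastered attributes in a profile, or 'none' if all are 0."""
    mastered = [name for name, a in zip(ATTRIBUTES, profile) if a == 1]
    return ", ".join(mastered) if mastered else "none"


if __name__ == "__main__":
    profiles = all_profiles(len(ATTRIBUTES))
    print(len(profiles))  # number of latent classes for four attributes
    for p in profiles:
        print(p, "->", describe(p))
```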

- Q-Matrix Development

Development proceeded through iterative expert review involving four specialists (two educational technology experts, two psychometricians) who independently evaluated item-attribute mappings. The Q-matrix employed binary coding (1 = attribute required for item solution; 0 = attribute not required) following established CDM conventions. Items were strategically designed to measure single attributes (simple items) or multiple attributes simultaneously (complex items requiring conjunctive or compensatory attribute combinations).

- Item Generation and Expert Review

The initial item pool consisted of 54 items distributed unequally across four dimensions: KTI (14), PD (14), PRO (13), and KSD (13). Item formats were diverse, including Multiple Choice (30), Code Completion (8), Sequencing (7), Matching (5), and True/False (4), all based on scenarios reflecting vocational IT teaching contexts. Items were designed to measure digital competency application.

Content validity evaluation employed Aiken's V coefficient methodology [90], with four expert reviewers independently rating each item on two dimensions using 5-point Likert scales: (1) relevance to the specified attribute; (2) representativeness of vocational IT teacher competency. Aiken's V coefficients were computed using the formula:

$$V = \frac{\sum s}{n(c - 1)} \tag{1}$$

where $s$ represents the score given by each rater minus the lowest possible score, $n$ denotes the number of raters, and $c$ indicates the number of rating categories. Items achieving the required $V$ threshold were retained.
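As a worked illustration of Aiken's V, the following minimal sketch computes the coefficient for a single item rated by four experts on a 5-point scale, matching the rating design described above. The ratings are invented for illustration, and the function name `aikens_v` is an assumption rather than part of the study's analysis code.

```python
def aikens_v(ratings, lowest=1, categories=5):
    """Aiken's V for one item: sum of (rating - lowest) over n * (c - 1)."""
    highest = lowest + categories - 1
    if any(r < lowest or r > highest for r in ratings):
        raise ValueError("rating outside the allowed scale")
    n = len(ratings)
    s_total = sum(r - lowest for r in ratings)
    return s_total / (n * (categories - 1))


if __name__ == "__main__":
    # Hypothetical ratings from four experts on a 5-point scale
    print(aikens_v([5, 5, 4, 5]))  # s = 4 + 4 + 3 + 4 = 15, so V = 15 / 16
    print(aikens_v([5, 5, 5, 5]))  # unanimous top rating gives V = 1
```

With four raters and five categories the denominator is 16, so each one-point drop by a single rater lowers V by 0.0625.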

- RESULT AND DISCUSSION

- Development and Validation of Digital Competency Assessment Instrument

The digital competency assessment instrument was developed through an iterative process integrating expert validation and rigorous psychometric analyses. The initial instrument comprised 54 items distributed across four dimensions: IT Content Knowledge (KTI, 14 items), Digital Pedagogy (PD, 15 items), Digital Professionalism (PRO, 13 items), and Digital Social Engagement (KSD, 12 items). Content validity evaluation using Aiken's V coefficient with four expert reviewers yielded values approaching 1.00 for most items, with eight items requiring revision due to misalignment between constructs and indicators or inappropriate cognitive level demands. The psychometric validation results are summarized in Table 3.

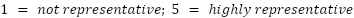

Classical Test Theory analysis (N=270) demonstrated excellent reliability, with Cronbach's alpha and KR-20 coefficients of 0.895. Confirmatory Factor Analysis validated the four-factor structure with acceptable fit (CFI=0.928, TLI=0.925, RMSEA=0.057), though 14 items showed problematic factor loadings (<0.30 or non-significant). Filtering proceeded in two stages: 14 items were first eliminated based on the CFA results, and 8 additional items identified through Multidimensional Item Response Theory analysis were then removed. The final 32-item instrument demonstrated substantially improved psychometric properties, achieving excellent model fit (CFI=0.974, TLI=0.972, RMSEA=0.044) with all dimensions showing strong reliability (Cronbach's α: 0.727-0.827, Composite Reliability: 0.723-0.831), as shown in Figure 3. The MIRT analysis revealed that 80% of items demonstrated good or excellent multidimensional discrimination (MDISC > 1.0), with the PRO dimension showing the highest mean MDISC (2.02). Item difficulty distribution was balanced, though one item (KTI.1.4.1) exhibited extreme difficulty (MDIFF=2.018) requiring elimination. These findings demonstrate successful integration of multiple psychometric frameworks to ensure instrument quality exceeding conventional single-approach validation.

Table 3. Psychometric Validation Results Across Multiple Approaches

Approach | Initial (54 items) | Final (32 items) | Interpretation |

CTT Reliability | α = 0.895 | α = 0.727-0.827 | Good-Excellent |

CFA Model Fit | CFI=0.928 | CFI=0.974 | Excellent |

MIRT MDISC | Not analyzed | 80% Good/Excellent | High discrimination |

G-DINA Fit | Not analyzed | RMSEA₂=0.011 | Excellent |

Figure 3. Item Characteristics

- Attribute Mastery Patterns Using G-DINA

The G-DINA model with four attributes (KTI, PD, PRO, KSD) demonstrated excellent fit (RMSEA₂=0.011, SRMSR=0.060), enabling precise diagnostic classification. Classification accuracy reached outstanding levels: test-level accuracy of 93.71% and attribute-level accuracies exceeding 96% for all dimensions, namely KTI (97.12%), PD (99.74%), PRO (99.46%), and KSD (96.39%). These accuracy rates substantially exceed typical CDM benchmarks of 80-90%, validating the instrument's diagnostic utility (see Table 4). Q-matrix validation through Proportion of Variance Accounted For (PVAF) methodology confirmed empirical accuracy for most items (PVAF > 0.90), with three items receiving empirical recommendations for modification. The saturated G-DINA model outperformed restricted alternatives (DINA, DINO, ACDM) in likelihood ratio tests, justifying its selection for comprehensive attribute interaction modeling. Analysis revealed that 87.5% of items (28 of 32) demonstrated excellent fit based on adjusted p-values >0.05, confirming item-level consistency with the model. The highest classification accuracy for Digital Pedagogy (99.74%) suggests exceptionally discriminative items for this dimension, while the slightly lower accuracy for Digital Social Engagement (96.39%) may indicate greater attribute complexity or overlap with other dimensions.

Table 4. G-DINA Classification Accuracy by Level and Attribute

Level/Attribute | Accuracy |

Test-Level Overall | 93.71% |

IT Content Knowledge (KTI) | 97.12% |

Digital Pedagogy (PD) | 99.74% |

Digital Professionalism (PRO) | 99.46% |

Digital Social Engagement (KSD) | 96.39% |

- Model Selection and Fit Comparison

To empirically justify the application of the saturated G-DINA model over more restrictive Cognitive Diagnostic Models (CDMs), we conducted a comparative analysis using the Likelihood Ratio Test (LRT) and information criteria (AIC and BIC). The analysis evaluated whether reduced models, specifically DINA, DINO, and A-CDM, could adequately represent the attribute interactions within the instrument. As presented in Table 5, although the reduced models yielded lower BIC values due to their parsimony (fewer parameters), the LRT demonstrated that these models were statistically rejected for a considerable proportion of the items when compared to the G-DINA baseline. Specifically, the A-CDM was rejected for 22 items (68.8%) and the DINA model was rejected for 13 items (40.6%) at the α = 0.05 level. This substantial item-level misfit indicates that assuming a single interaction mechanism (e.g., purely conjunctive or additive) is insufficient for this instrument. Consequently, the G-DINA model was selected because it provides the flexibility needed to capture the mix of attribute interactions present in the data, supporting robust diagnostic classification.

Table 5. Model Fit Comparison and Selection based on Item-Level Likelihood Ratio Test (LRT)

Model | AIC | BIC | Items with Significant Rejection (p<.05)* | Recommendation |

G-DINA | 8483.27 | 9530.41 | - (Baseline) | Selected |

DINA | 8667.28 | 8951.56 | 13 (40.6%) | Rejected |

DINO | 8660.33 | 8944.61 | 2 (6.2%) | Rejected |

A-CDM | 8420.94 | 8924.72 | 22 (68.8%) | Rejected |
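The parsimony argument can be checked directly from Table 5. Under the standard definitions AIC = -2LL + 2k and BIC = -2LL + k ln N, the difference BIC - AIC equals k(ln N - 2), so the number of estimated parameters k implied by each row can be recovered. The Python sketch below assumes those standard definitions and N = 270; the function names are illustrative.

```python
import math

N = 270  # sample size reported in the study

# (AIC, BIC) pairs copied from Table 5.
fit = {
    "G-DINA": (8483.27, 9530.41),
    "DINA": (8667.28, 8951.56),
    "DINO": (8660.33, 8944.61),
    "A-CDM": (8420.94, 8924.72),
}

def implied_parameters(aic, bic, n):
    """Solve BIC - AIC = k * (ln n - 2) for k."""
    return (bic - aic) / (math.log(n) - 2)

def implied_deviance(aic, k):
    """Recover -2 * log-likelihood from AIC = -2LL + 2k."""
    return aic - 2 * k

for model, (aic, bic) in fit.items():
    k = round(implied_parameters(aic, bic, N))
    print(f"{model:7s} k = {k:3d}  -2LL = {implied_deviance(aic, k):.2f}")
```

The implied counts are about 291 parameters for G-DINA, 140 for A-CDM, and 79 each for DINA and DINO. The value 79 is consistent with two parameters for each of 32 items plus 15 free latent class proportions for four attributes, although that decomposition is an inference and is not stated in this section.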

- Profile Variations Based on Demographic Characteristics

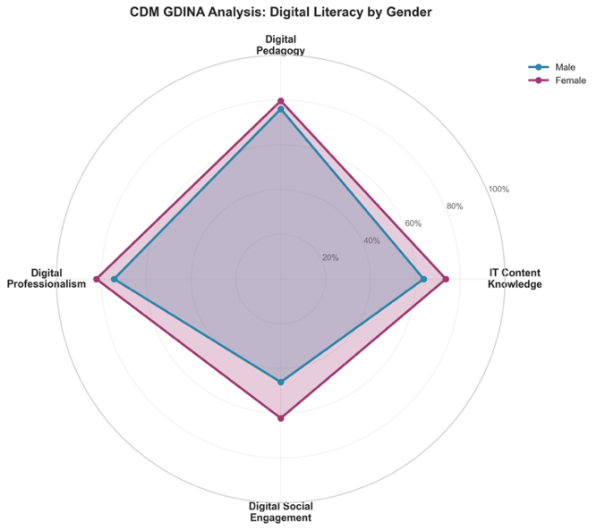

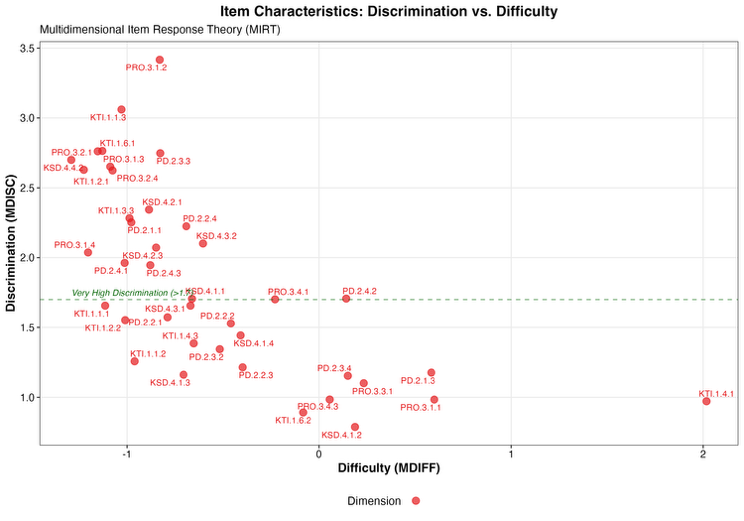

Competency profile analysis across demographic groups revealed systematic patterns. Among 270 participants (70.7% male, 29.3% female) distributed across semesters 1 (26.7%), 3 (45.6%), 5 (19.3%), and 7 (8.5%), female pre-service teachers consistently demonstrated higher attribute mastery probabilities across all four dimensions (Figure 4). Gender-based differences were most pronounced in the Digital Professionalism and Digital Pedagogy dimensions, with the smallest gaps in IT Content Knowledge. This consistent female advantage across all dimensions suggests systematic rather than domain-specific differences in competency acquisition, potentially attributable to differential pedagogical training exposure or motivational factors in digital competency development.

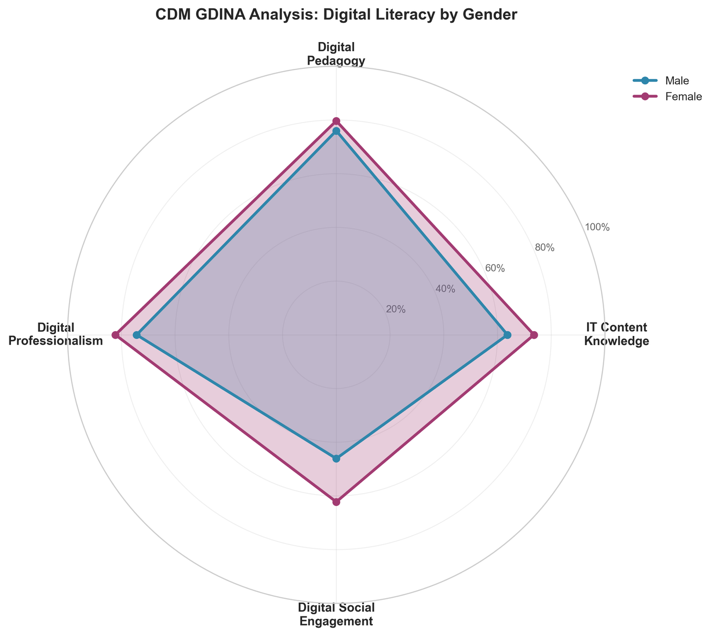

Semester-based progression revealed non-linear development patterns with a critical transition point at semester 3 (Figure 5). Unexpectedly, semester 3 students showed lower mastery probabilities across all attributes than semester 1 students, representing competency decline rather than growth. This pattern may reflect increased curriculum complexity and the introduction of advanced coursework temporarily challenging students' perceived competency. However, semesters 5 and 7 demonstrated substantial recovery and growth, with semester 7 students achieving the highest mastery levels across all dimensions. Digital Pedagogy consistently demonstrated the highest mastery probabilities across all demographic groups, indicating successful integration of pedagogical training throughout the program. The competency trajectory suggests that targeted interventions during the semester 3 transition period could prevent temporary decline and maintain continuous competency development.

- Attributes Requiring Strengthening in Teacher Preparation

Analysis of attribute mastery patterns identified specific competency areas requiring enhanced focus in teacher preparation programs. IT Content Knowledge exhibited the widest performance range and the lowest overall mastery probabilities among semester 1 and 3 students, suggesting a need for strengthened foundational technical training. The semester 3 competency decline across all attributes marks this transition period as a critical intervention point.

Digital Social Engagement, while showing adequate mastery in advanced semesters, demonstrated inconsistent development patterns with relatively lower classification accuracy (96.39%) compared to other attributes. This suggests greater complexity in this competency domain, potentially requiring more explicit instructional attention and scaffolded learning experiences throughout the program.

The gender gap across all competency dimensions, particularly in Digital Professionalism and Digital Pedagogy, indicates a need to examine pedagogical approaches that may differentially affect male and female students. The consistent female advantage suggests that teaching methods that prove effective for female students could be analyzed and adapted to benefit all learners.

Program recommendations include: (1) enhanced foundational IT content instruction in early semesters; (2) structured support interventions during the semester 3 transition; (3) explicit integration of digital social engagement competencies across the curriculum; (4) investigation of gender-responsive pedagogical approaches; and (5) continuous diagnostic monitoring using validated instruments to identify at-risk students early in their development.

- Discussion

The development of a psychometrically robust digital competency assessment instrument represents a critical methodological contribution addressing longstanding measurement challenges in vocational IT teacher preparation. The systematic integration of Classical Test Theory, Confirmatory Factor Analysis, Multidimensional Item Response Theory, and Cognitive Diagnostic Modeling establishes a comprehensive validation framework substantially exceeding conventional single-approach methodologies. This multi-method triangulation strategy aligns with contemporary measurement standards advocating rigorous construct validation through convergent psychometric evidence.

The instrument's reliability coefficients (Cronbach's α ranging 0.727-0.827; Composite Reliability 0.723-0.831) across all four competency dimensions demonstrate strong internal consistency comparable to established international frameworks. These reliability indices exceed the minimum acceptability threshold of 0.70 for research instruments, and the upper values reach the 0.80-0.90 range recommended for high-stakes educational assessment contexts. The dimensional structure's empirical validation through confirmatory factor analysis, yielding superior fit indices (CFI=0.974, TLI=0.972, RMSEA=0.044), provides compelling structural validity evidence supporting the theoretical four-factor competency framework [91][92].

Multidimensional Item Response Theory analysis revealed exceptional item discrimination capacity, with 80% of items demonstrating good-to-excellent multidimensional discrimination indices exceeding 1.0. The Digital Professionalism dimension exhibited particularly strong discriminative power (mean MDISC=2.02), suggesting items within this domain effectively differentiate between varying competency levels. This finding holds significant implications for formative assessment applications, as highly discriminative items enable precise identification of learners requiring targeted interventions. The balanced difficulty distribution across items, coupled with careful elimination of extreme-difficulty items, ensures comprehensive competency spectrum coverage from foundational awareness through advanced integration capabilities.
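For readers unfamiliar with the MDISC and MDIFF indices discussed above, the Python sketch below shows how they are commonly computed from a compensatory MIRT item in slope-intercept form, following Reckase's definitions: MDISC is the length of the slope vector and MDIFF is the negative intercept divided by MDISC. The item parameters are hypothetical, and the sketch is not taken from the study's analysis code.

```python
import math

def mdisc(slopes):
    """Multidimensional discrimination: Euclidean norm of the slope vector."""
    return math.sqrt(sum(a * a for a in slopes))

def mdiff(slopes, intercept):
    """Multidimensional difficulty: -d / MDISC in slope-intercept form."""
    return -intercept / mdisc(slopes)

# Hypothetical item loading on two of the four dimensions (NOT a study item).
slopes = [1.2, 1.6, 0.0, 0.0]
intercept = -1.0

print("MDISC =", round(mdisc(slopes), 3))
print("MDIFF =", round(mdiff(slopes, intercept), 3))
```

With these made-up values the item would count as highly discriminating under the MDISC > 1.0 criterion used in the text.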

This study advances digital competency assessment methodologies by moving beyond the limitations of traditional measurement approaches. Previous studies [11],[14] predominantly utilized Classical Test Theory (CTT) or unidimensional Item Response Theory (IRT), which typically generate a single continuous score to rank individuals. While useful for summative purposes, these methods often mask the multidimensional complexity of digital competence. In contrast, the G-DINA approach employed in this research provides a fine-grained diagnostic profile for each student, identifying specific mastery patterns (e.g., mastery of Digital Pedagogy but deficits in IT Content Knowledge) [65]. This diagnostic granularity aligns with recent recommendations in educational measurement [58],[84], shifting the focus from merely evaluating performance to diagnosing underlying cognitive structures for targeted intervention.

The content validity evidence, operationalized through Aiken's V coefficients approaching 1.00 with expert consensus, establishes strong alignment between assessment items and theoretical competency constructs. This rigorous expert validation process, involving educational technology specialists and psychometricians, ensures items authentically represent vocational IT educator competency requirements rather than generic digital competence [93]. The iterative refinement process, systematically eliminating 22 problematic items through combined psychometric criteria, demonstrates commitment to measurement quality over comprehensive content coverage, a critical distinction for diagnostic precision.

The G-DINA model's exceptional classification accuracy represents a substantial advancement in educational measurement precision for digital competency assessment. The test-level classification accuracy of 93.71% exceeds typical Cognitive Diagnostic Model benchmarks of 80-90% reported in the educational assessment literature [58],[59],[63]. This performance validates the instrument's capacity to reliably classify examinees into discrete attribute mastery profiles, enabling confident diagnostic inference for instructional decision-making.

Attribute-level classification accuracies exceeding 96% for all four competency dimensions, IT Content Knowledge (97.12%), Digital Pedagogy (99.74%), Digital Professionalism (99.46%), and Digital Social Engagement (96.39%), demonstrate remarkable diagnostic precision. The exceptionally high accuracy for Digital Pedagogy (99.74%) suggests items within this dimension possess optimal discriminative properties and minimal attribute overlap, facilitating unambiguous classification. The relatively lower accuracy for Digital Social Engagement (96.39%), while still excellent, may indicate greater construct complexity or potential attribute interdependence requiring further theoretical refinement.

The Q-matrix empirical validation through the Proportion of Variance Accounted For (PVAF) methodology confirmed the theoretical item-attribute specifications for most items (PVAF > 0.90), with only three items receiving modification recommendations. This high level of correspondence between the theoretical cognitive model and empirical response patterns provides critical validity evidence supporting the underlying competency structure. The saturated G-DINA model's superior performance relative to restricted alternatives (DINA, DINO, A-CDM) in likelihood ratio tests justifies flexible attribute interaction modeling rather than imposing restrictive compensatory or conjunctive constraints.
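The PVAF criterion mentioned above can be illustrated for a single item. In the general approach of de la Torre and Chiu, a candidate q-vector is scored by the weighted variance of success probabilities across the latent groups it defines, and PVAF is that variance divided by the variance under the full q-vector. The Python sketch below assumes two attributes, equal class weights, and made-up success probabilities; the 0.90 cutoff is the one quoted in the text, and the function names are illustrative.

```python
def zeta_squared(q, probs, weights):
    """Weighted variance of success probabilities for candidate q-vector q."""
    required = [k for k, flag in enumerate(q) if flag == 1]
    groups = {}
    for profile, w in weights.items():
        key = tuple(profile[k] for k in required)
        g = groups.setdefault(key, [0.0, 0.0])
        g[0] += w                    # total weight of the collapsed group
        g[1] += w * probs[profile]   # weighted sum of success probabilities
    p_bar = sum(wp for _, wp in groups.values())
    return sum(w * (wp / w - p_bar) ** 2 for w, wp in groups.values())

def pvaf(q, probs, weights):
    """Proportion of variance accounted for relative to the full q-vector."""
    full = tuple(1 for _ in q)
    return zeta_squared(q, probs, weights) / zeta_squared(full, probs, weights)

# Hypothetical item (NOT a study item): success depends mainly on attribute 1.
probs = {(0, 0): 0.20, (0, 1): 0.25, (1, 0): 0.90, (1, 1): 0.92}
weights = {profile: 0.25 for profile in probs}

for q in [(1, 0), (0, 1), (1, 1)]:
    print(q, round(pvaf(q, probs, weights), 4))
```

Here the single-attribute specification (1, 0) already accounts for more than 90% of the variance, so it would be retained over the more complex (1, 1) specification.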

Item-level fit evaluation revealed that 87.5% of items (28 of 32) demonstrated excellent fit based on adjusted p-values exceeding 0.05, confirming consistency with model assumptions. This high proportion of well-fitting items indicates appropriate item construction and minimal model-data misfit that could compromise classification validity. The G-DINA framework's capacity to accommodate diverse attribute interaction patterns, including conjunctive, compensatory, and hybrid relationships, proves particularly valuable for modeling complex multidimensional competencies where attributes may interact non-uniformly across items.
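The relationship between the saturated model and its restricted alternatives can be made concrete for an item measuring two attributes. With the identity link, the G-DINA success probability is an intercept plus two main effects plus one interaction; DINA keeps only the intercept and the interaction, A-CDM drops the interaction, and DINO constrains the main effects and interaction so that mastering either attribute is enough. The Python sketch below uses hypothetical parameter values and is an illustration of this standard formulation, not the study's estimates.

```python
import math

def gdina_prob(alpha, d0, d1, d2, d12):
    """Identity-link G-DINA success probability for a two-attribute item."""
    a1, a2 = alpha
    return d0 + d1 * a1 + d2 * a2 + d12 * a1 * a2

profiles = [(0, 0), (1, 0), (0, 1), (1, 1)]

# Hypothetical parameter sets illustrating each restricted model.
models = {
    "DINA (conjunctive)": dict(d0=0.2, d1=0.0, d2=0.0, d12=0.7),
    "DINO (disjunctive)": dict(d0=0.2, d1=0.7, d2=0.7, d12=-0.7),
    "A-CDM (additive)": dict(d0=0.2, d1=0.3, d2=0.4, d12=0.0),
    "G-DINA (saturated)": dict(d0=0.2, d1=0.1, d2=0.3, d12=0.3),
}

for name, delta in models.items():
    row = [round(gdina_prob(a, **delta), 2) for a in profiles]
    print(f"{name:20s}", dict(zip(profiles, row)))
```

Only the saturated parameterization lets all four latent groups have distinct success probabilities without an additive constraint, which is the flexibility the item-level tests favored for many items.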

The model's absolute fit indices (RMSEA₂=0.011, SRMSR=0.060) meet conventional acceptability criteria (RMSEA < 0.05, SRMSR < 0.08), with RMSEA₂ falling well below its threshold, indicating minimal discrepancy between observed response patterns and model-predicted probabilities. This close model-data correspondence provides essential evidence that diagnostic classifications reflect examinees' true attribute mastery states rather than measurement artifacts or model misspecification.

The systematic gender differences in digital competency mastery, with female pre-service teachers consistently demonstrating higher attribute mastery probabilities across all four dimensions, contradict prevalent stereotypes associating males with technology proficiency [94]. This finding aligns with emerging international evidence documenting diminishing or reversed gender gaps in educational technology contexts, particularly within teacher preparation programs [34]. The consistent female advantage across IT Content Knowledge, Digital Pedagogy, Digital Professionalism, and Digital Social Engagement suggests systematic rather than domain-specific competency differences.

Several theoretical explanations may account for observed gender patterns. Female students in teacher preparation programs frequently demonstrate higher academic engagement, more systematic learning approaches, and stronger intrinsic motivation for pedagogical skill development [95][96]. Educational technology research indicates female pre-service teachers often exhibit greater conscientiousness in technology integration coursework and more positive attitudes toward technology-enhanced pedagogy compared to male counterparts [97]. The gender differences were most pronounced in Digital Professionalism and Digital Pedagogy dimensions, suggesting female students may place greater emphasis on professional competency development and pedagogical applications rather than purely technical skill acquisition.

The non-linear competency development trajectory, characterized by unexpected decline at semester three followed by substantial recovery and advancement in upper semesters, reveals critical insights into vocational IT teacher preparation program dynamics. This competency dip at the midpoint of preparation diverges from linear growth assumptions underlying many program evaluation frameworks and highlights the necessity of longitudinal competency monitoring rather than single-point assessment.

Multiple factors may contribute to semester three competency decline. This transition period typically introduces advanced pedagogical coursework, field experience requirements, and increased academic rigor that temporarily challenge students' self-assessed competency and revealed performance. The Dunning-Kruger effect suggests that initial confidence in early program stages, based on limited knowledge, may give way to more accurate self-assessment and performance as students encounter complex authentic teaching challenges [98]. Additionally, semester three often represents peak cognitive load as students simultaneously manage advanced coursework, initial practicum experiences, and specialized content area requirements.

The substantial competency recovery and advancement observed in semesters five and seven indicates effective program scaffolding and support systems enabling students to overcome mid-program challenges. This resilience pattern suggests that temporary competency plateaus or declines do not necessarily predict ultimate preparation outcomes but rather represent normative developmental stages requiring targeted support. Programs demonstrating this recovery pattern likely possess effective advising systems, peer support networks, and instructional scaffolding facilitating competency consolidation following challenging transition periods.

These findings suggest that strategically timed intervention at semester three could prevent or mitigate competency decline and maintain continuous development trajectories. Potential interventions include enhanced advising and mentoring support during transition periods, structured peer collaboration facilitating knowledge consolidation, carefully sequenced coursework managing cognitive load, and explicit competency monitoring with early warning systems identifying at-risk students. Rather than viewing the semester three decline as program failure, institutions should recognize this pattern as a developmental opportunity requiring proactive support structures [99].

Figure 4. Digital Competence by Gender

Figure 5. Digital Competence by Semester

- CONCLUSIONS

This study successfully developed and validated a comprehensive diagnostic assessment instrument for profiling digital competency of prospective vocational IT educators using the Generalized DINA model. The rigorous psychometric validation through multiple complementary approaches established robust evidence for instrument quality, with excellent reliability, strong structural validity, and superior item discrimination. The G-DINA model demonstrated exceptional classification accuracy, surpassing typical benchmarks and enabling confident diagnostic inference for educational decision-making.

Systematic competency profiling revealed important patterns with significant implications for program development. Female pre-service teachers consistently demonstrated superior attribute mastery across all competency dimensions, contradicting prevalent technology proficiency stereotypes and suggesting effective program practices worthy of investigation and broader implementation. The non-linear development trajectory, characterized by semester three competency decline followed by substantial upper-semester recovery, identified critical transition points requiring targeted institutional support.

Dimensional analysis highlighted both program strengths and improvement opportunities. Digital Pedagogy's consistently high mastery validates existing pedagogical training effectiveness, while IT Content Knowledge variability and Digital Social Engagement inconsistency indicate domains requiring enhanced curricular attention. Despite the robust psychometric validation, this study acknowledges several limitations that warrant consideration. First, the purposive sampling resulted in an uneven distribution regarding gender (70.7% male) and academic level (predominantly Semester 3), which reflects the current demographics of the study program but requires caution when generalizing findings to balanced populations. Second, the cross-sectional design effectively identified competency differences across semesters but cannot fully isolate cohort effects from developmental changes; thus, future research should prioritize longitudinal tracking to validate the observed trajectory. Finally, while the classification accuracy was high, further investigations incorporating Differential Item Functioning (DIF) analysis are recommended to rigorously rule out potential measurement bias across demographic subgroups. These findings generate evidence-based recommendations including strengthened foundational technical instruction, structured semester-three transition support, explicit digital social engagement integration, and continuous diagnostic monitoring enabling early intervention.

DECLARATION

Author Contribution

INIW contributed to conceptualization, methodology development, instrument design, data collection, psychometric analysis, interpretation of results, and manuscript preparation. SH provided research supervision, methodological guidance, theoretical framework development, critical manuscript review, and validation of analytical approaches. MK contributed research supervision, psychometric expertise, cognitive diagnostic modeling guidance, critical review of findings, and manuscript refinement. SF provided theoretical consultation, literature review support, discussion of findings, and manuscript review. All authors have read and approved the final manuscript.

Data and Instrument Availability

The validated assessment instrument and the datasets generated during the current study are available from the corresponding author on reasonable request for research and replication purposes.

REFERENCES

- J. Haryanto, “Indonesia: Advancing Southeast Asia's Largest Digital Economy,” in The ASEAN Digital Economy, pp. 42-75, 2023, https://doi.org/10.4324/9781003308751-4.

- S. C. Vetrivel and T. Mohanasundaram, “Building a Tech-Savvy Workforce: Re-Skilling Strategies for Success,” in Reskilling the Workforce for Technological Advancement, pp. 18-40, 2024, https://doi.org/10.4018/979-8-3693-0612-3.ch002.

- A. A. Sihombing and M. Fatra, “Emergency of Indonesian Education System: Conventional, Vulnerable to Crisis, and Ignorant to Digital Learning,” in Resilient and Sustainable Education Futures: Insights from Malaysia and Indonesia's COVID-19 Experience, pp. 183-197, 2025, https://doi.org/10.1007/978-981-96-4971-6_14.

- S. Mishra and P. K. Misra, “Open, Distance, and Digital Non-formal Education in Developing Countries,” in Handbook of Open, Distance and Digital Education, pp. 1-17, 2022, https://doi.org/10.1007/978-981-19-0351-9_21-1.

- F. Caena and C. Redecker, “Aligning teacher competence frameworks to 21st century challenges: The case for the European Digital Competence Framework for Educators (Digcompedu),” European journal of education, vol. 54, no. 3, pp. 356-369, 2019, https://doi.org/10.1111/ejed.12345.

- G. Falloon, “From digital literacy to digital competence: the teacher digital competency (TDC) framework,” Education Tech Research Dev, vol. 68, no. 5, pp. 2449–2472, 2020, https://doi.org/10.1007/s11423-020-09767-4.

- F. Caena and C. Redecker, “Aligning teacher competence frameworks to 21st century challenges: The case for the European Digital Competence Framework for Educators (DigCompEdu),” Euro J of Education, vol. 54, no. 3, pp. 356–369, Sep. 2019, https://doi.org/10.1111/ejed.12345.

- R. Alarcón, E. Del Pilar Jiménez, and M. I. de Vicente‐Yagüe, “Development and validation of the DIGIGLO, a tool for assessing the digital competence of educators,” British Journal of Educational Technology, vol. 51, no. 6, pp. 2407-2421, 2020, https://doi.org/10.1111/bjet.12919.

- E. J. Instefjord and E. Munthe, “Educating digitally competent teachers: A study of integration of professional digital competence in teacher education,” Teaching and Teacher Education, vol. 67, pp. 37–45, Oct. 2017, https://doi.org/10.1016/j.tate.2017.05.016.

- F. D. Guillén-Gámez, M. J. Mayorga-Fernández, J. Bravo-Agapito, and D. Escribano-Ortiz, “Analysis of Teachers’ Pedagogical Digital Competence: Identification of Factors Predicting Their Acquisition,” Tech Know Learn, vol. 26, no. 3, pp. 481–498, Sep. 2021, https://doi.org/10.1007/s10758-019-09432-7.

- O. E. Hatlevik, “Examining the Relationship between Teachers’ Self-Efficacy, their Digital Competence, Strategies to Evaluate Information, and use of ICT at School,” Scandinavian Journal of Educational Research, vol. 61, no. 5, pp. 555–567, 2017, https://doi.org/10.1080/00313831.2016.1172501.

- H. Hidayat, S. Herawati, E. Syahmaidi, A. Hidayati, and Z. Ardi, “Digital literacy of vocational high school teachers in Indonesia,” Journal of Technical Education and Training, vol. 14, no. 1, pp. 107–118, 2022, https://doi.org/10.30880/jtet.2022.14.01.010.

- M. Nurtanto, N. Kholifah, A. Masek, P. Sudira, and A. Samsudin, “Digital literacy of Indonesian teachers in vocational education: Comparing between western and eastern Indonesia,” International Journal of Interactive Mobile Technologies, vol. 15, no. 4, pp. 143–158, 2021, https://doi.org/10.3991/ijim.v15i04.18163.

- L. Xin, G. Zhao, B. Wu, Y. Wang, and C. Stamov-Roßnagel, “Development and validation of a digital competence scale for Chinese TVET teachers,” PLOS ONE, vol. 19, no. 9, p. e0309494, 2024, https://doi.org/10.1371/journal.pone.0309494.

- OECD, OECD Skills Outlook 2023: Skills for a Resilient Green and Digital Transition. OECD Publishing, 2023, https://doi.org/10.1787/27452f29-en.

- A. A. P. Cattaneo, C. Antonietti, and M. Rauseo, “How digitalised are vocational teachers? Assessing digital competence in vocational education and looking at its underlying factors,” Computers & Education, vol. 176, p. 104358, 2022, https://doi.org/10.1016/j.compedu.2021.104358.

- Y. Zhao, A. M. Pinto Llorente, and M. C. Sánchez Gómez, “Digital competence in higher education research: A systematic literature review,” Computers & Education, vol. 168, p. 104212, 2021, https://doi.org/10.1016/j.compedu.2021.104212.

- F. Pettersson, “On the issues of digital competence in educational contexts – a review of literature,” Educ Inf Technol, vol. 23, no. 3, pp. 1005–1021, 2018, https://doi.org/10.1007/s10639-017-9649-3.

- M. Lucas, P. Bem-Haja, F. Siddiq, A. Moreira, and C. Redecker, “The relation between in-service teachers’ digital competence and personal and contextual factors: What matters most?,” Computers & Education, vol. 160, p. 104052, 2021, https://doi.org/10.1016/j.compedu.2020.104052.

- J. Portillo, U. Garay, E. Tejada, and N. Bilbao, “Self-Perception of the Digital Competence of Educators during the COVID-19 Pandemic: A Cross-Analysis of Different Educational Stages,” Sustainability, vol. 12, no. 23, p. 10128, 2020, https://doi.org/10.3390/su122310128.

- M. Ferjan and M. Bernik, “Digital Competencies in Formal and Hidden Curriculum,” Organizacija, vol. 57, no. 3, pp. 261–273, 2024, https://doi.org/10.2478/orga-2024-0019.

- V. Patwardhan, J. Mallya, R. Shedbalkar, S. Srivastava, and K. Bolar, “Students’ Digital Competence and Perceived Learning: The mediating role of Learner Agility,” F1000Res, vol. 11, p. 1038, 2023, https://doi.org/10.12688/f1000research.124884.2.

- F. Albejaidi, “The Mediating Role Of Ethical Climate Between Organizational Justice And Stress: A Cb-Sem Analysis,” JASEM, vol. 5, no. 1, 2021, https://doi.org/10.47263/JASEM.5(1)04.

- F. Gabriel, R. Marrone, Y. Van Sebille, V. Kovanovic, and M. de Laat, “Digital education strategies around the world: practices and policies,” Irish Educational Studies, vol. 41, no. 1, pp. 85-106, 2022, https://doi.org/10.1080/03323315.2021.2022513.

- F. Caena and C. Redecker, “Aligning teacher competence frameworks to 21st century challenges: The case for the European Digital Competence Framework for Educators (Digcompedu),” European journal of education, vol. 54, no. 3, pp. 356-369, 2019, https://doi.org/10.1111/ejed.12345.

- J. Mattar, D. K. Ramos, and M. R. Lucas, “DigComp-based digital competence assessment tools: Literature review and instrument analysis,” Education and Information Technologies, vol. 27, no. 8, pp. 10843-10867, 2022, https://doi.org/10.1007/s10639-022-11034-3.

- P. Svoboda, “Digital Competencies and Artificial Intelligence for Education: Transformation of the Education System,” Int Adv Econ Res, vol. 30, no. 2, pp. 227–230, 2024, https://doi.org/10.1007/s11294-024-09896-z.

- J. Geng, M. S.-Y. Jong, and C. S. Chai, “Hong Kong Teachers’ Self-efficacy and Concerns About STEM Education,” Asia-Pacific Edu Res, vol. 28, no. 1, pp. 35–45, 2019, https://doi.org/10.1007/s40299-018-0414-1.

- E. Flores, X. Xu, and Y. Lu, “Human Capital 4.0: a workforce competence typology for Industry 4.0,” Journal of Manufacturing Technology Management, vol. 31, no. 4, pp. 687-703, 2020, https://doi.org/10.1108/JMTM-08-2019-0309.

- R. Hämäläinen, B. De Wever, K. Nissinen, and S. Cincinnato, “What makes the difference – PIAAC as a resource for understanding the problem-solving skills of Europe’s higher-education adults,” Computers & Education, vol. 129, pp. 27–36, 2019, https://doi.org/10.1016/j.compedu.2018.10.013.

- L. K. J. Baartman and E. De Bruijn, “Integrating knowledge, skills and attitudes: Conceptualising learning processes towards vocational competence,” Educational Research Review, vol. 6, no. 2, pp. 125–134, 2011, https://doi.org/10.1016/j.edurev.2011.03.001.

- M. J. Koehler, P. Mishra, and W. Cain, “What is Technological Pedagogical Content Knowledge (TPACK)?,” Journal of Education, vol. 193, no. 3, pp. 13–19, 2013, https://doi.org/10.1177/002205741319300303.

- T. Valtonen, E. Sointu, J. Kukkonen, S. Kontkanen, M. C. Lambert, and K. Mäkitalo-Siegl, “TPACK updated to measure pre-service teachers’ twenty-first century skills,” AJET, vol. 33, no. 3, 2017, https://doi.org/10.14742/ajet.3518.

- J. Tondeur, R. Scherer, F. Siddiq, and E. Baran, “A comprehensive investigation of TPACK within pre-service teachers’ ICT profiles: Mind the gap!,” AJET, vol. 33, no. 3, 2017, https://doi.org/10.14742/ajet.3504.

- M. Schmid, E. Brianza, and D. Petko, “Developing a short assessment instrument for Technological Pedagogical Content Knowledge (TPACK.xs) and comparing the factor structure of an integrative and a transformative model,” Computers & Education, vol. 157, p. 103967, 2020, https://doi.org/10.1016/j.compedu.2020.103967.

- R. J. Krumsvik, “Teacher educators’ digital competence,” Scandinavian Journal of Educational Research, vol. 58, no. 3, pp. 269–280, May 2014, https://doi.org/10.1080/00313831.2012.726273.

- O. Erstad, S. Kjällander, and S. Järvelä, “Facing the challenges of ‘digital competence’ a Nordic agenda for curriculum development for the 21st century,” Nordic Journal of Digital Literacy, vol. 16, no. 2, pp. 77-87, 2021, https://doi.org/10.18261/issn.1891-943x-2021-02-04.

- H. Amin and M. S. Mirza, “Comparative study of knowledge and use of Bloom's digital taxonomy by teachers and students in virtual and conventional universities,” Asian Association of Open Universities Journal, vol. 15, no. 2, pp. 223-238, 2020, https://doi.org/10.1108/AAOUJ-01-2020-0005.

- I. M. Arievitch, “Reprint of: The vision of Developmental Teaching and Learning and Bloom's Taxonomy of educational objectives,” Learning, culture and social interaction, vol. 27, p. 100473, 2020, https://doi.org/10.1016/j.lcsi.2020.100473.

- P. P. Noushad, “Taxonomies of educational objectives,” in Designing and Implementing the Outcome-Based Education Framework: Theory and Practice, pp. 43-82, 2024, https://doi.org/10.1007/978-981-96-0440-1_2.

- M. Pavlova, Technology and Vocational Education for Sustainable Development: Empowering Individuals for the Future, in Technical and Vocational Education and Training: Issues, Concerns and Prospects, vol. 10, 2009, https://doi.org/10.1007/978-1-4020-5279-8.

- M. Pavlova, “Knowledge and values in technology education,” International Journal of Technology and Design Education, vol. 15, no. 2, pp. 127–147, 2005, https://doi.org/10.1007/s10798-005-8280-6.

- O. E. Hatlevik and K.-A. Christophersen, “Digital competence at the beginning of upper secondary school: Identifying factors explaining digital inclusion,” Computers & Education, vol. 63, pp. 240–247, 2013, https://doi.org/10.1016/j.compedu.2012.11.015.

- D. Masoumi and O. Noroozi, “Developing early career teachers’ professional digital competence: a systematic literature review,” European Journal of Teacher Education, vol. 48, no. 3, pp. 644–666, 2025, https://doi.org/10.1080/02619768.2023.2229006.

- Y. Zhao, M. C. Sánchez-Gómez, A. M. Pinto-Llorente, and R. Sánchez Prieto, “Adapting to crisis and unveiling the digital shift: a systematic literature review of digital competence in education related to COVID-19,” Front. Educ., vol. 10, p. 1541475, 2025, https://doi.org/10.3389/feduc.2025.1541475.

- L. Starkey, “A review of research exploring teacher preparation for the digital age,” Cambridge Journal of Education, vol. 50, no. 1, pp. 37–56, 2020, https://doi.org/10.1080/0305764X.2019.1625867.

- K. Drossel, B. Eickelmann, and J. Gerick, “Predictors of teachers’ use of ICT in school – the relevance of school characteristics, teachers’ attitudes and teacher collaboration,” Educ Inf Technol, vol. 22, no. 2, pp. 551–573, 2017, https://doi.org/10.1007/s10639-016-9476-y.

- W. Admiraal et al., “Preparing pre-service teachers to integrate technology into K–12 instruction: evaluation of a technology-infused approach,” Technology, Pedagogy and Education, vol. 26, no. 1, pp. 105–120, 2017, https://doi.org/10.1080/1475939X.2016.1163283.

- R. Scherer, F. Siddiq, and J. Tondeur, “The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education,” Computers & Education, vol. 128, pp. 13–35, 2019, https://doi.org/10.1016/j.compedu.2018.09.009.

- T. Teo, “Unpacking teachers’ acceptance of technology: Tests of measurement invariance and latent mean differences,” Computers & Education, vol. 75, pp. 127–135, 2014, https://doi.org/10.1016/j.compedu.2014.01.014.

- M. Taimalu and P. Luik, “The impact of beliefs and knowledge on the integration of technology among teacher educators: A path analysis,” Teaching and Teacher Education, vol. 79, pp. 101–110, 2019, https://doi.org/10.1016/j.tate.2018.12.012.

- Y. Xin, Y. Tang, and X. Mou, “An empirical study on the evaluation and influencing factors of digital competence of Chinese teachers for TVET,” PLoS ONE, vol. 19, no. 9, p. e0310187, 2024, https://doi.org/10.1371/journal.pone.0310187.

- S. Hoidn and V. Šťastný, “Labour market success of initial vocational education and training graduates: A comparative study of three education systems in Central Europe,” Journal of Vocational Education & Training, vol. 75, no. 4, pp. 629–653, 2023, https://doi.org/10.1080/13636820.2021.1931946.

- L. Mordhorst and T. Jenert, “Curricular integration of academic and vocational education: a theory-based empirical typology of dual study programmes in Germany,” Higher Education, vol. 85, no. 6, pp. 1257–1279, 2023, https://doi.org/10.1007/s10734-022-00889-7.

- E. Wenger, Communities of practice: Learning, meaning, and identity. Cambridge: Cambridge University Press, 1998. https://doi.org/10.1017/CBO9780511803932.

- J. Lave and E. Wenger, Situated learning: Legitimate peripheral participation. Cambridge: Cambridge University Press, 1991. https://doi.org/10.1017/CBO9780511815355.

- T. Trust, D. G. Krutka, and J. P. Carpenter, “‘Together we are better’: Professional learning networks for teachers,” Computers & Education, vol. 102, pp. 15–34, 2016, https://doi.org/10.1016/j.compedu.2016.06.007.

- Anushka, A. Bandopadhyay, and P. K. Das, “Paper based microfluidic devices: a review of fabrication techniques and applications,” The European Physical Journal Special Topics, vol. 232, no. 6, pp. 781–815, 2023, https://doi.org/10.1140/epjs/s11734-022-00727-y.

- J. de la Torre and N. Minchen, “Cognitively Diagnostic Assessments and the Cognitive Diagnosis Model Framework,” Psicología Educativa, vol. 20, no. 2, pp. 89–97, 2014, https://doi.org/10.1016/j.pse.2014.11.001.

- L. Bradshaw and J. Templin, “Combining Item Response Theory and Diagnostic Classification Models: A Psychometric Model for Scaling Ability and Diagnosing Misconceptions,” Psychometrika, vol. 79, no. 3, pp. 403–425, 2014, https://doi.org/10.1007/s11336-013-9350-4.

- J. Sessoms and R. A. Henson, “Applications of Diagnostic Classification Models: A Literature Review and Critical Commentary,” Measurement: Interdisciplinary Research and Perspectives, vol. 16, no. 1, pp. 1–17, 2018, https://doi.org/10.1080/15366367.2018.1435104.

- A. Huebner and C. Wang, “A Note on Comparing Examinee Classification Methods for Cognitive Diagnosis Models,” Educational and Psychological Measurement, vol. 71, no. 2, pp. 407–419, 2011, https://doi.org/10.1177/0013164410388832.

- J. P. Leighton, M. J. Gierl, and S. M. Hunka, “The Attribute Hierarchy Method for Cognitive Assessment: A Variation on Tatsuoka’s Rule‐Space Approach,” Journal of Educational Measurement, vol. 41, no. 3, pp. 205–237, 2004, https://doi.org/10.1111/j.1745-3984.2004.tb01163.x.

- J. Liu, G. Xu, and Z. Ying, “Data-Driven Learning of Q-Matrix,” Applied Psychological Measurement, vol. 36, no. 7, pp. 548–564, 2012, https://doi.org/10.1177/0146621612456591.

- J. de la Torre, “The Generalized DINA Model Framework,” Psychometrika, vol. 76, no. 2, pp. 179–199, 2011, https://doi.org/10.1007/s11336-011-9207-7.

- P. Nájera, F. J. Abad, C.-Y. Chiu, and M. A. Sorrel, “The Restricted DINA Model: A Comprehensive Cognitive Diagnostic Model for Classroom-Level Assessments,” Journal of Educational and Behavioral Statistics, vol. 48, no. 6, pp. 719–749, 2023, https://doi.org/10.3102/10769986231158829.

- W. Ma and J. de la Torre, “GDINA: An R Package for Cognitive Diagnosis Modeling,” Journal of Statistical Software, vol. 93, no. 14, pp. 1–26, 2020, https://doi.org/10.18637/jss.v093.i14.

- A. A. Rupp and P. W. van Rijn, “GDINA and CDM Packages in R,” Measurement: Interdisciplinary Research and Perspectives, vol. 16, no. 1, pp. 71–77, 2018, https://doi.org/10.1080/15366367.2018.1437243.

- A. C. George, A. Robitzsch, T. Kiefer, J. Groß, and A. Ünlü, “The R Package CDM for Cognitive Diagnosis Models,” Journal of Statistical Software, vol. 74, no. 2, 2016, https://doi.org/10.18637/jss.v074.i02.

- H. Ravand and P. Baghaei, “Diagnostic Classification Models: Recent Developments, Practical Issues, and Prospects,” International Journal of Testing, vol. 20, no. 1, pp. 24–56, 2020, https://doi.org/10.1080/15305058.2019.1588278.

- P. Nájera, F. J. Abad, and M. A. Sorrel, “Determining the Number of Attributes in Cognitive Diagnosis Modeling,” Front. Psychol., vol. 12, p. 614470, 2021, https://doi.org/10.3389/fpsyg.2021.614470.

- M. Hansen, L. Cai, S. Monroe, and Z. Li, “Limited‐information goodness‐of‐fit testing of diagnostic classification item response models,” British Journal of Mathematical and Statistical Psychology, vol. 69, no. 3, pp. 225–252, 2016, https://doi.org/10.1111/bmsp.12074.

- S. Sen and A. S. Cohen, “Sample Size Requirements for Applying Diagnostic Classification Models,” Front. Psychol., vol. 11, p. 621251, 2021, https://doi.org/10.3389/fpsyg.2020.621251.

- W. Ma, C. Iaconangelo, and J. de la Torre, “Model Similarity, Model Selection, and Attribute Classification,” Applied Psychological Measurement, vol. 40, no. 3, pp. 200–217, 2016, https://doi.org/10.1177/0146621615621717.

- Y. Cui and J. Li, “Evaluating Person Fit for Cognitive Diagnostic Assessment,” Applied Psychological Measurement, vol. 39, no. 3, pp. 223–238, 2015, https://doi.org/10.1177/0146621614557272.

- M. A. Sorrel, F. J. Abad, J. Olea, J. de la Torre, and J. R. Barrada, “Inferential Item-Fit Evaluation in Cognitive Diagnosis Modeling,” Applied Psychological Measurement, vol. 41, no. 8, pp. 614–631, 2017, https://doi.org/10.1177/0146621617707510.

- J. Sessoms and R. A. Henson, “Applications of Diagnostic Classification Models: A Literature Review and Critical Commentary,” Measurement: Interdisciplinary Research and Perspectives, vol. 16, no. 1, pp. 1–17, 2018, https://doi.org/10.1080/15366367.2018.1435104.

- M. K. Aydin, T. Yildirim, and M. Kus, “Teachers’ digital competences: a scale construction and validation study,” Front. Psychol., vol. 15, p. 1356573, 2024, https://doi.org/10.3389/fpsyg.2024.1356573.

- O. Diachuk, “Development of digital competence of teachers in vocational education institutions,” Scientia et Societus, vol. 3, no. 1, pp. 77–91, 2024, https://doi.org/10.69587/ss/1.2024.77.

- L. A. T. Nguyen and A. Habók, “Tools for assessing teacher digital literacy: a review,” J. Comput. Educ., vol. 11, no. 1, pp. 305–346, 2024, https://doi.org/10.1007/s40692-022-00257-5.

- M. J. J. Roll and D. Ifenthaler, “Multidisciplinary digital competencies of pre-service vocational teachers,” Empirical Res Voc Ed Train, vol. 13, no. 1, p. 7, 2021, https://doi.org/10.1186/s40461-021-00112-4.

- A. Yue, “From digital literacy to digital citizenship: policies, assessment frameworks and programs for young people in the Asia Pacific,” in Media in Asia, pp. 181–194, 2022, https://doi.org/10.4324/9781003130628-14.

- F. Wang et al., “NeuralCD: A General Framework for Cognitive Diagnosis,” IEEE Transactions on Knowledge and Data Engineering, vol. 35, no. 8, pp. 8312–8327, 2023, https://doi.org/10.1109/TKDE.2022.3201037.

- J. L. Templin and L. Bradshaw, “Measuring the reliability of diagnostic classification model examinee estimates,” Journal of Classification, vol. 30, no. 2, pp. 251–275, 2013, https://doi.org/10.1007/s00357-013-9129-4.

- W. Ma, N. Minchen, and J. de la Torre, “Choosing between CDM and unidimensional IRT: The proportional reasoning test case,” Measurement: Interdisciplinary Research and Perspectives, vol. 18, no. 2, pp. 87–96, 2020, https://doi.org/10.1080/15366367.2019.1697122.

- P. Nájera, M. A. Sorrel, J. de la Torre, and F. J. Abad, “Balancing fit and parsimony to improve Q-matrix validation,” British Journal of Mathematical and Statistical Psychology, vol. 74, pp. 110–130, 2021, https://doi.org/10.1111/bmsp.12228.

- W. Ma and Z. Jiang, “Estimating Cognitive Diagnosis Models in Small Samples: Bayes Modal Estimation and Monotonic Constraints,” Applied Psychological Measurement, vol. 45, no. 2, pp. 95–111, 2021, https://doi.org/10.1177/0146621620977681.

- A. Gutiérrez-Martín, R. Pinedo-González, and C. Gil-Puente, “ICT and media competencies of teachers. Convergence towards an integrated MIL-ICT model,” Comunicar: Media Education Research Journal, vol. 30, no. 70, pp. 19–30, 2022, https://doi.org/10.3916/C70-2022-02.

- H. Crompton, “Evidence of the ISTE Standards for Educators leading to learning gains,” Journal of Digital Learning in Teacher Education, vol. 39, no. 4, pp. 201–219, 2023, https://doi.org/10.1080/21532974.2023.2244089.

- L. R. Aiken, “Content Validity and Reliability of Single Items or Questionnaires,” Educational and Psychological Measurement, vol. 40, no. 4, pp. 955–959, 1980, https://doi.org/10.1177/001316448004000419.

- L. Hu and P. M. Bentler, “Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives,” Structural Equation Modeling: A Multidisciplinary Journal, vol. 6, no. 1, pp. 1–55, 1999, https://doi.org/10.1080/10705519909540118.

- R. B. Kline, Beyond significance testing: Statistics reform in the behavioral sciences (2nd ed.). Washington: American Psychological Association, 2013. https://doi.org/10.1037/14136-000.

- M. T. Kane, “Validating the Interpretations and Uses of Test Scores,” Journal of Educational Measurement, vol. 50, no. 1, pp. 1–73, 2013, https://doi.org/10.1111/jedm.12000.

- M. Balducci, “Linking gender differences with gender equality: A systematic-narrative literature review of basic skills and personality,” Frontiers in Psychology, vol. 14, p. 1105234, 2023, https://doi.org/10.3389/fpsyg.2023.1105234.

- D. Voyer and S. D. Voyer, “Gender differences in scholastic achievement: A meta-analysis,” Psychological Bulletin, vol. 140, no. 4, pp. 1174–1204, 2014, https://doi.org/10.1037/a0036620.

- A. J. Martin, “Examining a multidimensional model of student motivation and engagement using a construct validation approach,” British Journal of Educational Psychology, vol. 77, no. 2, pp. 413–440, 2007, https://doi.org/10.1348/000709906X118036.

- V. Venkatesh and H. Bala, “Technology Acceptance Model 3 and a Research Agenda on Interventions,” Decision Sciences, vol. 39, no. 2, pp. 273–315, 2008, https://doi.org/10.1111/j.1540-5915.2008.00192.x.

- J. Kruger and D. Dunning, “Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments,” Journal of Personality and Social Psychology, vol. 77, no. 6, pp. 1121–1134, 1999, https://doi.org/10.1037/0022-3514.77.6.1121.

- Q. Huang, X. Wang, Y. Ge, and D. Cai, “Relationship between self-efficacy, social rhythm, and mental health among college students: a 3-year longitudinal study,” Current Psychology, vol. 42, no. 11, pp. 9053–9062, 2023, https://doi.org/10.1007/s12144-021-02160-1.
