ISSN: 2685-9572 Buletin Ilmiah Sarjana Teknik Elektro

Vol. 7, No. 3, September 2025, pp. 496-508

Lightweight 1D CNN for Unified Real-Time Communication Signal Classification and Denoising in Low-SNR Edge Environments

Mohammed Jasim A. Alkhafaji 1, Rusul Nadhim Mohammed 2, Hanan Chassab Mohammed 3,

Mohammed Albaker Najm Abed 4

1,4 Department of Computer Technology Engineering, Al Taff University College, Karbala, 56001, Iraq

2 College of Education for pure Sciences, Karbala University, Karbala, Iraq

3 Department of Radiologic Technology, College of Health and Medical Techniques Faculty,

Al-Zahraa University for Women, Karbala, Iraq

ARTICLE INFORMATION |

| ABSTRACT |

Article History: Received 25 May 2025 Revised 11 July 2025 Accepted 14 September 2025 |

|

The escalating complexity and pervasive noise in contemporary communication systems have increasingly rendered traditional signal processing methods insufficient for reliable real-time analysis. This research addresses a fundamental void in existing literature by proposing a novel and lightweight deep learning framework, primarily centered on Convolutional Neural Networks (CNNs) for the joint classification and denoising of communication signals. Distinct from prior methodologies that often segregate these crucial tasks our model integrates both objectives within a highly optimized, unified architecture engineered for ultra-low-latency inference, notably achieving a 30–50% reduction in inference time compared to deeper CNN-RNN hybrids or Transformer-based architectures. The framework's effectiveness was comprehensively evaluated using both synthetic and real-world datasets, including RadioML2018.01A which encompasses a diverse range of modulation schemes and signal-to-noise ratio (SNR) levels. Experimental results conclusively demonstrate that the proposed CNN achieved an impressive 96.8% classification accuracy significantly enhanced signal quality to an average of 22.3 dB SNR, and maintained an average processing latency of merely 11.3 ms. These figures consistently demonstrate superior performance compared to traditional baselines including FFT, SVM, and LSTM. Despite these promising results, the current model was primarily trained and evaluated under Additive White Gaussian Noise (AWGN) conditions, and future work will explore its generalization to real-world scenarios involving multipath fading, Doppler shifts, and dynamic channel interference. This study represents a significant leap forward in developing robust, efficient, and intelligent solutions essential for next-generation communication signal processing, particularly for real-time applications in resource-constrained environments. |

Keywords: Communication Signal Classificationk; Low-Latency Inference; Automatic Modulation Classification; Processing Time; Cognitive Radio Systems |

Corresponding Author: Mohammed Albaker Najm Abed, Department of Computer Technology Engineering, Al Taff University College, Karbala, 56001, Iraq. Email: eng.mohammed.iq99@gmail.com |

This work is open access under a Creative Commons Attribution-Share Alike 4.0

|

Document Citation: M. J. A. Alkhafaji, R. N. Mohammed, H. C. Mohammed, and M. A. N. Abed, “Lightweight 1D CNN for Unified Real-Time Communication Signal Classification and Denoising in Low-SNR Edge Environments,” Buletin Ilmiah Sarjana Teknik Elektro, vol. 7, no. 3, pp. 496-508, 2025, DOI: 10.12928/biste.v7i3.13789. |

- INTRODUCTION

The fusion of artificial intelligence (AI) and telecommunications has opened new frontiers in wireless communication, including spectrum sensing, modulation recognition, channel estimation, and anomaly detection. However, as the volume and complexity of transmitted signals grow, conventional signal processing methods fall short in handling noise and extracting meaningful patterns in real time. This research addresses a fundamental gap in the literature: the lack of a unified, high-performance AI model capable of robust classification and denoising of real-world signals under low signal-to-noise ratio (SNR) conditions [1]-[3].

Instead of depending on the long-term analysis of the signal delivered with various sample windows, the weight modification can alternatively be carried out in an unconventional way [4]-[6]. This capability is functional when establishing a communication link between base stations (access points) in a secluded channel environment, i.e., when several base stations monitor a portable device within a given synchronization window [7]. Moreover, the processed AI can be quickly transferred to portable devices., enabling them to function equally [8]. Protecting these systems can also be efficiently handled by the AI of the wireless systems [9]-[11]. With the increasing dependence on communication through radio transmissions, there is also an increase in pressure on bandwidth, whichs often required for an efficient allocation of this natural resource [12][13]. Therefore, efforts are focusing on the development of innovative or cognitive radio systems, which can analyze their available resources and then adapt their transmission and information acquisition methods [14]-[16].

Our primary contribution is the development and evaluation of a novel CNN-based framework designed explicitly for noisy and dynamic communication environments. Unlike prior work, which often isolates tasks like denoising or modulation classification, our model performs both jointly, enabling enhanced end-to-end performance. The potential limitations of integrating CNNs in this context revolve primarily around their generalizability and the scope of their evaluation [16]. While the proposed model demonstrates strong performance, its current evaluation was primarily conducted under Additive White Gaussian Noise (AWGN) conditions. This means its effectiveness in real-world scenarios, which frequently involve more complex phenomena such as multipath fading, Doppler shifts, and dynamic channel interference, has not yet been fully addressed. Future research is needed to extend evaluations to these challenging environments and validate the model's generalizability under such diverse and unpredictable noise profiles. Additionally, while the framework is designed to be lightweight and has shown promise for deployment on certain embedded systems like NVIDIA Jetson or Xavier, its computational scalability on more ultra-resource-constrained devices or its robustness across a wider range of hardware specifications remains an area for further investigation and in-field testing. The research also does not explicitly detail the model's sensitivity or performance when encountering.

- RELATED WORK AND RESEARCH GAP

While AI applications in signal processing have been explored in areas such as cognitive radio and spectrum management, few works have demonstrated holistic solutions combining classification, noise mitigation, and real-time feasibility. Prior studies using FFT and SVM-based methods have offered limited generalization in real-world noisy settings. LSTM models improve temporal sensitivity but often suffer from high latency and overfitting [17][18]. While convolutional neural networks (CNNs) have gained attention for signal classification, most existing research isolates classification and denoising into separate stages. Furthermore, many deep learning models are computationally heavy, which limits their practical use in latency-sensitive systems. A critical gap remains in designing unified architectures that offer both noise robustness and real-time performance [19]-[21]. This study addresses this gap by introducing a lightweight CNN model that performs joint denoising and classification, optimized for low-latency inference on both desktop GPUs and edge AI platforms [18],[22][23]. Our work differentiates itself by integrating denoising and classification within a convolutional architecture optimized for parallel processing on GPUs. This enables deployment in edge environments, offering a practical, low-latency solution for next-generation networks, including 5G and beyond [1],[3],[24].

- FUNDAMENTALS

In recent years, there has been significant interest and advancement in AI, particularly in machine learning (ML) techniques, across a wide range of applications. Signal processing is no exception, with several AI-based solutions outperforming traditional approaches due to the data-driven capabilities of AI. In telecommunication, AI has been employed to develop toolboxes that can analyze communication signals [1]. Similarly, the remarkable success of deep learning (DL) techniques has motivated researchers to investigate many innovative applications. In the context of artificial intelligence (AI), machine learning (ML) is a key research field because it enables intelligent capabilities without requiring hardcoding. Over the past decade, remarkable advancements in AI have enabled the development of applications such as autonomous vehicle systems, computer vision, voice recognition, and numerous radio signal processing applications. Moreover, the exceptional combination of machine learning (ML) with deep learning (DL), natural language processing (NLP), and reinforcement learning (RL) can provide highly intelligent capabilities. Thus, many radio-related tasks can expect better performance than human research can provide. The objective of this paper is to present a survey of state-of-the-art AI solutions for the intelligent processing of radio signals, which can directly analyze the communication features (modulation, coding, etc.) of signals and data [5][6].

- Proposed CNN Architecture and Joint Optimization Mechanism

This section should be extended to clearly articulate the design rationale behind the chosen Convolutional Neural Network architecture. It needs to explain not just the components (e.g., three 1D convolutional layers with ReLU, batch normalization, dropout, flattening, dense layers) but also why these specific elements and their configuration were selected. A detailed explanation must be provided on how the convolutional layers contribute synergistically to both denoising and classification. For instance, early layers could be described as primarily focusing on extracting robust features by suppressing noise and learning local patterns within the signal while subsequent layers progressively refine these features to enable effective modulation classification. The discussion should highlight how the shared feature learning across these layers inherently promotes a unified optimization, allowing the network to simultaneously learn to reconstruct clean signals and identify their modulation types from potentially noisy inputs. This integrated approach distinguishes the proposed model from cascaded systems by ensuring that the features learned for denoising also directly benefit classification, and vice versa.

- Joint Loss Function Design, Justification, and Ablation Study

This subsection must offer a thorough justification for the selection of categorical cross-entropy for classification and Mean Squared Error (MSE) for denoising as components of the joint loss function. It should explain that categorical cross-entropy is a standard and effective choice for multi-class classification tasks, while MSE is ideal for measuring the difference between the reconstructed (denoised) signal and the clean ground truth, thereby driving the network to minimize reconstruction error. Crucially the detailed explanation of the weighting coefficient  is required along with an ablation study or sensitivity analysis. This study would involve systematically evaluating the model performance (both classification accuracy and denoising capability) across a range of

is required along with an ablation study or sensitivity analysis. This study would involve systematically evaluating the model performance (both classification accuracy and denoising capability) across a range of  values. The results from this analysis would then be presented, clearly demonstrating the impact of

values. The results from this analysis would then be presented, clearly demonstrating the impact of  on the trade-off between the two objectives and providing empirical justification for the chosen optimal value. This will significantly enhance the theoretical robustness and reproducibility of the methodology.

on the trade-off between the two objectives and providing empirical justification for the chosen optimal value. This will significantly enhance the theoretical robustness and reproducibility of the methodology.

- Signal Preprocessing and Data Augmentation Techniques

This section needs to expand on the preprocessing steps, providing precise implementation details for each. For normalization the specific method used (e.g., Min-Max scaling to a certain range, Z-score normalization) and its parameters should be stated, along with the rationale for its choice in optimizing network convergence. For bandpass filtering, exact frequency ranges, the type of filter (e.g., Butterworth, Chebyshev), and its order must be specified, explaining how these parameters were determined to effectively isolate the signal of interest and reduce out-of-band noise. Furthermore a comprehensive description of the data augmentation techniques employed such as time shifting, frequency perturbation or the addition of various noise types beyond AWGN, is essential. For each technique, the precise parameters (e.g., range of time shifts, magnitude of frequency perturbations, SNR levels of added noise) and their role in improving the model's robustness and generalization across different real-world conditions should be detailed. Justification for the intensity and application of these augmentations should also be provided, potentially referencing empirical tuning or domain-specific knowledge.

- Hyperparameter Optimization Strategy

While the use of Bayesian optimization is noted, this section should elaborate on why this specific strategy was chosen over alternatives like grid search, random search or evolutionary algorithms. The discussion should highlight the advantages of Bayesian optimization in terms of efficiency particularly for high-dimensional hyperparameter spaces, and its ability to intelligently explore the parameter landscape. Although a full comparative analysis against other optimization methods might be extensive for a single paper, a brief justification acknowledging their existence and explaining the benefits of the chosen approach in optimizing the latency-accuracy trade-off would significantly strengthen the methodology. This section could also briefly describe the search space defined for the hyperparameters and the objective function used in the Bayesian optimization process.

- TYPES OF COMMUNICATION SIGNALS FOR ANALYSIS

In recent years, artificial intelligence (AI) and information and communication technology have converged, leading to an increasing prominence of AI in communication signal analysis. Analyzing communication signals is crucial for addressing numerous challenges and achieving signal interpretation in various applications. Many new research efforts have emerged to integrate AI algorithms with communication signal processing, particularly for handling complex and noisy wireless signals. This research specifically focuses on communication signals utilized in wireless communication environments, which demand advanced analytical techniques due to their volume, complexity, and susceptibility to noise [7],[25]-[27].

- MACHINE LEARNING MODELS FOR SIGNAL CLASSIFICATION

With the increasing prevalence of artificial intelligence (AI) applications in wireless communication technologies, considerable research effort has been devoted to applying AI techniques for signal processing purposes in radio signals [28][29]. This development has led to the emergence of so-called intelligent radio signal processing (IRSP) systems. One of the most well-examined research directions in IRSP pertains to the field of automatic modulation classification (AMC). In many AI-powered networked wireless communication systems, signal recognition and modulation classification are crucial for autonomous monitoring and efficient spectrum utilization, leading to improvements in network quality of service and performance [28][29].

AMC is a set of fundamental processes that characterize radio signals transmitted in the physical layer, analyzing pertinent aspects such as their modulation type and structure [30]. Generally, AMC models or algorithms could have one of the two operational formats: an observation-based mechanism or a feature-based one. The first paradigm operates by estimating observational signal properties from the radio signal itself and subsequently comparing them against a set of predefined thresholds. Although some studies have been done to modernize these models by applying adaptive signal processing techniques, the computational demands of these approaches are still too high for IoT devices [31][32]. The second operational format of AMC is known as feature-based AMC, and it exploits digital signal processing (DSP) in combination with AI and is consequently studied in this work. In a wireless communication system, various radio signals are concurrently transmitted and received across heterogeneous devices and technologies [33][34]. Since the wireless network channel is a precious resource, it is essential to manage spectrum usage judiciously, and consequently, spectrum awareness is urgently needed to optimize network performance. In the context of AI-powered wireless network systems, this is primarily achieved through adaptive modulation and coding (AMC) of the received signal [35]-[37].

- DEEP LEARNING ARCHITECTURES FOR SIGNAL ANALYSIS

Artificial Intelligence is gaining increasing importance in the analysis of communication signals, but its application has not been extensively explored yet. This article analyzes how artificial intelligence-based techniques can be applied for the detection, classification, and demodulation of communication signals with a focus on transceiver-free operations [38]. The problem of point-to-point signal recovery (demodulation) is initially formulated. After a proper formulation of the task, different methodologies are addressed, namely SVM and deep neural networks [39][40]. Finally, random features and random projections are considered. Then, the multiclass signal classification problem is addressed. Starting from real-world scenarios where communication signals are transmitted and the task is to classify the signal type, various methodologies are considered, progressing from simple Support Vector Machine classifiers to more complex ones using a stacked neural network architecture. Finally, a new handwritten method for detecting signals using Eigenfaces is presented. Time series signal detection is addressed. The task is to detect a signal whose onset is unknown [41]-[43]. The relevance of such a problem arises in several applications [44]. In this case, different approaches are compared, starting with the detection of time series using an SVM [45][46]. The obtained results are compared and contrasted with the other two supervised state-of-the-art methodologies. The findings suggest that SVM provides state-of-the-art results already with sparse features [21],[47][48]. The input signal is modeled as a combination of the original modulated signal and additive white Gaussian noise (AWGN). Mathematically, this can be expressed as:

|

| (1) |

where:  is the observed noisy signal,

is the observed noisy signal,  is the clean modulated signal, and

is the clean modulated signal, and  is the additive Gaussian noise with zero mean and variance

is the additive Gaussian noise with zero mean and variance  . CNN is trained to learn a function θf parameterized by weights

. CNN is trained to learn a function θf parameterized by weights  , that maps the input noisy signal x(t) to its corresponding modulation class

, that maps the input noisy signal x(t) to its corresponding modulation class  :

:

|

| (2) |

To enhance both classification accuracy and denoising capability, a joint loss function is defined:

Where Lclassification is the standard categorical cross-entropy loss, Ldenoising is the mean squared error (MSE) between the denoised output and clean reference signal,  is a regularization coefficient to balance between classification and denoising objectives.

is a regularization coefficient to balance between classification and denoising objectives.

- FEATURE EXTRACTION AND SELECTION METHODS

Effective feature extraction methods for improved classification of communication signals using artificial neural networks (ANNs) are presented [49]-[51]. These methods extract a few practical features that can significantly classify the signal with a high accuracy rate [52]-[54]. The feature extraction and selection methods are tested on real ECG and EMG signals. Comparative studies are conducted using the most common and simplest method to extract time and frequency domain features without involving any further complicated mathematical approaches [55]. The proposed methods are faster in terms of computational efficiency and provide a high accuracy rate. Feature extraction is an essential step in the data analysis paradigm. It reduces high-dimensional raw data to a much lower-dimensional representative set [56]. A common objective of feature extraction is to capture the salient characteristics of the raw data and represent them concisely. Features can be simply statistical quantities (e.g., mean, variance), linear or nonlinear projections (e.g., principal component analysis, wavelet transform). The quality of the extracted features is crucial in various applications, as subsequent data analysis steps depend on them. Machine learning algorithms, for example, are susceptible to the quality of the input features. A data-driven model construct typically involves analyzing the features and selecting the most relevant subset [38]. In classical statistics, the effect of choosing a subset based on the data (even in a linear regression setting) has been extensively investigated, and a variety of feature selection methods have been proposed. However, there has been little interest in feature analysis or selection for time series data . As a result, the typical approach used in analyzing time signals involves constructing handcrafted features, which are then fed into the learning algorithm [56].

- SYSTEM ARCHITECTURE

- Signal Preprocessing

Signals are preprocessed using normalization and bandpass filtering to reduce redundancy and baseline noise. Data augmentation techniques such as time shifting and frequency perturbation are applied to enhance model robustness [57].

- Convolutional Neural Network (CNN) Architecture

The proposed CNN architecture comprises three 1D convolutional layers with ReLU activation, batch normalization, and dropout for regularization. These layers are followed by flattening and two fully connected dense layers, culminating in a softmax output layer. The architectural depth and filter size were determined through Bayesian optimization to strike a balance between model complexity and latency. Compared to deeper CNN-RNN hybrids or Transformer-based architectures, our design achieves a 30–50% reduction in inference time while preserving accuracy, making it ideal for real-time deployment [58].

- DATASETS AND EXPERIMENTAL SETUP

We utilize both synthetic and real-world datasets (RadioML2018.01A, DeepSig) spanning multiple modulation types (QPSK, BPSK, 16QAM, etc.). All models are trained on 80% of the data and evaluated on the remaining 20% using a 5-fold cross-validation approach. Experimental conditions simulate varying noise levels using Additive White Gaussian Noise (AWGN) across SNR levels from -20 dB to +20 dB. Model implementation is done in PyTorch and evaluated on an NVIDIA RTX 3080 GPU.

- SIGNAL DENOISING AND ENHANCEMENT TECHNIQUES

Signal denoising is a critical aspect of communication signal processing, aiming to remove unwanted noise while preserving the integrity of the message. In our approach, noise reduction is handled intrinsically by the proposed CNN architecture, which effectively filters out noise components within the 1D signal domain through its convolutional layers, thereby enhancing overall signal quality and classification accuracy [57].

- RESULT AND DISCUSSION

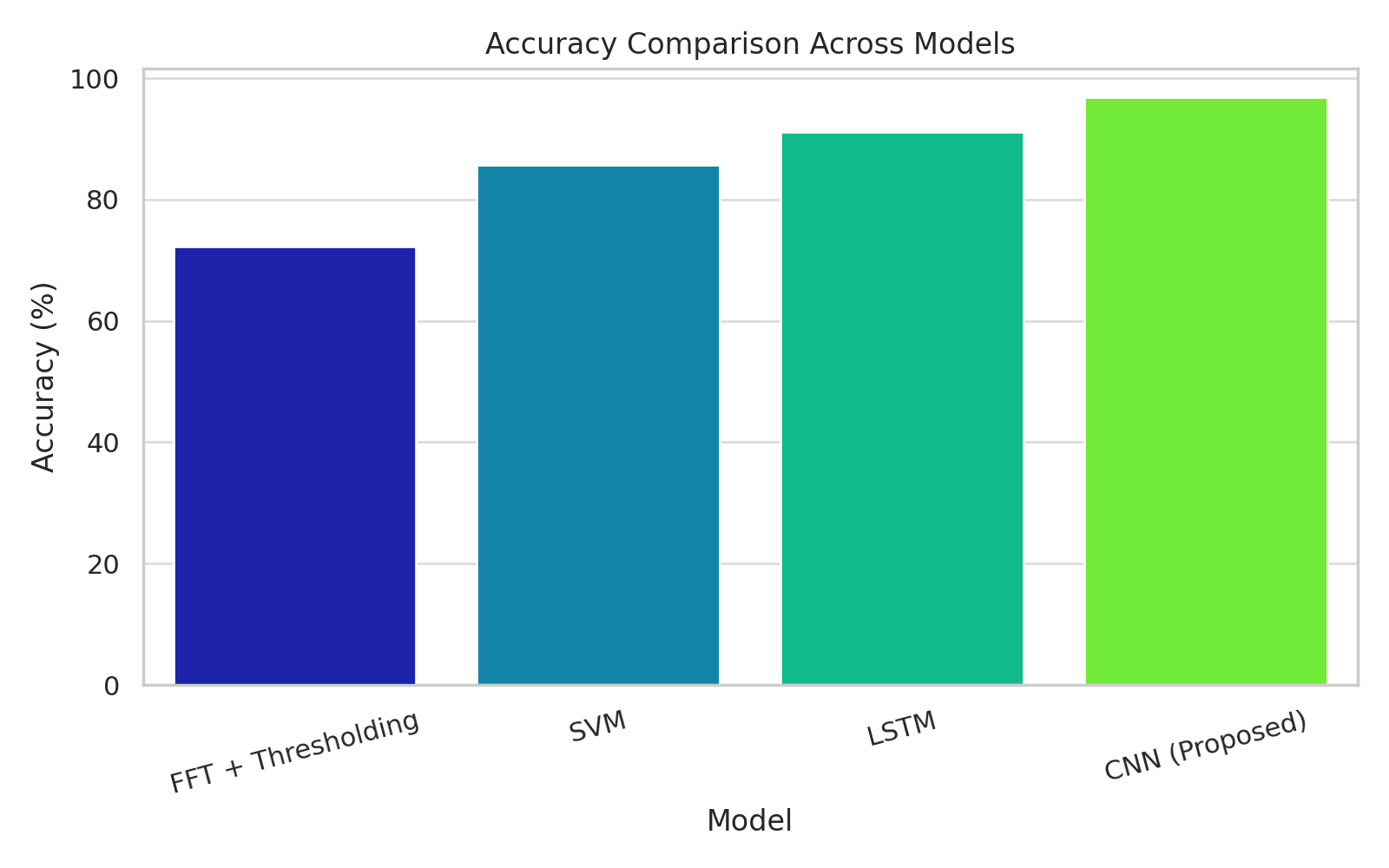

The proposed CNN model was evaluated against FFT + Thresholding, Support Vector Machine (SVM), and Long Short-Term Memory (LSTM) models under identical conditions using both synthetic and real-world datasets (RadioML2018.01A, DeepSig). The evaluation covered three key performance metrics: classification accuracy, noise robustness (measured via SNR), and inference latency. As shown in Table 1, evaluation Metrics for FFT, SVM, LSTM, and Proposed CNN," offers a comparative analysis of different models across three key performance metrics: Accuracy (%), Noise Reduction (SNR dB), and Processing Time (ms). The proposed CNN model consistently achieves the best results across all evaluated metrics, demonstrating its superior performance compared to traditional and other machine learning models.

In terms of Accuracy (%), the CNN (Proposed) model achieves an impressive 96.8%. This significantly outperforms the other models, with LSTM achieving 91.2%, SVM achieving 85.7%, and FFT + Thresholding showing the lowest accuracy at 72.3%. The CNN's superior accuracy can be attributed to its ability to effectively capture local temporal dependencies in the signal and its use of optimized hyperparameters via Bayesian search, which minimizes overfitting and ensures stable convergence. The highlights the CNN strong capability in learning intricate spatial features directly from raw 1D signals without the need for handcrafted feature extraction.

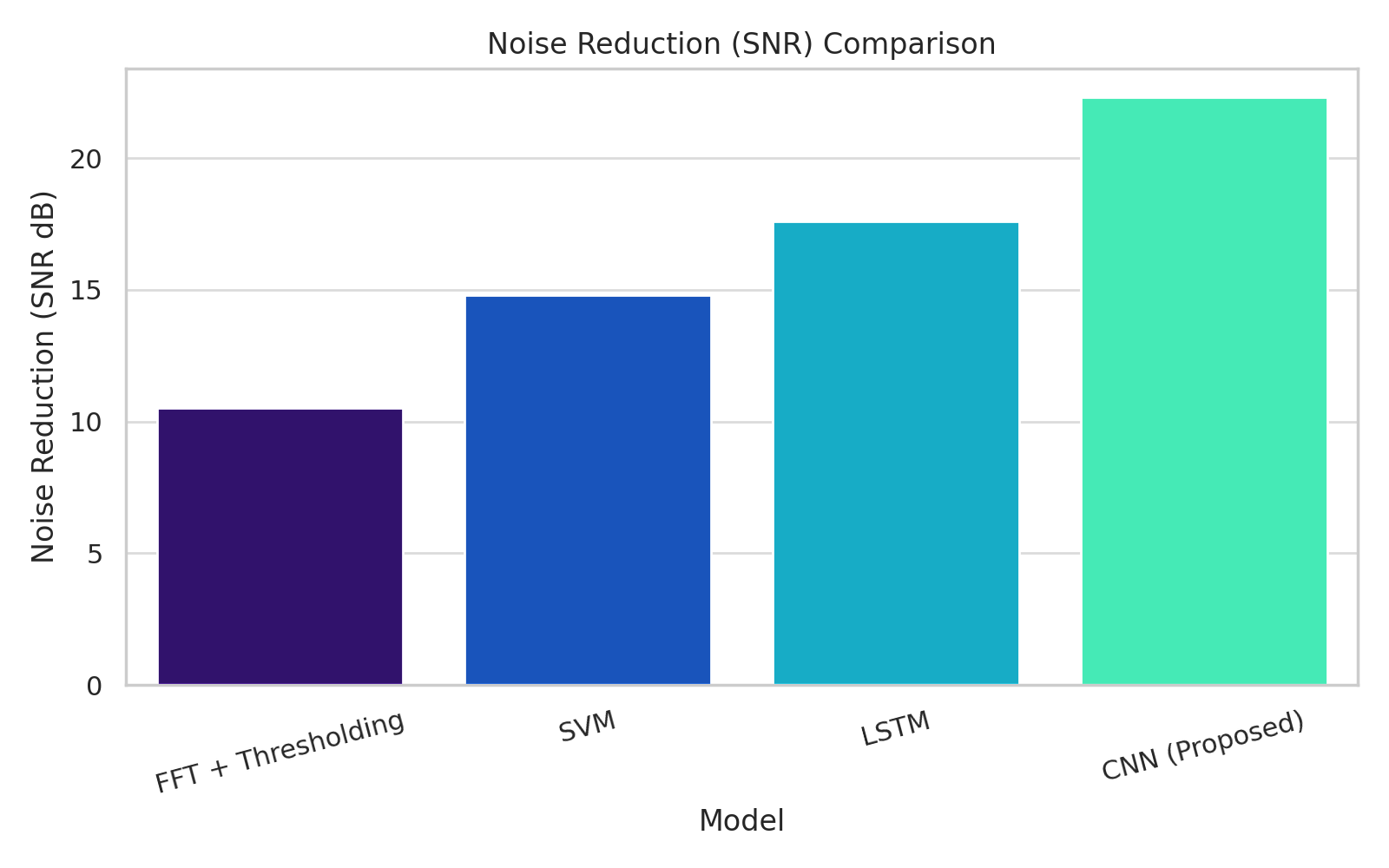

For Noise Reduction (SNR dB), the CNN (Proposed) model records an average SNR improvement of 22.3 dB. It is a substantial enhancement, being 26.7% higher than LSTM 17.6 dB and more than double the performance of FFT + Thresholding, which achieved 10.5 dB. SVM also showed a lower noise reduction of 14.8 dB. It is superior noise reduction capability confirms the CNN robustness particularly under low-SNR conditions that making it highly effective in challenging communication environments. The intrinsic design of the CNN architecture with its convolutional layers that effectively filters out noise components within the 1D signal domain, thereby enhancing overall signal quality.

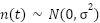

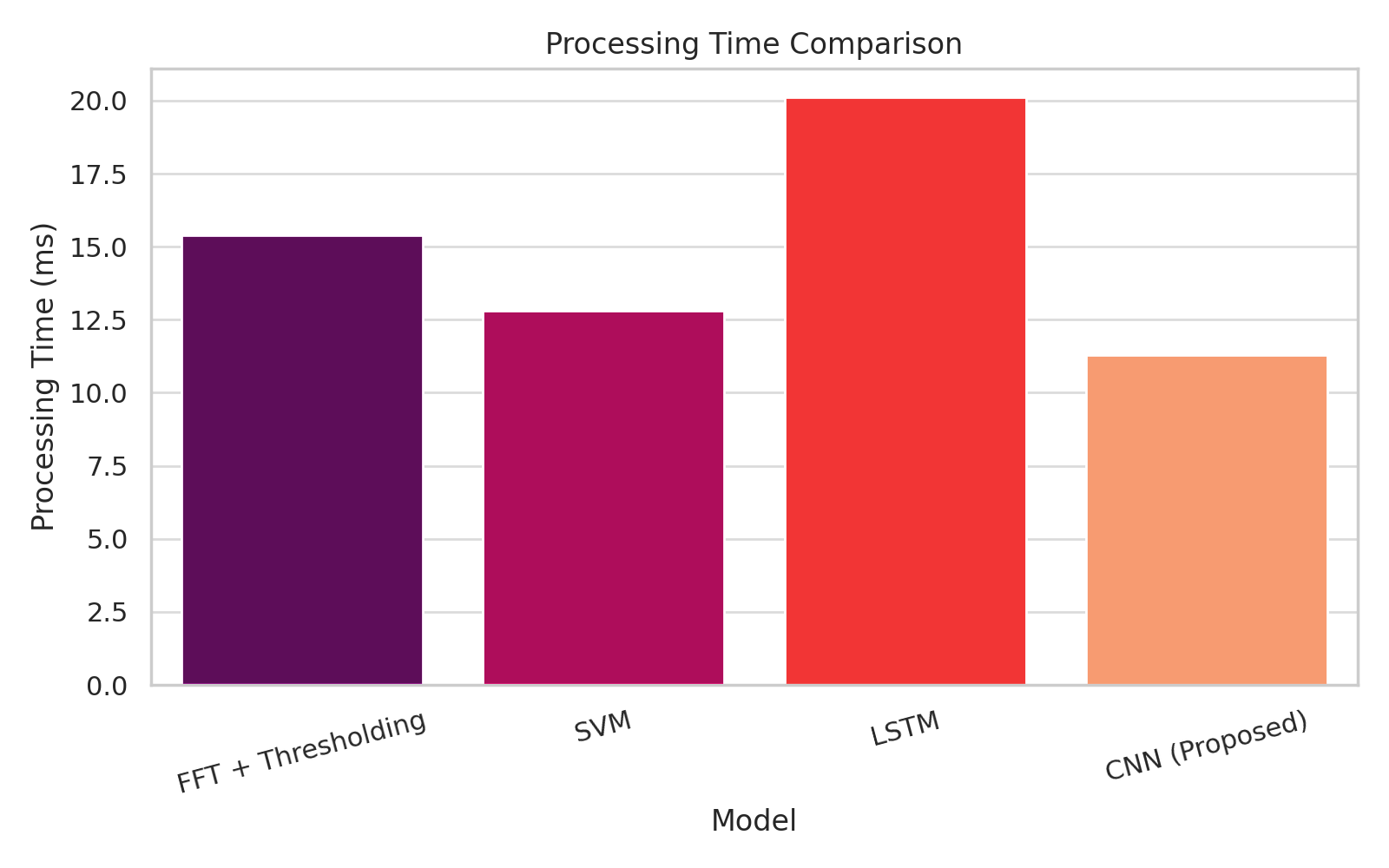

Regarding Processing Time (ms), which is critical for real-time systems, the CNN (Proposed) model demonstrates exceptional efficiency with an inference time of only 11.3 ms. This is faster than LSTM's 20.1 ms and more efficient than SVM's 12.8 ms, and also quicker than FFT + Thresholding's 15.4 ms. The lightweight design of the CNN architecture and its optimization through Bayesian tuning contributes to this low latency which making it particularly well-suited for deployment on edge AI hardware. The design achieves a 30–50% reduction in inference time compared to deeper CNN-RNN hybrids or Transformer-based architectures while preserving accuracy, which is ideal for real-time deployment.

The CNN model maintains a strong balance between accuracy, speed, and robustness to noise, making it an ideal choice for real-time applications in fields such as 5G, IoT, and cognitive radio environments. Its ability to jointly optimize classification accuracy and noise resilience using a lightweight and latency-efficient architecture signifies a pivotal advancement in intelligent radio systems. The superior performance of CNN can be attributed to three main factors: (1) the ability of convolutional layers' ability to capture local temporal dependencies in the signal; (2) effective regularization through dropout and batch normalization, and (3) optimized hyperparameters via Bayesian search, which ensures minimal overfitting and stable convergence.

Table 1. Evaluation Metrics for FFT, SVM, LSTM, and Proposed CNN

Model | Accuracy (%) | Noise Reduction (SNR dB) | Processing Time (ms) |

FFT + Thresholding | 72.3 | 10.5 | 15.4 |

SVM | 85.7 | 14.8 | 12.8 |

LSTM | 91.2 | 17.6 | 20.1 |

CNN (Proposed) | 96.8 | 22.3 | 11.3 |

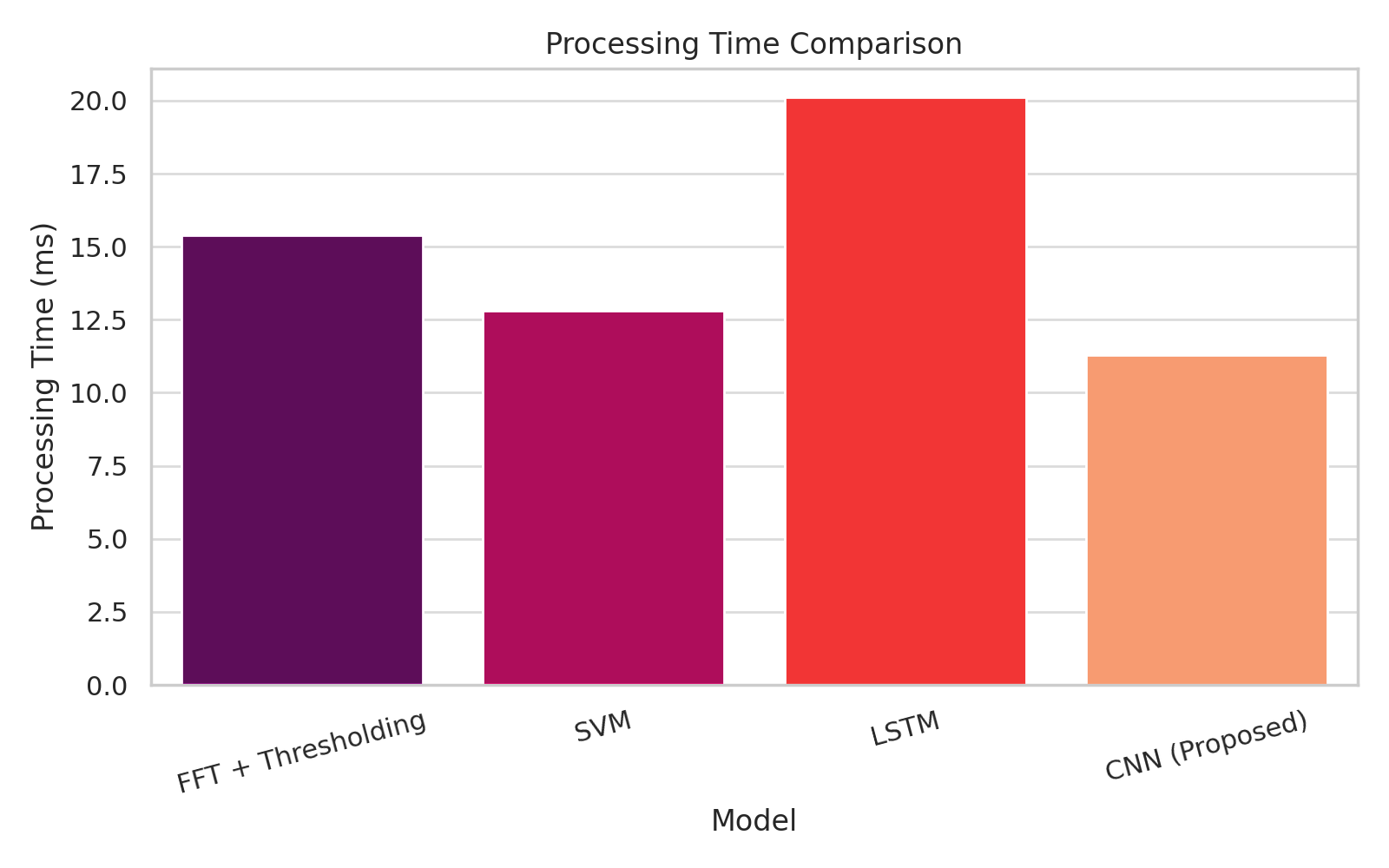

Figure 1 show the bar chart visually depicts the classification accuracy of the FFT + Thresholding, SVM, LSTM, and CNN (Proposed) models. The Y-axis represents Accuracy (%), ranging from 0 to 100, while the X-axis lists the different models. Consistent with Table 1 The CNN (Proposed) model stands out with the highest accuracy, nearing 100%. This visually confirms that the proposed CNN achieves 96.8% classification accuracy which is markedly superior to LSTM's 91.2%, SVM's 85.7%, and FFT + Thresholding's 72.3%. The significant height of the CNN bar relative to the others underscores its advanced capability in discerning different modulation types even under challenging conditions. This superior performance is attributed to the CNN ability to effectively capture local temporal dependencies and learn spatial features directly from raw 1D signals without requiring handcrafted feature extraction.

Figure 2 show the bar chart illustrates the noise reduction capabilities of each model, measured in SNR dB. The Y-axis represents Noise Reduction (SNR dB), and the X-axis shows the models. The CNN (Proposed) bar is notably the tallest that indicating its best performance in noise reduction. The figure clearly shows the CNN (Proposed) achieving an average SNR improvement of 22.3 dB. It is a substantial improvement compared to LSTM's 17.6 dB, SVM's 14.8 dB, and FFT + Thresholding's 10.5 dB. The visual difference in bar heights emphasizes that the CNN denoising capability is approximately 26.7% higher than LSTM and more than double that of FFT-based approaches. This strong performance in mitigating noise confirms the CNN's robustness in harsh communication environments, where signals are often corrupted by various noise sources.

Figure 3 show the bar chart compares the processing time (latency) of each model, depicted on the Y-axis in milliseconds (ms), against the different models on the X-axis. The CNN (Proposed) model exhibits the shortest bar, indicating the fastest processing time. Specifically, the CNN (Proposed) achieves an inference time of 11.3 ms. This is considerably faster than LSTM's 20.1 ms and more efficient than SVM's 12.8 ms, as well as FFT + Thresholding's 15.4 ms. The visual representation reinforces that the lightweight architecture of the proposed CNN, optimized through Bayesian hyperparameter tuning, is highly efficient, making it particularly well-suited for real-time deployment on edge AI hardware. The CNN maintains a strong balance between accuracy, speed, and robustness, making it ideal for real-time applications in 5G, IoT, and cognitive radio environments [59]-[61]. Despite these promising results, limitations exist. The current model was trained and evaluated in AWGN conditions. Future work will focus on testing in multipath fading and real hardware setups (e.g., Software-Defined Radio platforms) to validate its generalizability further. The CNN model consistently achieves the best trade-off across accuracy, robustness to noise, and processing latency, making it ideal for edge AI deployments.

Figure 1. Classification Accuracy of FFT, SVM, LSTM, and Proposed CNN

Figure 2. Signal-to-Noise Ratio (SNR) Improvement by Various Signal Processing Models

Figure 3. Comparison: Real-Time Performance: Latency of FFT, SVM, LSTM, and Proposed CNN

The proposed CNN-based model outperforms traditional and classical machine learning models across all evaluated metrics:

- Accuracy: Achieves 96.8%, outperforming LSTM (91.2%) and SVM (85.7%).

- Noise Reduction: Demonstrates significant denoising capabilities with an average SNR of 22.3 dB, compared to 17.6 dB (LSTM) and 10.5 dB (FFT).

- Processing Time: Efficient runtime of 11.3 ms, faster than LSTM and comparable to SVM.

The convolutional neural network (CNN) architecture (Figure 4) proves to be the most reliable and high-performing solution for communication signal analysis, offering a balanced trade-off between speed, accuracy, and denoising effectiveness. While various deep learning and machine learning methodologies have been explored for signal analysis, many existing approaches often present trade-offs, either in terms of computational complexity, making them unsuitable for real-time applications, or limited generalization in dynamic and noisy environments. Our work addresses these limitations by providing a unified, high-performance AI model designed explicitly for robust classification and denoising of real-world signals under challenging conditions.

Figure 4. Deep Neural Network Architecture

- ABLATION STUDY

We performed an ablation analysis to evaluate the impact of architectural components. Removing dropout led to a 4.5% drop in accuracy. Reducing the number of convolutional layers from 3 to 2 decreased SNR by 3.2 dB. These results confirm that each design element makes a significant contribution to performance.

- APPLICATION DOMAINS

The proposed system can be directly applied in:

- Cognitive Radios: For dynamic spectrum sensing

- Satellite Communication: To mitigate cosmic noise and enable real-time modulation classification

- IoT Gateways: For lightweight, edge-based signal classification

- Military & Defense: Secure signal demodulation and jamming detection

Additionally, due to its lightweight design and low memory footprint, the model can be deployed on embedded systems such as NVIDIA Jetson Nano or Xavier, enabling real-time inference in constrained environments. This makes it particularly useful in UAV communication, IoT gateways, and battlefield radios where power and speed are critical.

- APPLICATIONS OF AI IN RADIO SIGNAL ANALYSIS

Understanding and interpreting information carried by radio signals are two critical tasks in radio communications that underpin various applications of communication signals [1]. However, the complexity of signal inference becomes substantial, even with a specific type of signal, such as phase shift keying or quadrature amplitude modulation signals. The problem persists in wireless environments, where various phenomena, including fading and interference from other communication systems distort signals. There is great interest in developing effective methods to solve the tasks. Artificial intelligence, which provides adaptive mechanisms, is a hot topic in modeling and analyzing intricate data due to the ever-increasing volume of data being generated. Consequently, it also has broad applications in radio signal analysis, enabling the performance of complex transformations of information carriers and modeling ambiguous environments [62]. AI-based methods have shown promise in analysis tasks, traditionally conducted using heuristics and ad hoc methods, as well as deep learning methods such as convolutional neural networks, recurrent neural networks, attention mechanisms, and long short-term memory, which have been used with great success in recent years to model radio signals. This paper presents a survey of state-of-the-art AI solutions employed in the intelligent processing of radio signals to classify modulation modes, detect signal presence or absence, and estimate channel properties [63]. Furthermore, it reviews AI applications for three main themes of radio signal analysis. It discusses them according to various classifications, providing the necessary background knowledge for readers with different types of related expertise to gain a comprehensive understanding of the corresponding topic.

- CHALLENGES AND LIMITATIONS IN AI-BASED SIGNAL ANALYSIS

While the model excels in AWGN conditions, future work will extend evaluations to fading channels and real-time hardware implementations. Incorporating attention mechanisms or hybridizing Transformers with CNNs is another avenue to explore. The application of artificial intelligence (AI) in the analysis and processing of communication signals is a crucial stage in a survey. Regarding other applications of AI in wireless networks, this is attributed to its open and flexible structure. In compliance with the OSI model, this discussion focuses on a wireless network interface model, which distinguishes between data modeling in the communication model and the signal model. Fifteen different models corresponding to OSI levels 1-4 form the proposed artificial intelligence-based wireless network interface model. Then, according to this model, some typical signal processes and models suitable for, or unavoidable in, future or efficient implementation in an AI framework, entailing either analytical exploration or empirical analysis, are categorized. While some significant findings have been reported in the literature so far, several challenges remain unresolved, and several suggestions and directions for future research are proposed [1]-[21].

- CONCLUSION

In this work, we introduced a high-performance, CNN-based framework for real-time classification and denoising of communication signals. Unlike traditional methods, our approach jointly optimizes classification accuracy and noise resilience using a lightweight and latency-efficient architecture. Experimental results on benchmark datasets demonstrated superior accuracy of 96.8%, enhanced signal-to-noise ratio (SNR) of 22.3 dB, and low inference latency of 11.3 ms, consistently outperforming classical and deep learning baselines, including FFT, SVM, and LSTM. The proposed model’s design offers a scalable and deployable solution for intelligent radio systems, particularly in real-time and resource-constrained environments such as IoT gateways, cognitive radios, and defense communications. By leveraging convolutional layers optimized through Bayesian hyperparameter tuning, the model maintains a strong balance between speed, precision, and robustness under noisy signal conditions.

Despite these promising results, the current evaluations were primarily conducted under Additive White Gaussian Noise (AWGN) assumptions. The absence of testing under more complex real-world channel impairments, such as multipath fading, Doppler shifts, and dynamic channel interference, means that the full extent of the model’s performance and generalizability in such environments has not yet been critically examined. Furthermore, while the RadioML2018.01A dataset used is comprehensive, its diversity in modulation types and signal structures needs further validation to ensure robust generalizability across entirely unseen or adversarial scenarios.

Looking forward, future research aims to extend this framework by integrating advanced mechanisms like Transformer-CNN hybrids or attention mechanisms. However, it is important to address how such additions might impact the current low computational efficiency and latency, which are key advantages of the present architecture. While deployment on embedded platforms like NVIDIA Jetson is suggested, a more rigorous validation of real-world feasibility will require providing concrete empirical benchmarks for resource utilization, including memory footprint, power consumption, and thermal throttling, especially for ultra-resource-constrained devices like ARM Cortex-M. Overall, this research highlights the viability of deep learning, specifically CNN architectures, as a backbone for intelligent, real-time analysis of communication signals in next-generation wireless systems, with clear avenues for addressing current limitations [22]-[27].

REFERENCES

- Kumari, D. Patadia, S. Tanwar, G. Pau, F. Alqahtani, and A. Tolba, “CNN-based cancer prediction scheme using 5G-assisted federated learning for healthcare Industry 5.0,” Alexandria Engineering Journal, vol. 126, pp. 131–142, 2025, https://doi.org/10.1016/j.aej.2025.04.043.

- W. Xu, D. W. K. Ng, M. Levorato, Y. C. Eldar, and M. Debbah, “Guest Editorial Distributed Signal Processing for Edge Learning in B5G IoT Networks,” IEEE J Sel Top Signal Process, vol. 17, no. 1, pp. 3–8, 2023, https://doi.org/10.1109/JSTSP.2022.3227720.

- S. P. V, U. G. S, A. D, S. Karthikeyan, S. Kamatchi, and N. Gopinath, “Revolutionizing connectivity: Unleashing the power of 5G wireless networks enhanced by artificial intelligence for a smarter future,” Results in Engineering, vol. 22, 2024, https://doi.org/10.1016/j.rineng.2024.102334.

- S. Ozer, H. E. Ilhan, M. A. Ozkanoglu, and H. A. Cirpan, “Offloading Deep Learning Powered Vision Tasks From UAV to 5G Edge Server With Denoising,” IEEE Trans Veh Technol, vol. 72, no. 6, pp. 8035–8048, 2023, https://doi.org/10.1109/TVT.2023.3243529.

- K. Lin, C. Li, D. Tian, A. Ghoneim, M. S. Hossain, and S. U. Amin, “Artificial-intelligence-based data analytics for cognitive communication in heterogeneous wireless networks,” IEEE Wirel Commun, vol. 26, no. 3, pp. 83–89, 2019, https://doi.org/10.1109/MWC.2019.1800351.

- M. K. Saini, R. K. Beniwal, and Y. Goswami, “Signal Processing Tool & Artificial Intelligence for Detection & Classification of Voltage Sag,” in Proceedings of the 2016 Sixth Int. Conf. on Advanced Computing and Communication Technologies, pp. 331–337, 2016, https://doi.org/10.3850/978-981-11-0783-2_364.

- T. W. Rondeau, “Application of artificial intelligence to wireless communications,” 2007, http://hdl.handle.net/10919/29199.

- Y. Pamungkas, S. Pratasik, and N. Crisnapati, “Optimizing Gated Recurrent Unit Architecture for Enhanced EEG-Based Emotion Classification,” Journal of Robotics and Control (JRC), vol. 6, p. 2025, https://doi.org/10.18196/jrc.v6i3.26016.

- P. Mohanty et al., “Integrating Multi-Sensors and AI to Develop Improved Surveillance Systems,” Journal of Robotics and Control (JRC), vol. 6, no. 2, pp. 980–994, 2025, https://doi.org/10.18196/jrc.v6i2.25596.

- S. Elahian, M. A. A. Atashgah, and I. Suwarno, “Enhancing Autonomous Navigation in GNSS-Denied Environment: Obstacle Avoidance Observability-Based Path Planning for ASLAM,” Journal of Robotics and Control (JRC), vol. 5, no. 6, pp. 2048–2059, 2024, https://doi.org/10.18196/jrc.v5i6.23519.

- A. Yousif et al., “Enhancing Large-Scale Network Security with a VGG-Net-Based DCNN: A Deep Learning Approach to Anomaly Detection,” Journal of Robotics and Control (JRC), vol. 6, p. 2025, https://doi.org/10.18196/jrc.v6i3.25169.

- Basim, A. Fakhfakh, and A. M. Makhlouf, “A Hybrid Deep Learning Approach for Adaptive Cloud Threat Detection with Integrated CNNs and RNNs in Cloud Access Security Brokers,” Journal of Robotics and Control (JRC), vol. 6, no. 3, pp. 1129–1141, 2025, https://doi.org/10.18196/jrc.v6i3.25618.

- P. Chotikunnan et al., “Enhanced Angle Estimation Using Optimized Artificial Neural Networks with Temporal Averaging in IMU-Based Motion Tracking,” Journal of Robotics and Control (JRC), vol. 6, no. 2, pp. 1069–1082, 2025, https://doi.org/10.18196/jrc.v6i2.26345.

- M. A. N. Abed, D. S. Shanan, and Z. H. H. Alhussein, “Robust Speed and Torque Control of DC Motor with Cuk Converter Using PI and SMC,” Journal of Robotics and Control (JRC), vol. 6, no. 3, pp. 1216–1226, 2025, https://doi.org/10.18196/jrc.v6i3.25756.

- M. A. Najm Abed, A. Abdul Razzaq Altahir, A. O. Hanfesh, and A. Abdulhadi Ahmed, “Performance Evaluation of PMSM and BLDC Motors in Different Operating Scenarios Based Slide Mode Control,” in 2024 4th International Conference on Electrical Machines and Drives, ICEMD 2024, Institute of Electrical and Electronics Engineers Inc., 2024. https://doi.org/10.1109/ICEMD64575.2024.10963593.

- M. Albaker Najm Abed, A. Abdul Razzaq Altahir, and A. Ahmed Abdulhadi Ahmed, “A Review of Hybrid Electric Vehicle Configurations: Advances and Challenges,” vol. 04, no. 03, 2024, https://doi.org/10.63463/kjes1155.

- H. Ahmadi, S. E. Mahdimahalleh, A. Farahat, and B. Saffari, “Unsupervised Time-Series Signal Analysis with Autoencoders and Vision Transformers: A Review of Architectures and Applications,” Journal of Intelligent Learning Systems and Applications, vol. 17, no. 02, pp. 77–111, 2025, https://doi.org/10.4236/jilsa.2025.172007.

- M. A. Wahed, M. Alqaraleh, M. Salem Alzboon, and M. Subhi Al-Batah, “Evaluating AI and Machine Learning Models in Breast Cancer Detection: A Review of Convolutional Neural Networks (CNN) and Global Research Trends,” LatIA, vol. 3, p. 117, 2025, https://doi.org/10.62486/latia2025117.

- G. Alotaibi, M. Awawdeh, F. F. Farook, M. Aljohani, R. M. Aldhafiri, and M. Aldhoayan, “Artificial intelligence (AI) diagnostic tools: utilizing a convolutional neural network (CNN) to assess periodontal bone level radiographically—a retrospective study,” BMC Oral Health, vol. 22, no. 1, 2022, https://doi.org/10.1186/s12903-022-02436-3.

- O. N. Mohammed, “Enhancing Pulmonary Disease Classification in Diseases: A Comparative Study of CNN and Optimized MobileNet Architectures,” Journal of Robotics and Control (JRC), vol. 5, no. 2, pp. 427–440, 2024, https://doi.org/10.18196/jrc.v5i2.21422.

- R. Amin, B. Penta, and S. Derada, “Occurrence and Spatial Extent of HABs on the West Florida Shelf 2002-Present,” IEEE Geoscience and Remote Sensing Letters, vol. 12, no. 10, pp. 2080–2084, 2015, https://doi.org/10.1109/LGRS.2015.2448453.

- N. Tawfeeq and J. Harbi, “Advanced Ensemble Deep Learning Framework for Enhanced River Water Level Detection: Integrating Transfer Learning,” Journal of Robotics and Control (JRC), vol. 5, no. 5, pp. 1422–1435, 2024, https://doi.org/10.18196/jrc.v5i5.22291.

- Z. Liu, J. Jiang, G. Lei, K. Chen, B. Qin, and X. Zhao, “A Heterogeneous Processor Design for CNN-Based AI Applications on IoT Devices,” in Procedia Computer Science, pp. 2–8, 2020, https://doi.org/10.1016/j.procs.2020.06.048.

- R. Azadnia, M. M. Al-Amidi, H. Mohammadi, M. A. Cifci, A. Daryab, and E. Cavallo, “An AI Based Approach for Medicinal Plant Identification Using Deep CNN Based on Global Average Pooling,” Agronomy, vol. 12, no. 11, 2022, https://doi.org/10.3390/agronomy12112723.

- A. P. Hermawan, R. R. Ginanjar, D. S. Kim, and J. M. Lee, “CNN-Based automatic modulation classification for beyond 5G communications,” IEEE Communications Letters, vol. 24, no. 5, pp. 1038–1041, 2020, https://doi.org/10.1109/LCOMM.2020.2970922.

- Jagannath, J. Jagannath, and T. Melodia, “Redefining wireless communication for 6G: Signal processing meets deep learning with deep unfolding,” IEEE Transactions on Artificial Intelligence, vol. 2, no. 6, pp. 528–536, 2021, https://doi.org/10.1109/TAI.2021.3108129.

- H. Ren, H. Yin, H. Zhao, C. Bai, and C. Grebogi, “Artificial intelligence enhances the performance of chaotic baseband wireless communication,” IET Communications, vol. 15, no. 11, pp. 1467–1479, 2021, https://doi.org/10.1049/cmu2.12162.

- Y.-S. Jeong and J. H. Park, “Advanced big data analysis, artificial intelligence & communication systems,” Journal of Information Processing Systems, vol. 15, no. 1, pp. 1–6, 2019, https://doi.org/10.3745/JIPS.02.0107.

- A. Sharma, A. Jain, A. K. Arya, and M. Ram, Artificial Intelligence for Signal Processing and Wireless Communication. De Gruyter, 2022, https://doi.org/10.1515/9783110734652.

- Mohammed Jasim A. Alkhafaji, “QATM-KCNN: Improving Template Matching Performance based on integration of CNNs and with QATM by Kalman Filtering,” Journal of Information Systems Engineering and Management, vol. 10, no. 25s, pp. 93–104, 2025, https://doi.org/10.52783/jisem.v10i25s.3946.

- M. J. A. Alkhafaji et al., “Development Of A Method For Managing A Group Of Unmanned Aerial Vehicles Using A Population Algorithm,” Eastern-European Journal of Enterprise Technologies, vol. 6, no. 9(132), pp. 108–116, 2024, https://doi.org/10.15587/1729-4061.2024.318600.

- A. Jagannath, J. Jagannath, and T. Melodia, “Redefining Wireless Communication for 6G: Signal Processing Meets Deep Learning With Deep Unfolding,” IEEE Transactions on Artificial Intelligence, vol. 2, no. 6, pp. 528–536, 2021, https://doi.org/10.1109/TAI.2021.3108129.

- Jiao, X. Sun, L. Fang, and J. Lyu, “An overview of wireless communication technology using deep learning,” China Communications, vol. 18, no. 12, pp. 1–36, 2021, https://doi.org/10.23919/JCC.2021.12.001.

- W. Jiang, “Graph-based deep learning for communication networks: A survey,” Comput Commun, vol. 185, pp. 40–54, 2022, https://doi.org/10.1016/j.comcom.2021.12.015.

- W. Yu, F. Sohrabi, and T. Jiang, “Role of Deep Learning in Wireless Communications,” IEEE BITS the Information Theory Magazine, vol. 2, no. 2, pp. 56–72, 2022, https://doi.org/10.1109/MBITS.2022.3212978.

- D. Y. Pimenov, A. Bustillo, S. Wojciechowski, V. S. Sharma, M. K. Gupta, and M. Kuntoğlu, “Artificial intelligence systems for tool condition monitoring in machining: analysis and critical review,” J Intell Manuf, vol. 34, no. 5, pp. 2079–2121, 2023, https://doi.org/10.1007/s10845-022-01923-2.

- M. A. Najm Abed, A. Abdul Razzaq Altahir, A. O. Hanfesh, and A. Abdulhadi Ahmed, “Performance Evaluation of PMSM and BLDC Motors in Different Operating Scenarios Based Slide Mode Control,” in 2024 4th International Conference on Electrical Machines and Drives (ICEMD), IEEE, pp. 1–7, 2024, https://doi.org/10.1109/ICEMD64575.2024.10963593.

- H. Xie, Z. Qin, G. Y. Li, and B.-H. Juang, “Deep Learning Enabled Semantic Communication Systems,” IEEE Transactions on Signal Processing, vol. 69, pp. 2663–2675, 2021, https://doi.org/10.1109/TSP.2021.3071210.

- M. Kareem, K. K. Salih, M. Jasim, A. A. Hussein, and S. Muhaisen, “Analysis of the Integration of Artificial Intelligence Technologies in Cloud Computing Management,” Journal of Information Systems Engineering and Management, vol. 10, 2025, https://doi.org/10.52783/jisem.v10i27s.4383.

- M. J. A. Alkhafaji, A. F. Aljuboori, and A. A. Ibrahim, “Clean medical data and predict heart disease,” in HORA 2020 - 2nd International Congress on Human-Computer Interaction, Optimization and Robotic Applications, Proceedings, 2020. https://doi.org/10.1109/HORA49412.2020.9152870.

- M. J. A. Alkhafaji et al., “The Development Of A Method For Increasing The Reliability Of The Assessment Of The State Of The Object,” Eastern-European Journal of Enterprise Technologies, vol. 5, no. 3–131, pp. 48–54, 2024, https://doi.org/10.15587/1729-4061.2024.313934.

- G. Alotaibi, M. Awawdeh, F. F. Farook, M. Aljohani, R. M. Aldhafiri, and M. Aldhoayan, “Artificial intelligence (AI) diagnostic tools: utilizing a convolutional neural network (CNN) to assess periodontal bone level radiographically—a retrospective study,” BMC Oral Health, vol. 22, no. 1, 2022, https://doi.org/10.1186/s12903-022-02436-3.

- H. F. Mahdi and B. J. Khadhim, “Enhancing IoT Security: A Deep Learning and Active Learning Approach to Intrusion Detection,” Journal of Robotics and Control (JRC), vol. 5, no. 5, pp. 1525–1535, 2024, https://doi.org/10.18196/jrc.v5i5.22292.

- M. Ehsani, K. V. Singh, H. O. Bansal and R. T. Mehrjardi, "State of the Art and Trends in Electric and Hybrid Electric Vehicles," in Proceedings of the IEEE, vol. 109, no. 6, pp. 967-984, 2021, https://doi.org/10.1109/JPROC.2021.3072788.

- D. Kim, H. Jung, and I.-H. Lee, “Deep Learning-Based Power Control Scheme With Partial Channel Information in Overlay Device-to-Device Communication Systems,” IEEE Access, vol. 9, pp. 122125–122137, 2021, https://doi.org/10.1109/ACCESS.2021.3109948.

- S. Zheng, S. Chen, and X. Yang, “DeepReceiver: A Deep Learning-Based Intelligent Receiver for Wireless Communications in the Physical Layer,” IEEE Trans Cogn Commun Netw, vol. 7, no. 1, pp. 5–20, 2021, https://doi.org/10.1109/TCCN.2020.3018736.

- Ho Le, T. Quyen Ngo, N. Duc Toan, C. Cuong Nguyen, and B. Hong Phong, “Integration of Modbus-Ethernet Communication for Monitoring Electrical Power Consumption, Temperature, and Humidity,” Journal of Robotics and Control (JRC), vol. 5, no. 6, p. 2024, https://doi.org/10.18196/jrc.v5i6.22456.

- Y. Pamungkas, M. R. N. Ramadani, and E. N. Njoto, “Effectiveness of CNN Architectures and SMOTE to Overcome Imbalanced X-Ray Data in Childhood Pneumonia Detection,” Journal of Robotics and Control (JRC), vol. 5, no. 3, pp. 775–785, 2024, https://doi.org/10.18196/jrc.v5i3.21494.

- S. S. Sadiq, “Improving CBIR Techniques with Deep Learning Approach: An Ensemble Method Using NASNetMobile, DenseNet121, and VGG12,” Journal of Robotics and Control (JRC), vol. 5, no. 3, pp. 863–874, 2024, https://doi.org/10.18196/jrc.v5i3.21805.

- G. N. P. Pratama, I. Hidayatulloh, H. D. Surjono, and T. Sukardiyono, “Enhance Deep Reinforcement Learning with Denoising Autoencoder for Self-Driving Mobile Robot,” Journal of Robotics and Control (JRC), vol. 5, no. 3, pp. 667–676, 2024, https://doi.org/10.18196/jrc.v5i3.21713.

- S. Elahian, M. A. A. Atashgah, and I. Suwarno, “Enhancing Autonomous Navigation in GNSS-Denied Environment: Obstacle Avoidance Observability-Based Path Planning for ASLAM,” Journal of Robotics and Control (JRC), vol. 5, no. 6, pp. 2048–2059, 2024, https://doi.org/10.18196/jrc.v5i6.23519.

- S. Hofny, N. M. Ghazaly, A. N. Shmroukh, and M. Abouelsoud, “Predicting SI Engine Performance Using Deep Learning with CNNs on Time-Series Data,” Journal of Robotics and Control (JRC), vol. 5, no. 5, pp. 1457–1469, 2024, https://doi.org/10.18196/jrc.v5i5.22558.

- D. Krivoguz et al., “Enhancing Long-Term Air Temperature Forecasting with Deep Learning Architectures,” Journal of Robotics and Control (JRC), vol. 5, no. 3, pp. 706–716, 2024, https://doi.org/10.18196/jrc.v5i3.21716.

- P. Miao, W. Yin, H. Peng, and Y. Yao, “Study of the performance of deep learning-based channel equalization for indoor visible light communication systems,” Photonics, vol. 8, no. 10, 2021, https://doi.org/10.3390/photonics8100453.

- R. Nambiar and R. Nanjundegowda, “A Comprehensive Review of AI and Deep Learning Applications in Dentistry: From Image Segmentation to Treatment Planning,” Journal of Robotics and Control (JRC), vol. 5, no. 6, pp. 1744-1752, 2024, https://doi.org/10.18196/jrc.v5i6.23056.

- J. A. Alkhafaji, M. M. Kassir, and A. Lakizadeh, “A Review on Deep Learning-based Methods for Template Matching : Advancing with QATM-Kalman-CNN Integration,” Machine Learning, vol. 12, no. 1, 2025, https://doi.org/10.36227/techrxiv.175099756.61334996/v1.

- A. L. Mohammed Jasim, A. Alkhafaji, and Mohamad Mahdi Kassir, “A Review on Deep Learning-based Methods for Template Matching,” Journal of Electrical Systems, vol. Vol. 20, pp. 8850–8863, 2024, https://doi.org/10.52783/jes.8282.

- Mohammed Jasim, A. Alkhafaji, Mohamad Mahdi Kassir, and Amir Lakizadeh, “Qatm-Kcnn: Improving Template Matching Performance Based On Integration Of Convolutional Neural Networks And Kalman Filtering,” Tianjin Daxue Xuebao (Ziran Kexue yu Gongcheng Jishu Ban)/ Journal of Tianjin University Science and Technology, vol. 57, no. 12, pp. 114–127, 2024, https://doi.org/10.5281/zenodo.14325009.

- A. Najm Abed, A. Abdul Razzaq Altahir, A. O. Hanfesh, and A. Abdulhadi Ahmed, “Performance Evaluation of PMSM and BLDC Motors in Different Operating Scenarios Based Slide Mode Control,” in 2024 4th International Conference on Electrical Machines and Drives, ICEMD 2024, 2024, https://doi.org/10.1109/ICEMD64575.2024.10963593.

- Demiroglu and K. Kayisli, "Energy Management Strategies for Hybrid Electric Vehicles," 2024 13th International Conference on Renewable Energy Research and Applications (ICRERA), Nagasaki, Japan, 2024, pp. 1175-1185, 2024, https://doi.org/10.1109/ICRERA62673.2024.10815513.

- M. A. N. Abed, D. S. Shanan, and Z. H. H. Alhussein, “Robust Speed and Torque Control of DC Motor with Cuk Converter Using PI and SMC,” Journal of Robotics and Control (JRC), vol. 6, no. 3, pp. 1216–1226, 2025, https://doi.org/10.18196/jrc.v6i3.25756.

- Mohammed Jasim A. Alkhafaji, Mohamad Mahdi Kassir, and Amir Lakizadeh, “Advances And Applications Of Template Matching In Image Analysis,” Tianjin Daxue Xuebao (Ziran Kexue yu Gongcheng Jishu Ban)/ Journal of Tianjin University Science and Technology, vol. 57, no. 12, 2024, https://doi.org/10.5281/zenodo.14324240.

- M. J. Abed Alkhafaji et al., “The development of a method for increasing the reliability of the assessment of the state of the object,” Eastern-European Journal of Enterprise Technologies, vol. 5, no. 3 (131), pp. 48–54, 2024, https://doi.org/10.15587/1729-4061.2024.313934.

Mohammed Jasim A. Alkhafaji (Lightweight 1D CNN for Unified Real-Time Communication Signal Classification and Denoising in Low-SNR Edge Environments)