Buletin Ilmiah Sarjana Teknik Elektro ISSN: 2685-9572

Maintaining Empathy and Relational Integrity in Digitally Mediated Social Work: Practitioner Strategies for Artificial Intelligence Integration

Yih-Chang Chen 1, Chia-Ching Lin 2

1 Department of Information Management, Chang Jung Christian University, Tainan 711, Taiwan

2 Department of Finance, Chang Jung Christian University, Tainan 711, Taiwan

ARTICLE INFORMATION

Article History: Received 13 March 2025; Revised 27 April 2025; Accepted 30 April 2025

ABSTRACT

This study addresses the critical challenge of preserving relational integrity in social work practice within artificial intelligence (AI)-enhanced environments. While AI technologies promise operational efficiency, their impact on empathy and human connection in social work is not fully understood. This research explores how social workers maintain relational integrity when interacting with clients through AI tools, and it offers practical strategies and theoretical insights. Its main contribution is a relational framework for AI integration in social work practice that emphasizes human-centered principles. The study uses a qualitative phenomenological approach, drawing on 24 licensed social workers from diverse sectors (e.g., child welfare, elder care, and mental health) in three urban areas known for AI adoption. Data collection involved semi-structured interviews and artifact analysis, including AI interface screenshots and decision-making protocols, to capture practitioner experiences. Findings reveal three key themes: reframing empathy in digital interactions, AI as both partner and adversary, and ethical tensions. Participants rated video calls and visual aids as important for preserving empathy, and social workers employ proactive strategies to manage AI’s limitations. The study highlights the need for clear guidelines, interdisciplinary collaboration, and training to ensure that AI supports relational practices rather than replacing them. These findings carry policy and practice implications and offer a foundation for future research and AI tool development in social services.

Keywords: Digitally Mediated Social Work; Empathy Preservation; Artificial Intelligence Integration; Relational Integrity; AI-Enhanced Social Services

Corresponding Author: Yih-Chang Chen, Department of Information Management, Chang Jung Christian University, Tainan 711, Taiwan. Email: cheny@mail.cjcu.edu.tw

This work is licensed under a Creative Commons Attribution-Share Alike 4.0

Document Citation: Y.-C. Chen and C.-C. Lin, “Maintaining Empathy and Relational Integrity in Digitally Mediated Social Work: Practitioner Strategies for Artificial Intelligence Integration,” Buletin Ilmiah Sarjana Teknik Elektro, vol. 7, no. 2, pp. 111-121, 2025, DOI: 10.12928/biste.v7i2.13008.

- INTRODUCTION

Social work has long been defined by its relational essence, where empathy, human connection, and interpersonal understanding are central to effective practice [1]-[8]. With the rapid advancement of Artificial Intelligence (AI) technologies — such as predictive analytics, automated case assessments, chatbots, and virtual assistants — the traditional landscape of social work is undergoing a significant transformation [9]-[19]. These innovations promise increased efficiency, improved decision-making, and optimized resource allocation. However, the introduction of AI tools also presents critical challenges to maintaining the relational aspects that are essential to social work practice, particularly empathy, trust-building, and meaningful human engagement.

The growing reliance on digital platforms, dramatically accelerated by the COVID-19 pandemic, amplifies these challenges. Practitioners now depend heavily on technology to bridge physical distances, yet substantial uncertainty remains about how digital mediation affects relational quality and client outcomes. While AI-driven tools have been shown to enhance operational tasks, they also raise concerns about impersonal interactions, the erosion of client autonomy, and the potential for algorithmic bias [20]-[32]. Although these issues are widely acknowledged in the literature, little research has examined how frontline social workers preserve relational integrity and empathy in their practice, especially in digitally mediated environments.

This study addresses this significant research gap by examining the strategies social workers use to maintain relational integrity in AI-enhanced practice settings. Specifically, the research aims to:

- Identify the AI tools commonly used in social work practice.

- Investigate the strategies that practitioners employ to preserve empathy and relational integrity when interacting with clients through AI-driven platforms.

- Analyze the organizational influences that affect the dynamics between social workers and AI technologies.

The research contributes to both theory and practice by providing a nuanced understanding of how AI can be integrated into social work without undermining its relational core. By developing and proposing a relational framework for AI integration, this study aims to guide policymakers, social work practitioners, and AI developers in fostering ethical, human-centered innovations in social services. This framework outlines the key strategies for balancing technological efficiency with empathetic engagement, ensuring that AI tools enhance, rather than replace, the relational dimensions of social work.

Ultimately, the contribution of this research is twofold: it offers a critical theoretical perspective on the intersection of AI and relational practice, and it provides practical recommendations for maintaining the human element in AI-driven social work environments. By examining both the opportunities and challenges presented by AI, this study aims to foster a more balanced, ethical, and relationally grounded approach to the future integration of technology in social work practice.

- METHODS

- Research Design

This study used a qualitative phenomenological approach informed by Heideggerian phenomenology [33]-[40], chosen to explore and articulate the lived experiences and subjective interpretations of social work practitioners working in AI-enhanced environments. Phenomenology, particularly in the Heideggerian tradition, emphasizes individuals’ existential contexts and subjective experiences, making it well suited to investigating how social workers interpret and assign meaning to their interactions in technologically mediated settings [41]-[49]. Although relying on phenomenology as the sole methodological approach limits generalizability, it was intentionally selected to capture the complex, nuanced experiences central to the study’s objectives.

Participants were selected through purposive sampling from social service agencies in three major urban areas known for pioneering the adoption of AI technologies. These urban centers were chosen because of their advanced integration and frequent use of AI in social work, which made them well suited to capturing diverse and rich experiences of human-AI interaction. A total of 24 licensed social workers from child welfare, elder care, and mental health services were recruited to capture a broad range of experiences and perspectives on AI’s role in social work. The sample size of 24 was determined by data saturation: analysis proceeded iteratively until additional interviews yielded no new substantive themes or insights. Despite the potential selection bias inherent in focusing on technologically advanced areas, the study prioritized depth and richness of qualitative data over broader representational claims.

- Participants and Sampling

Participants in this study were 24 licensed social workers purposefully selected to represent diverse professional backgrounds and thereby provide broad insight into the integration and use of AI technologies in social work. They were recruited from three distinct service domains: child welfare, elder care, and mental health services. Choosing these sectors provided a wide-ranging perspective on AI integration across critical areas of social work practice.

Purposive sampling, a non-probabilistic technique suited to qualitative research, was used to identify participants able to provide in-depth, contextually rich information aligned with the research objectives [50]-[58]. Inclusion criteria were professional licensure, active employment in an AI-integrated social service environment, and at least two years of experience using digital mediation tools. These criteria ensured that participants could offer authentic, experience-based insights into the research questions.

The decision to include 24 participants was guided by data saturation, verified through iterative analysis until additional interviews ceased to yield new substantive themes or insights. The three metropolitan regions were chosen for their advanced adoption and sophisticated integration of AI technologies in social work. This focus enabled the study to explore contexts at the forefront of technological innovation, although it introduces potential selection bias and limits broader generalizability.
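Data saturation, as described above, is a judgment reached during analysis, and the study does not state a formal stopping rule. Purely as an illustration, the sketch below encodes one common way to operationalize it: saturation is declared once a fixed number of consecutive interviews (the hypothetical `window` parameter) contribute no codes that have not been seen before. The function name, window size, and example codes are all assumptions, not the authors' procedure.

```python
from typing import Iterable, Optional, Set


def saturation_point(coded_interviews: Iterable[Set[str]], window: int = 3) -> Optional[int]:
    """Return the 1-based index of the interview at which `window` consecutive
    interviews have added no new codes, or None if that never happens.

    Illustrative stopping rule only (hypothetical); not the study's protocol.
    """
    seen: Set[str] = set()  # every code observed so far
    streak = 0              # consecutive interviews that added no new codes
    for index, codes in enumerate(coded_interviews, start=1):
        new_codes = codes - seen
        seen |= codes
        streak = 0 if new_codes else streak + 1
        if streak >= window:
            return index
    return None


# Hypothetical codes per interview, in the order the interviews were analysed.
interviews = [
    {"video_presence", "trust_building"},
    {"trust_building", "ai_override"},
    {"ai_override"},
    {"trust_building"},
    {"video_presence"},
]
print(saturation_point(interviews, window=3))  # 5
```

A larger `window` makes the rule more conservative; in practice, researchers also weigh whether late interviews deepen existing themes, which a simple count of new codes cannot capture.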

Ethical standards were maintained throughout participant recruitment, with transparency about participant rights, voluntary involvement, confidentiality, and the right to withdraw at any stage without repercussions. This approach safeguarded participants’ autonomy and supported the validity and integrity of the collected qualitative data.

- Data Collection

Data collection involved comprehensive semi-structured interviews specifically designed to elicit rich, detailed narratives about participants’ experiences, perceptions, strategies, and challenges in maintaining relational practices within digitally mediated environments [59]-[63]. Interviews were conducted via Zoom and lasted between 60 and 90 minutes, allowing sufficient time for participants to articulate nuanced insights into their professional interactions. Explicit participant consent was obtained prior to audio-recording each session, and all interviews were subsequently transcribed verbatim, ensuring accurate data representation and reliability in subsequent analyses.

Artifact analysis supplemented interview data, providing additional contextual understanding and concrete evidence of AI interactions within social work practice. Artifacts included screenshots of AI interfaces, workflow diagrams, AI-driven decision-making documentation, and specific examples of communications facilitated by AI tools. These artifacts were systematically integrated and coded alongside interview data to enhance the triangulation process, thereby increasing the robustness, credibility, and validity of the study’s findings.

Throughout data collection, the team adhered strictly to ethical protocols covering informed consent, participant confidentiality, data security, and anonymity. Data were securely stored with access restricted to authorized research team members, further safeguarding the integrity and confidentiality of the information collected.

- Proposed Analytical Framework

The analytical framework employed Braun and Clarke’s six-phase thematic analysis method to systematically identify, code, and interpret emerging themes [64][65]. These phases included: (1) data familiarization through multiple readings and preliminary notes; (2) initial code generation through systematic coding of interview transcripts and artifacts; (3) theme identification by clustering related codes into potential themes; (4) reviewing themes iteratively to assess coherence and consistency; (5) clearly defining and naming each theme based on explicit and distinct criteria; and (6) producing a detailed analytical narrative.
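Thematic analysis is interpretive, and in this study it was supported by NVivo rather than custom code. The sketch below only illustrates the bookkeeping behind phases 2 and 3: excerpts tagged with initial codes are collated under candidate themes, recording which participants support each theme. The `Excerpt` class, the code labels, and the code-to-theme mapping are hypothetical; only the theme names are taken from the findings.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Excerpt:
    participant: str                              # anonymised ID, e.g. "P01" (hypothetical)
    text: str                                     # transcript or artifact excerpt
    codes: Set[str] = field(default_factory=set)  # phase 2: initial codes


def collate_themes(excerpts: List[Excerpt], code_to_theme: Dict[str, str]) -> Dict[str, Set[str]]:
    """Phase 3 bookkeeping: map each candidate theme to the participants whose
    coded excerpts support it. Codes not yet assigned to a theme are kept under
    "unassigned" so they remain visible during theme review (phase 4)."""
    support: Dict[str, Set[str]] = defaultdict(set)
    for excerpt in excerpts:
        for code in excerpt.codes:
            theme = code_to_theme.get(code, "unassigned")
            support[theme].add(excerpt.participant)
    return dict(support)


# Hypothetical coded excerpts and a provisional code-to-theme mapping.
excerpts = [
    Excerpt("P01", "(excerpt text)", {"video_presence"}),
    Excerpt("P02", "(excerpt text)", {"video_presence", "colleague_cross_check"}),
    Excerpt("P03", "(excerpt text)", {"confidentiality_concern"}),
    Excerpt("P03", "(excerpt text)", {"scheduling_relief"}),
]
code_to_theme = {
    "video_presence": "Reframing empathy",
    "colleague_cross_check": "Ethical tensions",
    "confidentiality_concern": "Ethical tensions",
}
themes = collate_themes(excerpts, code_to_theme)
print(sorted(themes["Ethical tensions"]))  # ['P02', 'P03']
```

Keeping unassigned codes visible, rather than dropping them, mirrors the iterative review in phase 4, where codes may be merged, split, or moved between themes.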

NVivo qualitative software facilitated this rigorous analytical process, enhancing depth and transparency by enabling detailed documentation, systematic coding comparisons, and cross-validation across multiple data sources. The framework specifically accounted for interactional complexities of AI-mediated social work practices, including initial engagement, AI-based assessments, practitioner-client digital interactions, and human-driven adjustments or overrides. Inter-coder reliability was maintained through regular research team meetings, independent coding verifications, and collaborative consensus-building, ensuring robust and consistent analytical outcomes.
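The study describes inter-coder reliability as maintained through meetings, independent verification, and consensus rather than by reporting an agreement statistic. If a team wanted to quantify agreement between two coders who independently labelled the same segments, Cohen's kappa is one widely used option. The sketch below uses that swapped-in statistic, not a measure the authors report, and the segment labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Cohen's kappa for two coders who each assigned one label per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Observed agreement: share of segments given the same label by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal label proportions.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical label for every segment
    return (observed - expected) / (1 - expected)


# Hypothetical theme labels assigned to six transcript segments.
coder_a = ["empathy", "empathy", "ai_role", "ai_role", "ethics", "empathy"]
coder_b = ["empathy", "ai_role", "ai_role", "ai_role", "ethics", "empathy"]
print(round(cohens_kappa(coder_a, coder_b), 3))  # 0.739
```

Here the coders agree on five of six segments (observed agreement 0.833), but kappa is lower because some of that agreement would be expected by chance given how often each coder used each label.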

- System Flow Diagram

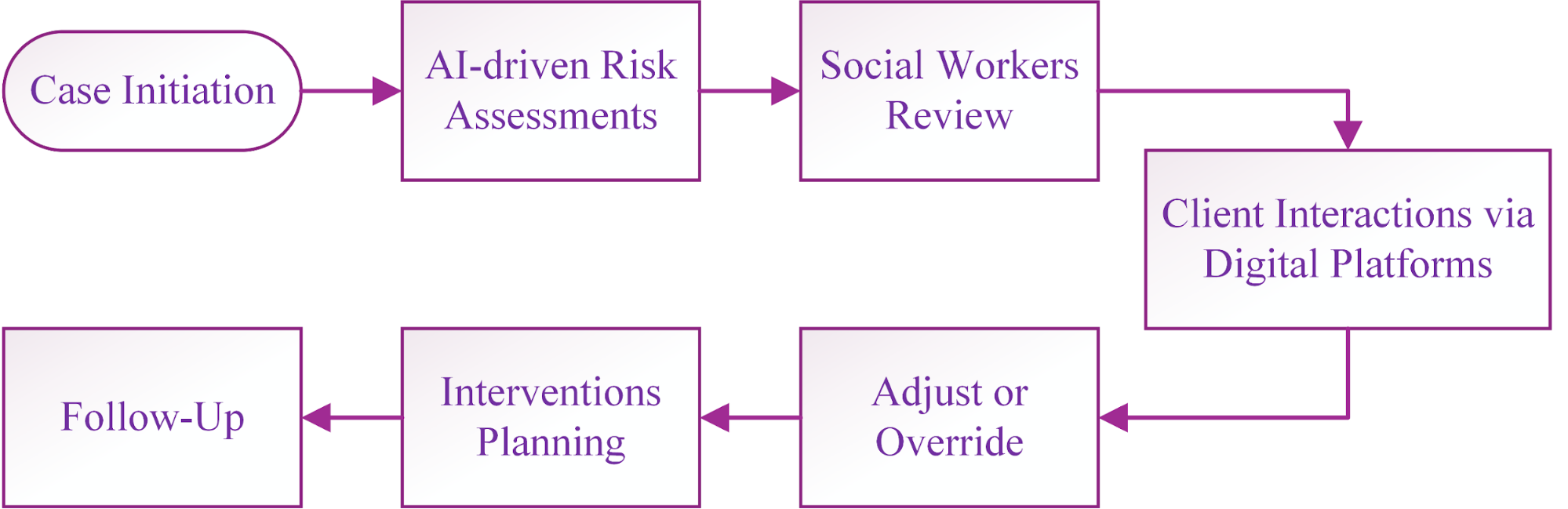

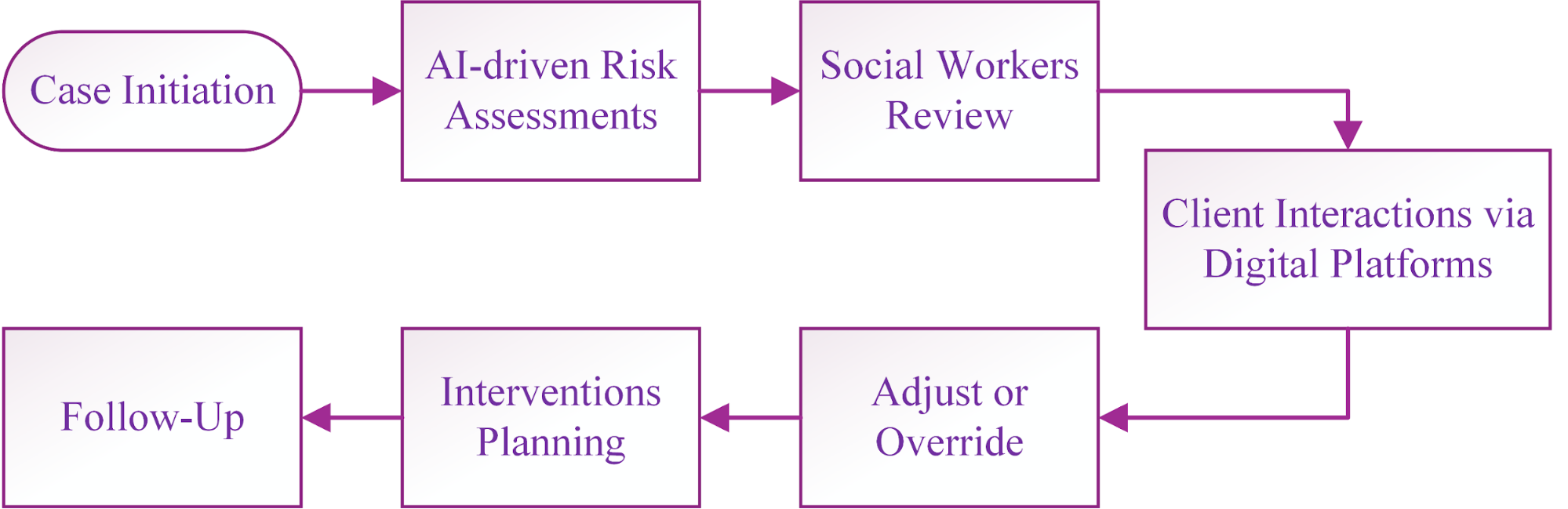

The system flow diagram (Figure 1) represents the iterative and dynamic nature of AI-mediated social work practice, emphasizing human oversight and judgment throughout the process. It begins with case initiation, where social workers collect initial client information and concerns. AI-driven assessments and preliminary analyses then inform practitioners about potential client needs and intervention priorities. Critically, social workers review this automated analysis through direct, empathy-driven interactions with clients via digital platforms. Practitioners retain ultimate decision-making authority, adjusting or overriding AI recommendations as necessary. Finally, the process involves planning and executing tailored interventions, followed by continuous follow-up and reassessment. This iterative depiction is intended to capture the complexity of real-world social work decision-making and highlights the indispensable role of human relational competencies alongside technological tools.

Figure 1. System Flow Diagram
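The flow in Figure 1 can be read as a cycle of stages with a human gate before any intervention. The sketch below is a hypothetical model, not software used in the study: stage names paraphrase the figure description, and the `decide` function encodes the stated principle that practitioners retain final authority, so an AI recommendation never becomes a plan without practitioner review.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


class Stage(Enum):
    CASE_INITIATION = auto()      # practitioner gathers client information and concerns
    AI_ASSESSMENT = auto()        # AI-driven preliminary analysis of needs and priorities
    PRACTITIONER_REVIEW = auto()  # empathy-driven digital interaction; accept, adjust, or override
    INTERVENTION = auto()         # plan and execute the tailored intervention
    FOLLOW_UP = auto()            # follow-up and reassessment


# Forward path through the stages; follow-up loops back to a fresh assessment.
NEXT_STAGE = {
    Stage.CASE_INITIATION: Stage.AI_ASSESSMENT,
    Stage.AI_ASSESSMENT: Stage.PRACTITIONER_REVIEW,
    Stage.PRACTITIONER_REVIEW: Stage.INTERVENTION,
    Stage.INTERVENTION: Stage.FOLLOW_UP,
    Stage.FOLLOW_UP: Stage.AI_ASSESSMENT,
}


@dataclass(frozen=True)
class Decision:
    ai_recommendation: str
    final_plan: str  # always the practitioner's plan

    @property
    def outcome(self) -> str:
        return "accepted" if self.final_plan == self.ai_recommendation else "modified"


def decide(ai_recommendation: str, practitioner_plan: Optional[str]) -> Decision:
    """Human gate: refuse to proceed until a practitioner has reviewed the case."""
    if practitioner_plan is None:
        raise ValueError("Practitioner review is required before intervention.")
    return Decision(ai_recommendation, practitioner_plan)


def walk(start: Stage, steps: int) -> List[Stage]:
    """Return the sequence of stages visited from `start` over `steps` transitions."""
    path = [start]
    for _ in range(steps):
        path.append(NEXT_STAGE[path[-1]])
    return path


print([s.name for s in walk(Stage.CASE_INITIATION, 5)])
print(decide("in-person home visit", "video check-in").outcome)  # modified
```

Modelling follow-up as a transition back to assessment, rather than as an end state, reflects the iterative reassessment the figure describes.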

- Tool Utilization

NVivo qualitative analysis software was employed comprehensively throughout this study to manage, code, and systematically analyze the extensive qualitative data derived from both interviews and artifact reviews. The software provided significant analytical advantages beyond mere organizational functions, facilitating deeper interpretative insights and enhancing methodological rigor [66]-[71]. Specifically, NVivo enabled sophisticated cross-referencing of data sources, pattern recognition, thematic consistency checks, and rigorous tracking of analytical decisions. These capabilities ensured accuracy, consistency, and transparency in thematic identification and facilitated inter-coder reliability through clearly documented analytical pathways.

Zoom video conferencing served as the primary platform for conducting and recording interviews. This choice allowed for secure, high-quality, real-time communication with participants located across different geographical regions. Interviews were digitally recorded via Zoom’s built-in recording function, producing reliable audio quality suitable for accurate transcription. Transcription software complemented Zoom recordings, efficiently converting spoken words into verbatim text, thereby increasing the precision of subsequent analyses.

Ethical considerations related to data privacy, confidentiality, and security were paramount throughout the study. All digital tools, including NVivo and Zoom, were utilized in strict compliance with institutional ethical guidelines, and data were securely stored with restricted access to authorized personnel only. These rigorous practices ensured robust, ethically sound, and reliable research outcomes.

- RESULT AND DISCUSSION

This study identified three core themes integral to understanding relational practices within digitally mediated social work environments: (1) reframing empathy in digital interactions, (2) AI as both a supportive partner and a challenging adversary, and (3) ethical tensions and role strain. These themes illuminate critical dimensions of social workers' lived experiences with AI technologies.

- Key Findings

- Theme 1: Reframing Empathy in Digital Interactions

Participants consistently emphasized the importance of reframing empathy in digitally mediated interactions. Social workers described several innovative digital strategies designed to enhance emotional connectivity, including structured video conferencing, tailored AI-scripted communication, and visual aids that foster emotional understanding and trust [72]-[74].

Table 1 presents participant evaluations of the digital empathy strategies. Scheduled video calls received the highest effectiveness rating (mean = 4.5, SD = 0.5, n = 24), suggesting that participants view face-to-face visual interaction as enhancing emotional understanding and building stronger practitioner-client relationships than text-based methods. Personalized AI scripts received the lowest rating (mean = 3.7, SD = 0.8), reflecting participants’ concern that such scripts lack nuanced emotional responsiveness without human oversight. Visual aids (mean = 3.9, SD = 0.6) were seen as improving client engagement through clarity and transparency; however, some practitioners cautioned that professional interpretation is needed to prevent oversimplification of complex client issues.

Table 1. Participant Ratings of Digital Empathy Strategies

| Digital Empathy Strategy | Reported Effectiveness, Mean (SD), 1–5 scale | Key Benefits and Limitations |
|---|---|---|
| Scheduled video calls | 4.5 (0.5) | Enhanced relational depth; supports nuanced emotional interaction. Requires stable technology infrastructure. |
| Personalized AI scripts | 3.7 (0.8) | Efficient for routine communication; risks losing emotional nuance without human oversight. |
| Visual aids (client charts) | 3.9 (0.6) | Clarifies complex information; fosters transparency and client trust. Requires professional interpretation to avoid oversimplifying complex client issues. |
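The values in Table 1 are descriptive means and standard deviations of 1–5 ratings. The sketch below shows how such a summary can be computed with Python's standard `statistics` module. The ratings are hypothetical, and the paper does not state whether its SD is the sample or population version; the code assumes the sample SD (`statistics.stdev`). Because no inferential test is reported, differences between strategy means are best read as descriptive.

```python
import statistics
from typing import Sequence, Tuple


def summarize_ratings(ratings: Sequence[int]) -> Tuple[float, float, int]:
    """Return (mean, sample SD, n) for 1-5 effectiveness ratings, rounded to one decimal."""
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("Ratings must lie on the 1-5 scale.")
    if len(ratings) < 2:
        raise ValueError("At least two ratings are needed for a sample SD.")
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)  # sample SD (n - 1 denominator); an assumption
    return round(mean, 1), round(sd, 1), len(ratings)


# Hypothetical ratings from four practitioners for one strategy.
print(summarize_ratings([5, 4, 5, 4]))  # (4.5, 0.6, 4)
```

With the population SD (`statistics.pstdev`), the same four ratings would give 0.5 rather than 0.6, which is why the choice matters when groups are small.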

- Theme 2: AI as Partner and Adversary

Participants perceived AI as both beneficial and problematic. While acknowledging substantial advantages in operational efficiency, accuracy in initial case assessments, and improved resource management, practitioners expressed significant concerns about AI potentially undermining professional judgment and autonomy.

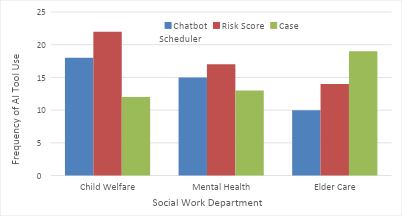

Figure 2 illustrates the frequency of AI tool use across three social work departments: Child Welfare, Mental Health, and Elder Care. In Child Welfare, the AI-driven Risk Score tool was used most frequently (n = 22), reflecting reliance on predictive analytics to assess risks rapidly and prioritize interventions. Mental Health practitioners frequently used Chatbots (n = 18), which support ongoing client interaction and immediate engagement. In Elder Care, Case Schedulers were the most frequently adopted tool (n = 20), reflecting the need for organized, automated management of routine tasks and appointment scheduling. These departmental differences point to distinct practical priorities and to varied reliance on AI tools across social work practice domains.

Figure 2. Frequency of AI Tool Use by Department

- Theme 3: Ethical Tensions and Role Strain

Ethical considerations featured prominently among participant concerns. Practitioners reported significant anxieties about depersonalization, transparency in AI decision-making, and potential breaches of client confidentiality. While prior literature has identified similar ethical risks [20]-[22], participants in this study also described specific adaptive strategies to mitigate these issues, such as double-validation procedures (i.e., consulting a colleague on AI recommendations) and regular client check-ins. Social workers emphasized that although AI tools can increase efficiency, careful management is required to preserve the integrity of relational practices. For instance, one participant noted:

“When AI systems suggest an intervention, I always cross-verify with a colleague before finalizing decisions. This double-validation ensures that we’re making ethically sound and empathetically grounded choices.”
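The double-validation procedure participants described can be expressed as a simple gating rule. The sketch below is hypothetical (the class, function, and reviewer names are invented for illustration): an AI-suggested intervention may be finalized only once the assigned practitioner and at least one other colleague have both approved it.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class AIRecommendation:
    case_id: str
    suggestion: str
    approvals: Set[str] = field(default_factory=set)  # IDs of reviewers who signed off

    def approve(self, reviewer: str) -> None:
        self.approvals.add(reviewer)


def can_finalize(rec: AIRecommendation, practitioner: str) -> bool:
    """Double validation: the assigned practitioner and at least one
    different colleague must both have approved the AI suggestion."""
    colleagues = rec.approvals - {practitioner}
    return practitioner in rec.approvals and len(colleagues) >= 1


rec = AIRecommendation("case-001", "refer to counseling")
rec.approve("sw_a")                # assigned practitioner reviews first
print(can_finalize(rec, "sw_a"))   # False: no colleague has cross-verified yet
rec.approve("sw_b")                # colleague consultation
print(can_finalize(rec, "sw_a"))   # True
```

Requiring the practitioner's own approval, not only a colleague's, keeps responsibility for the decision with the worker who holds the client relationship.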

- Comparative Insights

This study provides a more nuanced understanding of AI’s role in social work, specifically regarding the human-AI relationship. Comparisons with earlier research [20]-[22] show that, whereas previous studies have largely focused on the ethical risks and operational limitations of AI, this study highlights adaptive, human-centered strategies employed by social workers. These strategies, such as integrating AI tools without sacrificing empathy, present a dynamic model in which AI functions both as a partner and as a tool for professional enhancement.

Unlike studies that express skepticism towards AI (e.g., Afrouz & Lucas [2]), participants in this study took a proactive approach, engaging actively with AI tools (as reflected in the usage patterns in Figure 2) while maintaining critical oversight. They emphasized human judgment in interpreting AI recommendations and handling algorithmic limitations, a more balanced view than the cautionary tone of prior research.

Additionally, this research extends discussions on algorithmic transparency by showing how social workers leverage visual aids and client-centered communication to ensure AI decisions are understandable and actionable. By providing context and clarity, social workers bridge the gap between AI-mediated recommendations and the relational aspects of practice, enhancing trust and transparency.

Furthermore, the findings align with and extend Steyvers and Kumar’s work [11], which highlighted the importance of AI transparency. Our study adds depth by showing operational strategies used by social workers to incorporate client feedback and collaborative decision-making, thereby enhancing the ecological validity of AI applications.

Overall, this study illustrates how social workers creatively navigate AI integration, maintaining a deliberate balance between technological efficiency and relational depth. These findings contribute to an evolving discourse on the role of AI in relationally-driven professions, distinguishing current practices from the more cautious or critical stances taken in earlier studies.

- Strengths and Limitations

The methodological strengths of this study include its phenomenological approach, capturing detailed lived experiences from practitioners. The triangulation of interview data and artifact analysis — examining AI interface screenshots, workflow documentation, and AI decision-making protocols — provided additional context and credibility. However, limitations include potential biases stemming from self-reported effectiveness measures and purposive sampling from technologically advanced urban regions, which may limit generalizability to less-equipped settings.

Additionally, while thematic saturation was ensured through iterative analysis and cross-checking among researchers, the qualitative nature of the study inherently poses risks of interpretative researcher bias. Future studies should incorporate quantitative validation to complement qualitative insights and broaden sampling across diverse geographic and socioeconomic contexts to enhance representativeness and applicability.

- Conclusion and Transition

In conclusion, the findings underscore the complexity of integrating AI into relationally driven professional contexts and illustrate practical approaches social workers utilize to maintain empathy and ethical standards amidst technological mediation. Future policy development and research should prioritize clear guidelines for ethical AI use, interdisciplinary collaboration, and comprehensive training programs that equip social workers with essential skills for navigating human-AI interactions. By emphasizing the preservation of relational integrity in the era of digital transformation, this study offers a valuable foundation for the advancement of AI-enhanced practices in social work.

- CONCLUSIONS

- Summary of Contributions

This research makes significant contributions to the understanding of the intersection between relational practices and the integration of artificial intelligence (AI) in social work. It adds to the theoretical and practical knowledge in the field by addressing the complexities of maintaining relational integrity and empathy in AI-enhanced social work environments.

First, the study identifies practitioner-driven strategies to preserve empathy and relational integrity within AI-mediated settings. These practical strategies, which include tailored AI scripts, structured video calls, and the use of visual aids, fill a critical gap in the existing literature, which predominantly focuses on technological capabilities and ethical concerns but rarely examines day-to-day relational adaptations.

Second, the research introduces a unique relational framework for integrating AI technologies into social work practice. This framework emphasizes the need to balance technological efficiency with the core relational aspects of social work. It ensures that AI tools enhance, rather than detract from, empathetic and client-centered practices by highlighting the importance of human oversight and professional judgment.

Third, the study offers valuable perspectives from practitioners and their adaptive strategies. These findings provide actionable insights for policymakers, technology developers, and educators within the social work field. The recommendations from this research underline the importance of maintaining relational ethics, human judgment, and professional oversight when deploying AI systems.

Collectively, these contributions offer a deeper understanding of the dynamic relationship between human practitioners and AI, contributing to more informed, ethically grounded, and relationally enriched approaches to AI integration in social services.

- Policy and Practice Implications

The findings of this research hold significant implications for both policy and practice in social work:

- Establish Clear Guidelines and Standards: Policymakers should develop clear and detailed guidelines for the ethical use of AI in social work. These guidelines must address the potential for algorithmic bias, depersonalization, and privacy issues, ensuring transparency, accountability, and respect for client autonomy in AI-mediated decision-making. Given the variations in legal, cultural, and institutional frameworks across different regions, regulatory approaches should be flexible and adaptable to local contexts.

- Enhance Training and Professional Development: Social work organizations must prioritize continuous training and professional development programs that focus on digital empathy, relational strategies, and ethical oversight. These programs will empower social workers to integrate technology without compromising the core relational values that are essential to the profession.

- Promote Collaboration Between AI Developers and Social Work Agencies: Collaboration between social work agencies and AI developers should be encouraged to design human-centered AI technologies. Co-design processes that actively involve end-users—social workers—can help ensure that AI tools complement practitioners’ judgment, support their relational capabilities, and improve the quality of service delivery.

- Integrate Technological Literacy into Social Work Education: Social work education programs should incorporate comprehensive modules on technological literacy, focusing on the ethical, relational, and empathetic use of AI tools. Educators must ensure that future social workers are not only equipped with the necessary technical skills but also understand the ethical implications of technology in practice.

These implications will foster ethical, human-centered AI integration and help social workers navigate the complexities of technology in their practice while maintaining professional and relational standards.

- Future Research Directions

Future research should focus on several key areas to build upon the findings of this study and further enhance the integration of AI in social work practice:

- Client Perspectives and Outcomes: Quantitative studies examining client perspectives on AI-mediated interventions are essential. Such research would provide empirical evidence regarding the effectiveness and acceptance of AI tools from the client’s point of view, thus complementing the insights provided by practitioners in this study. Research questions could include: How do clients perceive AI’s role in their social work interactions?

- Cross-Cultural Comparative Research: Future studies should investigate how different cultural contexts affect the adaptation and acceptance of AI in social services. For example, in societies where family-centered values dominate, the relational dynamics of AI-driven decision-making may differ from those in individualistic cultures. Such research could inform the design of culturally sensitive AI systems. Research questions could include: What cultural factors influence AI acceptance in social work, and how can AI tools be adapted to reflect cultural nuances?

- Longitudinal Studies on Social Worker Well-Being: Long-term studies should examine the impact of sustained AI integration on social worker resilience, professional identity, and burnout. Research questions could include: How does prolonged use of AI tools impact social workers’ professional satisfaction and mental health?

- Collaborative Interdisciplinary Research: Interdisciplinary collaborations between social work practitioners, AI developers, ethicists, and policymakers will be critical for advancing the development of human-centered AI applications. Joint efforts can drive the creation of AI systems that improve relational practices and maintain ethical standards in social work.

These research directions aim to address current knowledge gaps and provide actionable insights into the long-term impact of AI on social work practice.

- Final Synthesizing Statement

In conclusion, this study emphasizes the critical role of maintaining human empathy in the digital era of social work. While AI technologies can significantly enhance operational efficiency and decision-making, they should never replace the human relational core of social work. By identifying practical strategies for maintaining empathy, promoting human-AI collaboration, and addressing ethical tensions, this research offers a comprehensive framework for integrating AI into social work practice. The study’s findings contribute to the ongoing discourse on AI in human-centered professions and provide valuable insights for the future development of AI tools in social services.

REFERENCES

- J. van Dijke, I. van Nistelrooij, P. Bos, and J. Duyndam, “Towards a relational conceptualization of empathy,” Nursing Philosophy, vol. 21, no. 3, p. e12297, 2020, https://doi.org/10.1111/nup.12297.

- R. Afrouz and J. Lucas, “A systematic review of technology-mediated social work practice: Benefits, uncertainties, and future directions,” Journal of Social Work, vol. 23, no. 5, pp. 953–974, 2023, https://doi.org/10.1177/14680173231165926.

- G. Pei, H. Li, Y. Lu, Y. Wang, S. Hua, and T. Li, “Affective Computing: Recent Advances, Challenges, and Future Trends,” Intelligent Computing, vol. 3, Art. no. 0076, 2024, https://doi.org/10.34133/icomputing.0076.

- B. Van Rhyn, A. Barwick, and M. Donelly, “Embodiment as an instrument for empathy in social work,” Australian Social Work, vol. 74, no. 2, pp. 146–158, 2021, https://doi.org/10.1080/0312407X.2020.1839112.

- L. Mitchinson, A. Dowrick, C. Buck, K. Hoernke, S. Martin, S. Vanderslott, H. Robinson, F. Rankl, L. Manby, S. Lewis-Jackson, and C. Vindrola-Padros, “Missing the human connection: A rapid appraisal of healthcare workers’ perceptions and experiences of providing palliative care during the COVID-19 pandemic,” Palliative Medicine, vol. 35, no. 5, pp. 852–861, 2021, https://doi.org/10.1177/02692163211004228.

- K. Nordesjö, G. Scaramuzzino, and R. Ulmestig, “The social worker-client relationship in the digital era: a configurative literature review,” European Journal of Social Work, vol. 25, no. 2, pp. 303–315, 2021, https://doi.org/10.1080/13691457.2021.1964445.

- N. Ondrejková and J. Halamová, “Prevalence of compassion fatigue among helping professions and relationship to compassion for others, self‐compassion and self‐criticism,” Health and Social Care in the Community, vol. 30, no. 5, pp. 1680–1694, 2022, https://doi.org/10.1111/hsc.13741.

- C. Guidi and C. Traversa, “Empathy in patient care: from ‘Clinical Empathy’ to ‘Empathic Concern’,” Med. Health Care and Philos., vol. 24, pp. 573–585, 2021, https://doi.org/10.1007/s11019-021-10033-4.

- O. Keyes and J. Austin, “Feeling fixes: Mess and emotion in algorithmic audits,” Big Data & Society, vol. 9, no. 2, 2022, https://doi.org/10.1177/20539517221113772.

- F. Reamer, “Artificial intelligence in social work: Emerging ethical issues,” Int. J. Social Work Values Ethics, vol. 20, no. 2, pp. 52–71, 2023, https://doi.org/10.55521/10-020-205.

- M. Steyvers and A. Kumar, “Three challenges for AI-assisted decision-making,” Perspectives on Psychological Science, vol. 19, no. 5, pp. 722–734, 2023, https://doi.org/10.1177/17456916231181102.

- M. Nuwasiima, M. P. Ahonon, and C. Kadiri, “The role of artificial intelligence (AI) and machine learning in social work practice,” World Journal of Advanced Research and Reviews, vol. 24, no. 1, pp. 80–97, 2024, https://doi.org/10.30574/wjarr.2024.24.1.2998.

- S. Pink, H. Ferguson, and L. Kelly, “Digital social work: Conceptualising a hybrid anticipatory practice,” Qualitative Social Work, vol. 21, no. 2, pp. 413–430, 2021, https://doi.org/10.1177/14733250211003647.

- E. Weber-Guskar, “How to feel about emotionalized artificial intelligence? When robot pets, holograms, and chatbots become affective partners,” Ethics and Information Technology, vol. 23, pp. 601–610, 2021, https://doi.org/10.1007/s10676-021-09598-8.

- M. Seniutis, V. Gruzauskas, A. Lileikiene, and V. Navickas, “Conceptual framework for ethical artificial intelligence development in social services sector,” Human Technology, vol. 20, pp. 6–24, 2024, https://doi.org/10.14254/1795-6889.2024.20-1.1.

- A. Noonia, R. Beg, A. Patidar, B. Bawaskar, S. Sharma, and H. Rawat, “Chatbot vs Intelligent Virtual Assistance (IVA),” in Conversational Artificial Intelligence, pp. 655–673, 2024, https://doi.org/10.1002/9781394200801.ch36.

- M. Mekni, “An Artificial Intelligence Based Virtual Assistant Using Conversational Agents,” Journal of Software Engineering and Applications, vol. 14, pp. 455-473, 2021, https://doi.org/10.4236/jsea.2021.149027.

- A. Aggarwal, C. C. Tam, D. Wu, X. Li, and S. Qiao, “Artificial intelligence–based chatbots for promoting health behavioral changes: systematic review,” Journal of Medical Internet Research (JMIR), vol. 25, p. e40789, 2023, https://doi.org/10.2196/40789.

- A. Casheekar, A. Lahiri, K. Rath, K. S. Prabhakar, and K. Srinivasan, “A contemporary review on chatbots, AI-powered virtual conversational agents, ChatGPT: Applications, open challenges and future research directions,” Computer Science Review, vol. 52, p. 100632, 2024, https://doi.org/10.1016/j.cosrev.2024.100632.

- F. Osasona, O. Amoo, A. Atadoga, T. Abrahams, O. Farayola, and B. Ayinla, “Reviewing the ethical implications of AI in decision making processes,” International Journal of Management & Entrepreneurship Research, vol. 6, no. 2, pp. 322–335, 2024, https://doi.org/10.51594/ijmer.v6i2.773.

- H. Hah and D. Goldin, “How clinicians perceive artificial intelligence–assisted technologies in diagnostic decision making: Mixed methods approach,” Journal of Medical Internet Research (JMIR), vol. 23, no. 12, p. e33540, 2021, https://doi.org/10.2196/33540.

- I. D. Raji, A. Smart, R. N. White, M. Mitchell, T. Gebru, B. Hutchinson, J. Smith-Loud, D. Theron, and P. Barnes, “Closing the AI accountability gap: Defining an end-to-end framework for internal algorithmic auditing,” in Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT ’20), pp. 33–44, 2020, https://doi.org/10.1145/3351095.3372873.

- D. Saxena, C. Repaci, M. D. Sage, and S. Guha, “How to Train a (Bad) Algorithmic Caseworker: A Quantitative Deconstruction of Risk Assessments in Child Welfare,” in Extended Abstracts of the 2022 CHI Conference on Human Factors in Computing Systems (CHI EA’22), pp. 1–7, 2022, https://doi.org/10.1145/3491101.3519771.

- A. Akingbola, O. Adeleke, A. Idris, O. Adewole, and A. Adegbesan, “Artificial Intelligence and the Dehumanization of Patient Care,” Journal of Medicine, Surgery, and Public Health, vol. 3, Art. no. 100138, 2024, https://doi.org/10.1016/j.glmedi.2024.100138.

- A. Sabater, B. López, R. Campdepadrós, and C. Sánchez, “Participatory Action Research for AI in Social Services: An Example of Local Practices from Catalonia,” in Participatory Artificial Intelligence in Public Social Services: From Bias to Fairness in Assessing Beneficiaries, pp. 79–96, 2025, https://doi.org/10.1007/978-3-031-71678-2_4.

- N. Mehrabi, F. Morstatter, N. Saxena, K. Lerman, and A. Galstyan, “A Survey on Bias and Fairness in Machine Learning,” ACM Computing Surveys, vol. 54, no. 6, pp. 1–35, 2022, https://doi.org/10.1145/3457607.

- L. Savolainen and M. Ruckenstein, “Dimensions of autonomy in human–algorithm relations,” New Media & Society, vol. 26, no. 6, pp. 3472–3490, 2024, https://doi.org/10.1177/14614448221100802.

- K. R. McKee, X. Bai, and S. T. Fiske, “Humans perceive warmth and competence in artificial intelligence,” iScience, vol. 26, no. 8, p. 107256, 2023, https://doi.org/10.1016/j.isci.2023.107256.

- D. Grewal, A. Guha, C. B. Satornino, and E. B. Schweiger, “Artificial intelligence: The light and the darkness,” Journal of Business Research, vol. 136, pp. 229–236, 2021, https://doi.org/10.1016/j.jbusres.2021.07.043.

- P. Formosa, W. Rogers, Y. Griep, S. Bankins, and D. Richards, “Medical AI and human dignity: Contrasting perceptions of human and artificially intelligent (AI) decision making in diagnostic and medical resource allocation contexts,” Computers in Human Behavior, vol. 133, p. 107296, 2022, https://doi.org/10.1016/j.chb.2022.107296.

- O. Brown et al., “Theory-Driven Perspectives on Generative Artificial Intelligence in Business and Management,” British Journal of Management, vol. 35, no. 1, pp. 3–23, 2024, https://doi.org/10.1111/1467-8551.12788.

- M. Dorotic, E. Stagno, and L. Warlop, “AI on the street: Context-dependent responses to artificial intelligence,” Int. J. Res. Mark., vol. 41, no. 1, pp. 113–137, 2024, https://doi.org/10.1016/j.ijresmar.2023.08.010.

- J. Emiliussen, S. Engelsen, R. Christiansen, and S. H. Klausen, “We are all in it!: Phenomenological Qualitative Research and Embeddedness,” International Journal of Qualitative Methods, vol. 20, 2021, https://doi.org/10.1177/1609406921995304.

- H. Williams, “The Meaning of ‘Phenomenology’: Qualitative and Philosophical Phenomenological Research Methods,” The Qual. Rep., vol. 26, no. 2, pp. 366–385, 2021, https://doi.org/10.46743/2160-3715/2021.4587.

- M. Englander and J. Morley, “Phenomenological psychology and qualitative research,” Phenomenol. Cogn. Sci., vol. 22, pp. 25–53, 2023, https://doi.org/10.1007/s11097-021-09781-8.

- S. Monaro, J. Gullick, and S. West, “Qualitative data analysis for health research: A step-by-step example of phenomenological interpretation,” The Qualitative Report, vol. 27, no. 4, pp. 1040–1057, 2022, https://doi.org/10.46743/2160-3715/2022.5249.

- L. Jedličková, M. Müller, D. Halová, and T. Cserge, “Combining interpretative phenomenological analysis and existential hermeneutic phenomenology to reveal critical moments of managerial lived experience: a methodological guide,” Qual. Res. Organ. Manag., vol. 17, no. 1, pp. 84–102, 2022, https://doi.org/10.1108/QROM-09-2020-2024.

- I. A. Urcia, “Comparisons of adaptations in grounded theory and phenomenology: Selecting the specific qualitative research methodology,” International Journal of Qualitative Methods, vol. 20, 2021, https://doi.org/10.1177/16094069211045474.

- J. Pilarska, “The Constructivist Paradigm and Phenomenological Qualitative Research Design,” in Research Paradigm Considerations for Emerging Scholars, pp. 64–83, 2021, https://doi.org/10.21832/9781845418281-008.

- H. G. Larsen and P. Adu, The Theoretical Framework in Phenomenological Research: Development and Application. Routledge, 2021, https://doi.org/10.4324/9781003084259.

- T. Watanabe, “How to Develop Phenomenology as Psychology: from Description to Elucidation, Exemplified Based on a Study of Dream Analysis,” Integrative Psychological and Behavioral Science, vol. 56, pp. 964–980, 2022, https://doi.org/10.1007/s12124-020-09581-w.

- E. D. S. Andrade and V. E. Cury, “Investigating the Experience of users in a Coexistence Community Center from the perspective of Humanistic Psychology and Phenomenology,” Phenomenological Studies, vol. 27, no. 1, pp. 25–36, 2021, https://doi.org/10.18065/2021v27n1.3.

- L. Law, “Digital creativity and ecolinguistics: An analysis of Final Fantasy VII Remake Intergrade,” Journal of Language and Pop Culture, vol. 1, no. 1, pp. 73–108, 2025, https://doi.org/10.1075/jlpop.24012.law.

- R. Y. A. Hambali, “Being in the digital world: A Heideggerian perspective,” Jaqfi: Jurnal Aqidah dan Filsafat Islam, vol. 8, no. 2, pp. 274–287, 2023, https://doi.org/10.15575/jaqfi.v8i2.30889.

- R. C. Scharff, “On making phenomenologies of technology more phenomenological,” Philos. Technol., vol. 35, p. 62, 2022, https://doi.org/10.1007/s13347-022-00544-0.

- S. Vidolov, “Uncovering the affective affordances of videoconference technologies,” Inf. Technol. People, vol. 35, no. 6, pp. 1782–1803, 2022, https://doi.org/10.1108/ITP-04-2021-0329.

- A. A. Alhazmi and A. Kaufmann, “Phenomenological qualitative methods applied to the analysis of cross-cultural experience in novel educational social contexts,” Frontiers in Psychology, vol. 13, p. 785134, 2022, https://doi.org/10.3389/fpsyg.2022.785134.

- B. Pula, “Does Phenomenology (Still) Matter? Three Phenomenological Traditions and Sociological Theory,” International Journal of Politics, Culture, and Society, vol. 35, pp. 411–431, 2022, https://doi.org/10.1007/s10767-021-09404-9.

- A. Rapp and A. Boldi, “Exploring the lived experience of behavior change technologies: Towards an existential model of behavior change for HCI,” ACM Transactions on Computer-Human Interaction (TOCHI), vol. 30, no. 6, Art. no. 81, pp. 1–50, 2023, https://doi.org/10.1145/3603497.

- M. Memon, T. Ramayah, H. Ting, and J.-H. Cheah, “Purposive Sampling: A Review and Guidelines for Quantitative Research,” Journal of Applied Structural Equation Modeling, vol. 9, pp. 1–23, 2025, https://doi.org/10.47263/JASEM.9(1)01.

- R. S. Robinson, “Purposive Sampling,” in Encyclopedia of Quality of Life and Well-Being Research, pp. 5645–5647, 2024, https://doi.org/10.1007/978-3-031-17299-1_2337.

- M. Ahmad and S. Wilkins, “Purposive sampling in qualitative research: a framework for the entire journey,” Quality & Quantity, vol. 58, no. 2, 2024, https://doi.org/10.1007/s11135-024-02022-5.

- F. Nyimbili and L. Nyimbili, “Types of purposive sampling techniques with their examples and application in qualitative research studies,” Brit. J. Multidiscip. Adv. Stud., vol. 5, no. 1, pp. 90–99, 2024, https://doi.org/10.37745/bjmas.2022.0419.

- D. Hossan, Z. Dato Mansor, and N. S. Jaharuddin, “Research population and sampling in quantitative study,” Int. J. Bus. Technopreneurship (IJBT), vol. 13, no. 3, pp. 209–222, 2023, https://doi.org/10.58915/ijbt.v13i3.263.

- C. Andrade, “The inconvenient truth about convenience and purposive samples,” Indian Journal of Psychological Medicine, vol. 43, no. 1, pp. 86–88, 2020, https://doi.org/10.1177/0253717620977000.

- K. Leighton, S. Kardong-Edgren, T. Schneidereith, and C. Foisy-Doll, “Using social media and snowball sampling as an alternative recruitment strategy for research,” Clinical Simulation in Nursing, vol. 55, pp. 37–42, 2021, https://doi.org/10.1016/j.ecns.2021.03.006.

- S. K. Ahmed, “How to choose a sampling technique and determine sample size for research: A simplified guide for researchers,” Oral Oncology Reports (OOR), vol. 12, p. 100662, 2024, https://doi.org/10.1016/j.oor.2024.100662.

- H. Douglas, “Sampling techniques for qualitative research,” in Principles of Social Research Methodology, pp. 367–380, 2022, https://doi.org/10.1007/978-981-19-5441-2_29.

- O. A. Adeoye‐Olatunde and N. L. Olenik, “Research and scholarly methods: Semi‐structured interviews,” Journal of the American College of Clinical Pharmacy, vol. 4, no. 10, pp. 1358–1367, 2021, https://doi.org/10.1002/jac5.1441.

- E. Knott, A. H. Rao, K. Summers, and C. Teeger, “Interviews in the social sciences,” Nature Reviews Methods Primers, vol. 2, no. 73, pp. 1–13, 2022, https://doi.org/10.1038/s43586-022-00150-6.

- M. G. Henriksen, M. Englander, and J. Nordgaard, “Methods of data collection in psychopathology: the role of semi-structured, phenomenological interviews,” Phenomenol. Cogn. Sci., vol. 21, pp. 9–30, 2022, https://doi.org/10.1007/s11097-021-09730-5.

- A. Belina, “Semi-structured interviewing as a tool for understanding informal civil society,” Voluntary Sector Review, vol. 14, no. 2, pp. 331–347, 2023, https://doi.org/10.1332/204080522X16454629995872.

- Y. Voytenko Palgan, O. Mont, and S. Sulkakoski, “Governing the sharing economy: Towards a comprehensive analytical framework of municipal governance,” Cities, vol. 108, Art. no. 102994, 2021, https://doi.org/10.1016/j.cities.2020.102994.

- V. Braun and V. Clarke, “Thematic analysis,” in Encyclopedia of Quality of Life and Well-Being Research, pp. 7187–7193, 2024, https://doi.org/10.1007/978-3-031-17299-1_3470.

- V. Braun, V. Clarke, and N. Hayfield, “‘A starting point for your journey, not a map’: Nikki Hayfield in conversation with Virginia Braun and Victoria Clarke about thematic analysis,” Qualitative Research in Psychology, vol. 19, no. 2, pp. 424–445, 2022, https://doi.org/10.1080/14780887.2019.1670765.

- K. Dhakal, “NVivo,” Journal of the Medical Library Association (JMLA), vol. 110, no. 2, p. 270, 2022, https://doi.org/10.5195/jmla.2022.1271.

- M. K. Alam, “A systematic qualitative case study: questions, data collection, NVivo analysis and saturation,” Qualitative Research in Organizations and Management: An International Journal, vol. 16, no. 1, pp. 1–31, 2021, https://doi.org/10.1108/QROM-09-2019-1825.

- S. Dalkin, N. Forster, P. Hodgson, M. Lhussier, and S. M. Carr, “Using computer assisted qualitative data analysis software (CAQDAS; NVivo) to assist in the complex process of realist theory generation, refinement and testing,” International Journal of Social Research Methodology, vol. 24, no. 1, pp. 123–134, 2020, https://doi.org/10.1080/13645579.2020.1803528.

- M. K. Alam, “A systematic qualitative case study: questions, data collection, NVivo analysis and saturation,” Qualitative Research in Organizations and Management (QROM), vol. 16, no. 1, pp. 1–31, 2021, https://doi.org/10.1108/QROM-09-2019-1825.

- W. M. Lim, “What is qualitative research? An overview and guidelines,” Australasian Marketing Journal, 2024, https://doi.org/10.1177/14413582241264619.

- A. L. Nevedal, C. M. Reardon, M. A. Opra Widerquist, et al., “Rapid versus traditional qualitative analysis using the Consolidated Framework for Implementation Research (CFIR),” Implementation Science, vol. 16, Art. no. 67, 2021, https://doi.org/10.1186/s13012-021-01111-5.

- S. Park and M. Whang, “Empathy in Human-Robot Interaction: Designing for Social Robots,” International Journal of Environmental Research and Public Health, vol. 19, no. 3, p. 1889, 2022, https://doi.org/10.3390/ijerph19031889.

- E. Gkintoni, S. P. Vassilopoulos, and G. Nikolaou, “Next-Generation Cognitive-Behavioral Therapy for Depression: Integrating Digital Tools, Teletherapy, and Personalization for Enhanced Mental Health Outcomes,” Medicina, vol. 61, no. 3, p. 431, Mar. 2025, https://doi.org/10.3390/medicina61030431.

- P. Fatouros et al., “Randomized controlled study of a digital data driven intervention for depressive and generalized anxiety symptoms,” npj Digital Medicine, vol. 8, art. no. 113, 2025, https://doi.org/10.1038/s41746-025-01511-7.

AUTHOR BIOGRAPHY

Yih-Chang Chen received his Ph.D. in Computer Science from the University of Warwick, United Kingdom. He is currently an Assistant Professor in both the Bachelor Degree Program of Medical Sociology and Health Care and the Department of Information Management at Chang Jung Christian University, Taiwan. His academic expertise spans multiple interdisciplinary domains, including artificial intelligence (AI), software engineering, machine learning, social media applications, social work management, and long-term care. His research is driven by a commitment to bridging the gap between technology and its social applications. He actively engages in cross-disciplinary initiatives that integrate information technology, management science, and social welfare to tackle complex societal and healthcare challenges. In addition to his academic responsibilities, he currently serves as an Audit Committee Member in the Office of the President at Chang Jung Christian University and leads projects under Taiwan’s Ministry of Labor focused on the development and implementation of Employment-Oriented Curriculum Programs.

Chia-Ching Lin received her Ph.D. from Kobe University, Japan. She is currently an Assistant Professor in the Department of Finance at Chang Jung Christian University, Taiwan. Her research expertise spans multiple domains, including investment, portfolio management, financial management, insurance, international financial management, and financial securities regulation. Her academic contributions are marked by an interdisciplinary approach that integrates financial theory with practical insights to address globally relevant issues in economics and management. In addition to her academic endeavors, she has led Ministry of Labor-funded employment program initiatives aimed at advancing career-oriented curriculum development and workforce readiness. Her professional trajectory reflects a deep commitment to bridging academic research with policy application and cross-sector collaboration in finance, management, and public service.
